The New Era of Computing: An Interview with “Dr. Data”

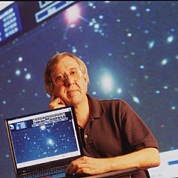

When it comes to thought leadership that bridges the divides between scientific investigation, technology and the tools and applications that make research possible, Dr. Alexander Szalay is one of the first scientists who spring to mind.

Szalay, whom we will dub “Dr. Data” for reasons that will be explained in a moment, is a distinguished professor in the Department of Physics and Astronomy at Johns Hopkins University (JHU). Aside from his role as a scientist, and thus an end user of high performance computing hardware and applications, he also serves as director of the JHU Institute for Data Intensive Engineering and Science.

Part of what makes Dr. Szalay unique is that he sees scientific technology from both sides of the fence: as a physicist reliant on massive simulations and supercomputers, and as a computer scientist probing the underlying performance, efficiency and architectural issues that are increasingly important in the age of data-intensive computing. He is the architect for the Science Archive of the Sloan Digital Sky Survey and project director of the NSF-funded National Virtual Observatory, and has penned over 340 journal articles on topics including theoretical cosmology, observational astronomy, spatial statistics, and computer science.

Szalay’s world of diverse research hinges on solving big data problems and working with the complex algorithms and applications that create the data. In addition to his astronomy and physics research, Szalay has been presenting on topics such as “Extreme Data-Intensive Computing with Databases”, a topic that caught our attention recently and prompted the following interview.

What is missing in current computing architectures as we look toward the future of data-intensive computing (i.e. involving not just petabytes, but exabytes of data)?

There is a growing distance between the computational and the I/O capabilities of high-performance systems. With the growing size of multicores and GPU-based hybrid systems, we are talking about many Petaflops over the next year (10 PF for the Jaguar upgrade by 2013…).

At the same time, the scientific challenges involve increasingly large data sets. These are either generated by new peta-instruments (like the Large Hadron Collider) or by huge numerical simulations running on our largest supercomputers. In both cases the large data sets represent a new challenge.

In commerce, large data are dealt with using a scale-down-and-out architecture (see Google, Facebook, etc). For example, the internal connectivity of the Google cloud is quite high: the disk I/O speed of each node is comparable to the network bandwidth. We need the ability to read and write at a much larger I/O bandwidth than today, and we also need to be able to deal with incoming and outgoing data streams at very high aggregate rates.

In one of your recent talks you said that in data-intensive computing, it’s worthwhile to revisit Amdahl’s Law, especially since we can use it to examine current computing architectures and workloads. You say that existing hardware can be used to build systems that are much closer to an ideal Amdahl machine… can you describe this thought in more detail?

Gene Amdahl noticed in 1965 that for a balanced system 1 bit of sequential I/O is needed for every instruction. On today’s supercomputers this ratio is typically 0.001; the systems are consciously very CPU-heavy. We have built the GrayWulf (winner of the SC08 Data Challenge) out of standard Dell components, with an Amdahl number of 0.5. eBay’s analytics system has an Amdahl number of over 0.83 (O. Ratzesberger, private communication).

For heavy-duty analytics we need to scrape through very large amounts of data, and with a large dynamic data stream the cache hierarchy of the processors will not provide as much benefit as for a high-end computational problem in fluid dynamics.

In particular, one can eliminate a lot of system bottlenecks from the storage system by using direct-attached disks and a good balance of disk controllers, ports and drives. It is not hard to build inexpensive servers today where cheap commodity SATA disks can stream over 5 GB/s per server. This streaming data rate of 40 Gbit/s would be Amdahl-balanced by 12 cores running at 3.3 GHz, which is right at the best price-performance point of a normal dual-socket Westmere motherboard.
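The balance arithmetic in this answer can be checked with a few lines of Python. This is only an illustrative sketch: the function name `amdahl_number` is made up for this example, and it assumes each core retires one instruction per clock cycle, which the interview does not state.

```python
def amdahl_number(io_bytes_per_sec: float, instructions_per_sec: float) -> float:
    """Bits of sequential I/O per second divided by instructions per second."""
    return (io_bytes_per_sec * 8) / instructions_per_sec


# Figures quoted in the answer above.
io_rate = 5e9        # 5 GB/s of streaming disk I/O per server
cores = 12
clock_hz = 3.3e9     # 3.3 GHz

# Assumption (not from the interview): one instruction per cycle per core.
instruction_rate = cores * clock_hz

ratio = amdahl_number(io_rate, instruction_rate)
print(f"{ratio:.2f}")  # prints 1.01
```

A value close to 1 is what "Amdahl-balanced" means here: roughly one bit of sequential I/O for every instruction executed.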

What do you expect the role of GPGPU computing to be in data-intensive computing?

GPGPUs are extremely well suited for data-parallel, SIMD processing. This is exactly what many data-intensive computations are about. While GPUs first emerged in highly floating point-intensive calculations related to graphics and rendering, even in those applications the difficulty has been to feed the cards with data fast enough to keep them busy. Building systems where GPGPUs are co-located with fast local I/O will enable us to stream data onto the GPU cards at multiple GB per second, enabling their stream processing capabilities to be fully utilized.

You have mentioned the idea of a “hypothetically cheap, yet high performance multi-petabyte system” currently under consideration at JHU… can you describe this and how it might (theoretically, of course) address the needs of data-intensive computing?

We have received a $2M grant from the National Science Foundation to build a new instrument, a microscope and telescope for Big Data, that we call the Data-Scope. The system will have over 6 PB of storage, about 500 GB/s of aggregate sequential I/O, about 20M IOPS, and about 130 TFlops.

The system combines the high I/O performance of traditional disk drives with a smaller number of very high throughput SSD drives, high performance GPGPU cards and a 10G Ethernet interconnect. Various vendors (NVIDIA, Intel, OCZ, SolarFlare, Arista) have actively supported our experiments. The system is being delivered as we speak and should become operational by the end of the year.
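To put the quoted Data-Scope figures in perspective, a rough estimate of how long one sequential pass over the full store would take is sketched below. The helper name `full_scan_seconds` is hypothetical, the quoted "over 6PB" and "about 500GBytes per sec" are treated as exact decimal values, and the aggregate rate is assumed to be sustained for the whole scan, so the result is an idealized lower bound rather than a measured number.

```python
def full_scan_seconds(capacity_bytes: float, aggregate_bytes_per_sec: float) -> float:
    """Idealized time to stream the whole store once at the aggregate rate."""
    return capacity_bytes / aggregate_bytes_per_sec


# Approximate figures quoted for the Data-Scope (decimal units assumed).
capacity = 6e15      # 6 PB of storage
bandwidth = 500e9    # 500 GB/s aggregate sequential I/O

seconds = full_scan_seconds(capacity, bandwidth)
hours = seconds / 3600
print(f"{seconds:.0f} s, about {hours:.1f} hours")  # prints: 12000 s, about 3.3 hours
```

Under these assumptions, the entire multi-petabyte data set could in principle be read end to end in an afternoon.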

How are developments in HPC aiding developments in data-intensive computing; where do these two areas of computing come together but more importantly, where are their differences felt most?

Scientific computing is increasingly dominated by data. More and more scientific disciplines want to perform numerical simulations of the complex systems under consideration. Once these simulations are run, many of them become a “standard reference” that others all over the world want to use or compare against. These large data sets generated on our largest supercomputers must, therefore, be stored and “published”. This creates a whole new suite of challenges, similar in nature to data coming off of the largest telescopes or accelerators. So it is easy to come up with examples of convergence.

The differences are felt by the scientists who are working on their science problems “in the trenches”, where it is increasingly hard to move and analyze the large data sets they can generate. As a result, they are still building their own storage systems, since the commercially available ones are either too expensive or too slow (or both). We need the equivalent of the Beowulf revolution: a simple, off-the-shelf recipe, based on commodity components, to solve this new class of problems.
