The Week in Big Data Research

We span the globe for this week’s big data research and development stories. From modeling in Poland and Montreal to analyzing e-literature in Norway and hashing in Japan, this week has a distinct international flair. Also included this week are studies of big data compression out of MIT and private data center access from Microsoft.

In case you missed it, last week’s research can be found here. Without further ado, we head to Montreal to kick off this week’s big data research.

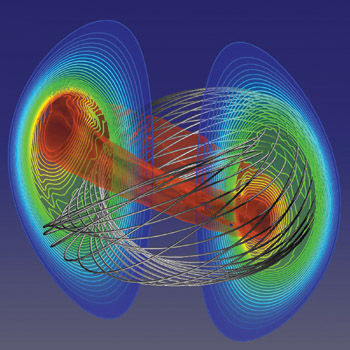

Democratization of Modeling and Simulations

For researchers at Gdansk University in Poland and McGill University in Montreal, computational science and engineering play a critical role in advancing research and addressing daily-life challenges across almost every discipline. People use search engines, social media, and selected aspects of engineering to further their personal and professional growth.

Approaches such as behavioral model analysis, simulation, big data extraction, and human computation have recently been gaining momentum. Together, they help users at mass scale become more aware of their surroundings and themselves.

In their research, they propose an online platform for modeling and simulation (M&S) on demand. It aims to allow an average technologist to capitalize on any acquired information and its analysis based on scientifically-founded predictions and extrapolations.

Their overall objective is achieved by leveraging open innovation in the form of crowd-sourcing along with clearly defined technical methodologies and social-network-based processes. The platform aims at connecting users, developers, researchers, passionate citizens, and scientists in a professional network and opens the door to collaborative and multidisciplinary innovations. An example of a domain-specific model of a pick and place machine illustrates how to employ the platform for technical innovation and collaboration.

Architecture for Compression of Big Data

Scaling of networks and sensors has led to exponentially increasing amounts of data.

Researchers from CSAIL at MIT argue that compression is a way to deal with many of these large data sets, and application-specific compression algorithms have become popular in problems with large working sets.

Unfortunately, these compression algorithms are often computationally difficult and can result in application-level slow-down when implemented in software. To address this issue, they investigated ZIP-IO, a framework for FPGA-accelerated compression.

Using this system they demonstrated that an unmodified industrial software workload can be accelerated 3x while simultaneously achieving more than 1000x compression in its data set.

Ensuring Private Access in the Data Center

According to researchers from Microsoft, IBM, and AMD, recent events have shown online service providers the perils of possessing private information about users. Encrypting data mitigates but does not eliminate this threat: the pattern of data accesses still reveals information.

Thus, the team presented Shroud, a general storage system that could hide data access patterns from the servers running it. Shroud, the researchers note, functions as a virtual disk with a new privacy feature: the user can look up a block without revealing the block’s address.

The resulting virtual disk could be used for many purposes according to the team, including map lookup, microblog search, and social networking. Shroud targets hiding accesses among hundreds of terabytes of data.

They achieved their goals by adapting oblivious RAM algorithms to enable large-scale parallelization. Specifically, they show, via techniques such as oblivious aggregation, how to securely use many inexpensive secure coprocessors acting in parallel to improve request latency. Their evaluation combines large-scale emulation with an implementation on secure coprocessors.
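
To see what hiding an access pattern means, it can help to look at the simplest possible baseline: read every block on every request, so the server observes the same sequence no matter which block was wanted. The sketch below is not Shroud's algorithm and not an oblivious RAM construction; `LoggingStore` and `oblivious_read` are hypothetical names invented for this illustration, and a real system would also encrypt the blocks and avoid the data-dependent branch.

```python
from typing import List


class LoggingStore:
    """Hypothetical untrusted block store that records every index it is asked for."""

    def __init__(self, blocks: List[bytes]):
        self._blocks = blocks
        self.access_log: List[int] = []

    def read(self, index: int) -> bytes:
        self.access_log.append(index)  # this is what the server gets to observe
        return self._blocks[index]

    def __len__(self) -> int:
        return len(self._blocks)


def oblivious_read(store: LoggingStore, wanted: int) -> bytes:
    """Naive linear scan: touch every block and keep only the wanted one.

    The sequence of indices sent to the store is always 0..N-1, so it does
    not depend on `wanted`. Assumption: this is an illustrative baseline only.
    """
    result = b""
    for i in range(len(store)):
        block = store.read(i)
        if i == wanted:
            result = block
    return result


if __name__ == "__main__":
    store = LoggingStore([b"alpha", b"beta", b"gamma", b"delta"])
    print(oblivious_read(store, 2))
    print(store.access_log)
```

Each request here costs one read per stored block, which is impractical at the hundreds-of-terabytes scale mentioned above. Reducing that cost is the reason oblivious RAM algorithms exist.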

Exploring Methodologies for Analysing Electronic Literature

Electronic literature is digitally native literature that, to quote the definition of the Electronic Literature Organization, “takes advantage of the capabilities and contexts provided by the stand-alone or networked computer”.

Researchers from the University of Bergen in Norway argue that electronic literature is only published online and thus digital methods would seem an obvious technique for studying and analysing the genre. Electronic literature works are multimodal, highly variable in form and only rarely present linear texts, so data mining the works themselves would be difficult.

Instead, they wished to take what Franco Moretti has called a “distant reading” approach, where one looks for larger patterns in the field rather than in individual works. However, the lack of established metadata for electronic literature makes this difficult as there is no central repository or bibliography of electronic literature.

The ELMCIP Knowledge Base of Electronic Literature is a human-edited, Drupal database consisting of cross-referenced entries on creative works of and critical writing about electronic literature as well as entries on authors, events, exhibitions, publishers, teaching resources and archives.

All nodes are cross-referenced so you can see at a glance which works were presented at an event, and follow links to see which articles have been written about those works or which other events they were presented at. Most entries simply provide metadata about a work or event, but increasingly they are also gathering source code of works and videos of talks and performances.

The Knowledge Base provided them with a growing wealth of data that they are beginning to analyse and look at in terms of visualisations, social network analysis and other methods.

A Virtual Node Method of Hashing

In a distributed search system, according to researchers from Hitachi and Osaka University, the way documents are mapped to and managed on segmented indexes is key to balancing the load of distributed search and to reconfiguring the cluster efficiently.

Consistent hashing is an advanced data-mapping method that minimizes network traffic and redundant index data processing during index reconfiguration. However, if the cluster consists of several thousand nodes, it requires huge memory resources. Furthermore, index reconfiguration takes a long time because of the overhead of many index-splitting processes.
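
For readers unfamiliar with the technique, the following is a minimal sketch of conventional consistent hashing with virtual nodes. It is not the researchers' slot-based method. The class `HashRing`, the helper `moved_after_adding_node`, the node names, and the choice of 100 virtual nodes per physical node are all assumptions made for illustration.

```python
import bisect
import hashlib
from typing import Dict, List, Set, Tuple


def _hash(key: str) -> int:
    """Map a string to a point on the ring (a 64-bit integer)."""
    return int.from_bytes(hashlib.sha256(key.encode()).digest()[:8], "big")


class HashRing:
    """Conventional consistent hashing with virtual nodes (illustrative only)."""

    def __init__(self, vnodes_per_node: int = 100):
        self.vnodes_per_node = vnodes_per_node
        self._points: List[int] = []       # sorted ring positions
        self._owner: Dict[int, str] = {}   # ring position -> physical node

    def add_node(self, node: str) -> None:
        for i in range(self.vnodes_per_node):
            point = _hash(f"{node}#{i}")
            if point in self._owner:       # skip the (very unlikely) collision
                continue
            bisect.insort(self._points, point)
            self._owner[point] = node

    def lookup(self, key: str) -> str:
        """Return the node owning the first ring point clockwise from the key."""
        idx = bisect.bisect_right(self._points, _hash(key)) % len(self._points)
        return self._owner[self._points[idx]]


def moved_after_adding_node(num_docs: int = 10_000) -> Tuple[float, Set[str]]:
    """Add a fifth node and report which documents changed owner."""
    ring = HashRing()
    for name in ("node-0", "node-1", "node-2", "node-3"):
        ring.add_node(name)
    docs = [f"doc-{i}" for i in range(num_docs)]
    before = {d: ring.lookup(d) for d in docs}
    ring.add_node("node-4")
    moved = [d for d in docs if ring.lookup(d) != before[d]]
    return len(moved) / num_docs, {ring.lookup(d) for d in moved}


if __name__ == "__main__":
    fraction, destinations = moved_after_adding_node()
    print(f"fraction of documents moved: {fraction:.2f}")
    print(f"moved documents now live on: {destinations}")
```

Only documents claimed by the new node change owner, which is why reconfiguration traffic stays low. The ring also keeps one entry per virtual node, so the mapping table grows with both the node count and the number of virtual nodes per node, which is the memory cost described above.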

To solve these issues, they proposed a new method called the slot-based virtual node method of consistent hashing. As multiple nodes are added or removed, the method places or reallocates virtual nodes on the hash ring so that data migrates in bulk (“a bunch of” data migration) as far as possible, which optimizes the index reconfiguration.

Slot-based virtual node management reduced the memory consumed by the mapping information. They evaluated the memory consumption of both the conventional method and their proposed method to bring out the resource-saving effect. They also estimated the elapsed times of index reconfiguration based on data processing models to verify the reduction in time achieved by their method.