Hadoop Version 2: One Step Closer to the Big Data Goal

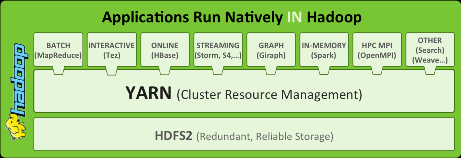

The wait for Hadoop 2.0 ended yesterday when the Apache Software Foundation (ASF) announced the availability of the new big data platform. Among the most anticipated features is the new YARN scheduler, which will make it easier for users to run different workloads–such as MapReduce, HBase, SQL, graph analysis, and stream processing–on the same hardware, and all at the same time. Better availability and scalability, and a smoother upgrade process, round out the new platform, as Hadoop creator Doug Cutting explains, but still not everybody is happy with Hadoop.

Yesterday’s launch of Hadoop 2.2 marks the first stable release of the version 2 line, which is the first major upgrade to the big data framework since version 0.20 (what has been renamed version 1.0) came out in 2010. The open source bits are ready to be downloaded by do-it-yourself coders. The big Hadoop distributors, like Cloudera, Hortonworks, MapR Technologies, and Intel are expected to begin distributing their Hadoop 2.0 releases in the very near future.

Yesterday’s launch of Hadoop 2.2 marks the first stable release of the version 2 line, which is the first major upgrade to the big data framework since version 0.20 (what has been renamed version 1.0) came out in 2010. The open source bits are ready to be downloaded by do-it-yourself coders. The big Hadoop distributors, like Cloudera, Hortonworks, MapR Technologies, and Intel are expected to begin distributing their Hadoop 2.0 releases in the very near future.

In an interview with Datanami, Cutting, who is also an ASF board member and chief architect at Cloudera, gave us the low down on the high technology wrapped up in the version 2 release.

“The bigger change we’re going to see is a wider mix of workloads that are supported by the new platform,” Cutting says. “With the YARN scheduler in there, we can now better intermix different kinds of workload and better utilize resources when these loads are inter-mixed. So we’ll be able to get graph processing in here along with SQL and other things, and have them effectively sharing memory, CPU, disk, and network I/O more effectively than they can today. It gets a lot finer grained integration.”

Not only can a single Hadoop version 2 cluster simultaneously support the different batch and real-time workloads, but they can simultaneously support different groups of users. As part of that trick, Hadoop needed better security, which is also a big component of the new version.

Part of sharing storage means sharing security, Cutting says. “You need to have a common authentication and common authorization framework if you’re going to be sharing storage. And to be able to do all these things with a high degree of confidence that one isn’t going to stomp on the other,” he says. “The promise of this platform is that you can bring different workloads and different groups out of their siloed data systems, into a common data system. But that only works if the common system supports multi-tenancy, if it permits people to run the things they need to run on the schedule they need to run them, and this is a huge step forward on that agenda.”

The big promise of Hadoop as an “operating system” for big data has yet to be realized. For starters, many organizations are still in the experimentation phase with Hadoop, and have not yet rolled out production Hadoop clusters. Cloudera has a handful of customers running really big Hadoop clusters (bigger than 1,000 nodes) and a lot of customers running smaller clusters, on the order of 50 to 100 nodes.

Keep in mind, even at 50 machines, a cluster can be a phenomenal amount of computational power,” Cutting says. “It’s reasonable to have a few nodes around for doing testing of systems…But having multiple relatively equal-size clusters is usually wasteful because typically your different groups won’t be 100 percent saturating their system all the time.”

Upgrades should also be smoother with Hadoop 2, which brings support for rolling upgrades. “To go from 1.x to 2.x, you’re going to have to shut your cluster down, which is difficult for people serving consumers in production. Scheduling down time is not an easy thing to come by,” Cutting says. “Once you’re running in version 2, then you can do rolling upgrades. As we improve things in 2.x series of releases, you shouldn’t need to have any downtime when you upgrade.”

Windows is also now officially a supported operating system with Hadoop 2. While some customers have been running Hadoop 1.x on Windows through a compatibility layer, Windows will now get some love from the little yellow elephant in the form of more native support.

That is big news for one corner of the country. “It’s a big deal for Microsoft,” Cutting says. Will it trigger a stampede of new or existing Hadoop users onto the OS from Washington State? Probably not. Linux has a commanding lead over Windows not only in running Hadoop, but in running most of the Internet. That momentum isn’t likely to change any time soon.

Some of the Hadoop version 2 features have already been available from the Hadoop distributors. For instance, Cutting’s company, Cloudera, has already had support for some of the new HDFS federation capabilities that eventually made it into version 2.0 (and are now GA with 2.2). But for many of the other features–notably YARN scheduler–Hadoop distributors like Cloudera have waited until Hadoop 2.0 was finalized.

“Shortly, Cloudera will start rolling out the next major release of our distribution of Hadoop. This will be CDH 5, built entirely on Hadoop 2,” Cutting says. “That will start rolling out very soon with early beta versions. Early next year we should see people start to use Hadoop 2 in production. I think it will roll out pretty quickly into a lot of folks’ businesses. It helps that it’s all very back compatible. We spent a lot of time working on compatibility.”

The Hadoop world isn’t standing around now that version 2.2 is out the door. There are a lot of promising new Hadoop technologies coming down the pipeline, such as Spark and Storm, which are probably the top Hadoop Incubator Projects. It’s tough to say exactly when the next big capability will graduate from incubation, and what release of Hadoop it will make it into. But it’s safe to say there is plenty of work to be done.

“I think we’re going to see the platform get generalized, and turn into what I think of as the OS for big data,” Cutting says. “So we have SQL today, we recently brought search into the platform, and now we’re see support for streaming with systems like Storm and S4, and we’ll be integrating in-memory processing, with things like Spark,” Cutting says. “I think there’s going to be a succession of new functionality that’s well-integrated with the rest of the platform.”

There are, however, detractors from Hadoop, who see version 2 as coming up a bit short in the usability department. You can count 1010data CEO Sandy Steier among that group. “Hadoop 2.0 is more of the same,” Steier said in a statement to Datanami. “Despite improvements in reliability and development choices, it remains a tool for technologists, flying counter to the trend towards self-service. Business users continue to be left in the cold with respect to their big data analytical needs.”

To be sure, there is lots of room under the big data tent. Some of the complaints that Steier logged against Hadoop are valid–especially in the areas of Hadoop requiring lots of coding and IT expertise, as well as the hardware resources required to run it effectively. But few computer users have the capability to writing directly against the Windows or Linux kernels, either, but millions of users leverage those kernels everyday with applications that run on them.

It takes time to build an ecosystem of tools that insulate users from the technological wizardry that goes under the covers. We’re seeing that process play out with Hadoop right now, and while the covers are not yet super thick and cozy, the ecosystem is making definite process toward that end.

Related Items:

Please Stop Chasing Yellow Elephants, TIBCO CTO Pleads

The Big Data Market By the Numbers

YARN to Spin Hadoop into Big Data Operating System