The Real-Time Future of Data According to Jay Kreps

When Jay Kreps helped develop Kafka several years back, the LinkedIn engineer was just happy to replace a batch messaging process with a new data pipeline that worked in real-time. Fast forward to July 2015, and the CEO of Confluent is talking about transforming how businesses think about and use data.

If you haven’t heard about Apache Kafka yet, you likely will soon. At the simplest level, the open source software is a next-generation messaging bus for big data, providing a secure and efficient way to move bits from point A to point B. Whether you need to move credit card transaction data to a fraud-detection system, or keep a Hadoop data warehouse fed with the latest clickstreams for real-time bidding on advertising, Kafka can fit the bill.

But the distributed technology goes beyond that, and delivers the underlying plumbing that allows people to build real-time applications that sit atop Kafka. If you want to build a streaming analytic application using something like Apache Storm or Apache Spark Streaming or Project Apex from DataTorrent, then you’re likely going to use Kafka as the core underlying messaging layer.

Those are just some of the use cases for Kafka that Kreps foresees, but Kreps’ imagination takes it much further than that.

“It is a new way of thinking about it,” Kreps, the co-founder and CEO of Confluent, tells Datanami. “One of the realizations we came to was there was nothing in the company which generated data in batch. Customers, sales, clicks–whatever it is, it was always a continuous process that was generating a continuous stream of data.”

Batch processing is an artificial concept that was created in the infancy of the IT industry and implemented on the earliest mainframes, Kreps says. But the modern world doesn’t run in batch, and it’s time for new systems that can react more quickly to changing conditions.

“In a lot of domains, it’s actually critical [to have a real-time element], especially when people are talking about operational intelligence,” he says. “You don’t want to have a situation where your business is not functioning correctly and you only catch that after the fact, and when you’re trying to respond, you have to wait for the rollover of some very slow ETL process to find out if it truly worked. In some sense, it is new, but it’s almost overdue and very natural for the way the modern world works, which is built on top of totally digital systems that run more or less continuously.”

Confluent’s Big Round

Kafka’s journey from LinkedIn project to big data phenomenon was solidified today when Confluent–the company Kreps co-founded with two former LinkedIn colleagues, Neha Narkhede and Jun Rao–announced $24 million in Series B funding. (The Series B was slated to be $25 million, and there was another $1 million stake still available for private investors as this story went to press.) This investment, which includes $20 million from Index Ventures and $4 million from Benchmark, comes on the heels of the $6.9 million it attracted last fall when it was spun out of LinkedIn.

Confluent is in an excellent position to capitalize on the need for big data applications that work in real-time, including streaming analytics and the wider world of stream processing. The plan calls for keeping the core of Kafka open source, while building a collection of tools to help customers run the messaging systems. Some of those will be open source and some will be using traditional licenses.

“What we’re building is all the stuff that people otherwise did in-house,” Kreps says. “We’re building tools to monitor the Kafka system, as well as tools to monitor the flow of data through data pipelines. We’re building plugins to connect Kafka to all the data systems that companies run. We’re hoping to develop some of the stream processing capabilities that sit on top, and we’re hoping to secure the whole pipeline as data flows throughout your organization, especially if it’s critical.”

When companies have a dozen or a hundred applications tapping into a centralized Kafka cluster with more than 1PB of storage, keeping everything working well, without elevating the complexity level too far, can be a challenge.

“There’s a ton of work to do to make that something that’s doable and easy to get started with, as well as fleshing out the rest of this ecosystem, developing stream-processing capabilities, and making it easy to plug into other systems,” he says. “That’s our focus and that’s what we’re going to spend the money on–continuing to develop and extend the platform and help bring it to new customers.”

Stitching Together the Digital World

The architecture of Kafka has the capacity to be transformative due to the simplicity of its design. While Kreps and his colleagues at LinkedIn put a lot of hard work into making Kafka bulletproof and ensuring that it could scale and recover from failures without losing data, the elegance of Kafka’s design is what will spur adoption.

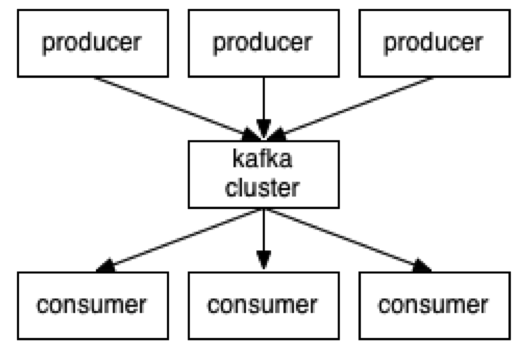

Kafka works by exposing streams of data to whichever parties need or request them. Once the work is done to encapsulate a stream of data within Kafka, that stream becomes available, via API, to any other users or applications that need it. That’s a very simple concept, but it’s powerful in its implications for building real-time systems.

Kreps explains:

“The way companies make use of Kafka is they basically take each thing that’s happening in their company–whether a sale or a click or a trade–and turn it into a feed, almost like a Twitter feed, something that’s continuously added to. What Kafka does is it makes that feed available to other systems for real-time processing or ingestion. So you can suck it up into Hadoop or react to what’s happening in a program, an application that a developer might write, or in a stream processing system like Storm,” he says.
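To make the "feed" idea concrete, here is a minimal in-memory sketch in Python. It is not Kafka's client API: the `Feed` and `Reader` classes and the example events are hypothetical names invented for illustration. It only models the idea Kreps describes, in which events are appended to a feed once and any number of downstream systems read that same feed independently, each at its own pace.

```python
from dataclasses import dataclass, field


@dataclass
class Feed:
    """Toy stand-in for one feed: an append-only list of events."""
    events: list = field(default_factory=list)

    def append(self, event) -> int:
        """Add an event to the end of the feed and return its position."""
        self.events.append(event)
        return len(self.events) - 1

    def read(self, offset: int, max_events: int = 100) -> list:
        """Return up to max_events events starting at the given position."""
        return self.events[offset:offset + max_events]


@dataclass
class Reader:
    """A downstream system that remembers how far into the feed it has read."""
    feed: Feed
    offset: int = 0

    def poll(self) -> list:
        """Fetch events this reader has not seen yet and advance its position."""
        batch = self.feed.read(self.offset)
        self.offset += len(batch)
        return batch


# Hypothetical usage: two independent readers of one click feed.
clicks = Feed()
clicks.append({"user": "a", "page": "/home"})
clicks.append({"user": "b", "page": "/jobs"})

hadoop_loader = Reader(clicks)   # e.g. a bulk loader into a warehouse
stream_job = Reader(clicks)      # e.g. a real-time processing job

first_batch = hadoop_loader.poll()        # the two events appended so far
clicks.append({"user": "a", "page": "/profile"})
second_batch = hadoop_loader.poll()       # only the newly appended event
catch_up = stream_job.poll()              # all three events, in append order
```

Because each reader tracks its own position, adding another consumer does not disturb the producers or the existing readers, which is the property the quote above points to.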

Customers can have hundreds of Kafka feeds, and each Kafka broker in a cluster can handle a significant amount of data. If the amount of data thrown at Kafka exceeds what one broker can handle (hundreds of megabytes of reads and writes per second, per the official Kafka webpage), then groups of brokers can come together to handle the load. The system is fault-tolerant, scalable, and ensures the integrity and the order of data as it flows through the pipelines.
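One common way for a group of machines to share a feed while keeping related events in order is to route each event by a hash of its key, so that all events for the same key land in the same place. The sketch below illustrates that general technique only. The function names are hypothetical, and it uses CRC32 purely for determinism; it does not reproduce Kafka's own partitioning code.

```python
import zlib
from collections import defaultdict


def partition_for(key: str, num_partitions: int) -> int:
    """Map a key to a partition number; the same key always maps to the same one."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def route(events, num_partitions: int) -> dict:
    """Distribute (key, value) events across partitions, preserving arrival order."""
    partitions = defaultdict(list)
    for key, value in events:
        partitions[partition_for(key, num_partitions)].append((key, value))
    return partitions


# Hypothetical usage: card transactions keyed by card ID.
events = [("card-1", "swipe"), ("card-2", "swipe"), ("card-1", "refund")]
partitions = route(events, num_partitions=3)

# All events for card-1 sit in one partition, in the order they arrived.
card1_partition = partitions[partition_for("card-1", 3)]
card1_events = [value for key, value in card1_partition if key == "card-1"]
```

The trade-off in this scheme is that ordering holds per key rather than across the whole feed, which is usually what a consumer such as a fraud-detection system needs.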

Kreps continues:

“This becomes a really easy way to tie together what’s happening in different parts of the business, especially for large companies which are often broken into different business units running in different data centers with different tech stacks,” he says. “Being able to have this kind of unifying mechanism that captures what’s happening, that keeps the data all in sync across these systems and makes it universally accessible to everything else–is actually a huge payoff.”

Kafkas For All

When Bill Gates was starting Microsoft 40 years ago, he had an audacious plan to put a PC in every home (and he might have realized his dream, if it weren’t for those meddling iPhones!). Kreps is borrowing a bit from the grandmaster Gates with a similarly ambitious plan.

Kafka’s relationship to Hadoop, per Confluent

“Our fundamental belief is that this is something that makes sense at the heart of every company. There should be one of these at every company,” he says. “We don’t have that yet. So there’s lots of room to grow.”

There are already thousands of Kafka users, according to Kreps, including some large companies that have big production systems running atop Kafka. Besides LinkedIn, many of Silicon Valley’s top Web-scale outfits, such as Netflix, Uber, Pinterest, Tumblr, and Airbnb, are already using it. Compared to other types of scale-out, distributed technologies, such as NoSQL databases and Hadoop, the uptake has been rather swift. As the amount of data increases in the future, companies won’t have the luxury of a batch window to process it, which makes technologies like Kafka more necessary.

“It’s just easier to process this stuff as it happens because there’s so much of it,” he says. “I expect that every company will have some of this, and I expect that it will grow on top of Kafka.”
