The big data analytics ecosystem has matured over the last few years to the point where techniques such as machine learning no longer seem as exotic as they once were. In fact, consumers have even come to expect their products and services to be adaptive to their behavior, which is one of the hallmarks of machine learning. As we look to the future, the field of deep learning on GPUs is poised to carry the big data analytics torch forward into the realm of artificial intelligence.

While the theories behind deep learning are not new, the real-world application of deep learning in the modern sense is a relatively recent phenomenon that’s emerged in a big way over the past few years. Its rise is closely tied to the advent of modern GPUs, a fact that NVIDIA (NASDAQ: NVDA) co-founder and CEO Jen-Hsun Huang hammered home in his keynote at the GPU Technology Conference (GTC) last week.

“Five years ago the big bang of AI happened,” Huang said at GTC last Tuesday. “Amazing AI computer scientists discovered new algorithms that made it possible to achieve levels of results and perception using this technique called deep learning that nobody had ever imagined.”

NVIDIA founder and CEO Jen-Hsun Huang

At a high level, deep learning involves the use of layers of algorithms that “extract high-level, complex abstractions as data representations through a hierarchical learning process,” according to a 2015 article in the Journal of Big Data. “Complex abstractions are learnt at a given level based on relatively simpler abstractions formulated in the preceding level in the hierarchy.”
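To make the idea of stacked abstractions concrete, here is a minimal sketch in Python of a plain feed-forward network, in which each layer transforms the output of the layer before it. The function names (`relu`, `dense`, `forward`) and the hand-picked weights are illustrative assumptions. They are not taken from the Journal of Big Data article or from any deep learning framework. In a real system the weights are learned from large amounts of data, typically on GPUs, rather than written by hand.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def relu(v: Vector) -> Vector:
    """Element-wise rectified linear unit: negative values become 0."""
    return [max(0.0, x) for x in v]


def dense(weights: Matrix, bias: Vector, inputs: Vector) -> Vector:
    """One fully connected layer: computes weights @ inputs + bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, bias)]


def forward(layers: List[Tuple[Matrix, Vector]], inputs: Vector) -> List[Vector]:
    """Feed inputs through each layer in order.

    Returns every intermediate representation, so each level of the
    hierarchy is built only from the level directly before it.
    """
    representations = [inputs]
    h = inputs
    for weights, bias in layers:
        h = relu(dense(weights, bias, h))
        representations.append(h)
    return representations


# Hypothetical hand-picked weights for a 2 -> 2 -> 1 network (not trained).
toy_layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),
    ([[1.0, 1.0]], [-0.5]),
]
print(forward(toy_layers, [2.0, 1.0]))  # [[2.0, 1.0], [1.0, 1.5], [2.0]]
```

Each entry in the returned list is one level of the hierarchy: first the raw input, then successively more derived representations. Architectures such as convolutional or recurrent networks change what an individual layer computes, but they keep this layer-on-layer structure.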

Various deep learning architectures, such as deep neural networks, convolutional deep neural networks, deep belief networks, and recurrent neural networks, are useful for tackling well-defined big data processing problems, including computer vision, speech recognition, text mining, natural language processing, audio recognition, and bioinformatics. But those aren’t the only ways that deep learning will be applied, which is what makes this field so interesting.

Universal Applicability

What makes deep learning so powerful, and what makes its future so incredibly bright, according to NVIDIA’s Huang, is how universally applicable the new algorithms are to a host of big data problems.

For example, consider AlphaGo, the program that Google’s DeepMind subsidiary created to play the ancient Chinese game of Go. Researchers “taught” AlphaGo how to play Go by having an artificial neural network essentially play millions and millions of rounds of the game. Eventually, the system figured out the best strategies to play the game (which features a nearly infinite number of possible moves) well enough to beat one of the best Go players in the world.

The interesting bit is that the Chinese Web giant Baidu (NASDAQ: BIDU) used the same basic approach to train its speech recognition system, Deep Speech 2, to recognize different languages. This is significant, according to Huang, because it shows that one approach is driving breakthroughs in different areas.

“Using one general architecture, one general algorithm, we’re now able to tackle one problem after another problem after another problem,” he said. “The fundamental difference is this. In the old approach, programs were written by domain experts and it took time and refinement. In this new approach, you have a general algorithm called deep learning and what you need is massive amounts of data and huge amounts of high performance computing horsepower. It’s a fundamental different approach for developing software.”

The AI industry has exploded thanks to deep learning algorithms running atop GPUs, says NVIDIA’s Huang

So far, deep learning has primarily been the domain of major tech firms such as Google (NASDAQ: GOOG) and Baidu, which run the algorithms on massive GPU-powered clusters to power services they make available over the Web, such as image recognition and speech recognition. Other large companies, including Amazon (NASDAQ: AMZN), Microsoft (NASDAQ: MSFT), and IBM (NYSE: IBM), have also made significant investments in deep learning. Facebook (NASDAQ: FB) is also exploring the area, as is Salesforce (NYSE: CRM), which acquired AI startup MetaMind last week.

But like many other technologies that started out as research projects, deep learning is going mainstream. Thousands of AI startups have popped up in the last few years, and venture capitalists have invested $5 billion in them, according to Huang. Many of these startups are built on deep learning algorithms, which have become the key technology driving the emerging artificial intelligence/cognitive computing industry, an industry that analysts say will be worth upwards of half a trillion dollars in the next few years.

Why AI Needs Big Data

This surge of interest in deep learning and AI is good business for CrowdFlower, the crowdsourced data labeling service used by companies adopting machine learning. CrowdFlower founder and CEO Lukas Biewald says the fledgling AI industry is where the big data industry was several years ago.

NVIDIA calls the new DGX-1 “the world’s first deep learning supercomputer”

“At this point, big data has settled into a place where people care about it and companies are using technologies related to it,” Biewald told Datanami in an interview earlier this year. “I think AI is a little less understood…Everybody’s trying to figure out what’s their AI strategy. If you’re a CIO or CTO at any company, you’re probably thinking what’s my AI strategy right now for 2016 and beyond.”

The advent of deep learning techniques, combined with the big data explosion and specialized processing environments such as NVIDIA’s GPUs, is giving people an incredible tool for making sense of the world, and people are trying to figure out how to use it.

“The coolest thing you can do with your data is AI, and AI needs big data to work,” Biewald says. “People are starting to realize that machine learning isn’t smarter than people. It’s just able to look at way more stuff than people. Its attention span is a lot longer than a human’s…Now that companies are actually storing all these data, they can use it to train these algorithms and they’re way more successful than they were in the past.”

What Comes Next

So what comes next for deep learning? NVIDIA is certainly betting big that the technology will drive huge demand for its products, including the Tesla P100, a chip it unveiled last week that features 15 billion transistors, and the DGX-1, which it bills as the world’s first deep learning supercomputer, able to churn through more than a billion images per day.

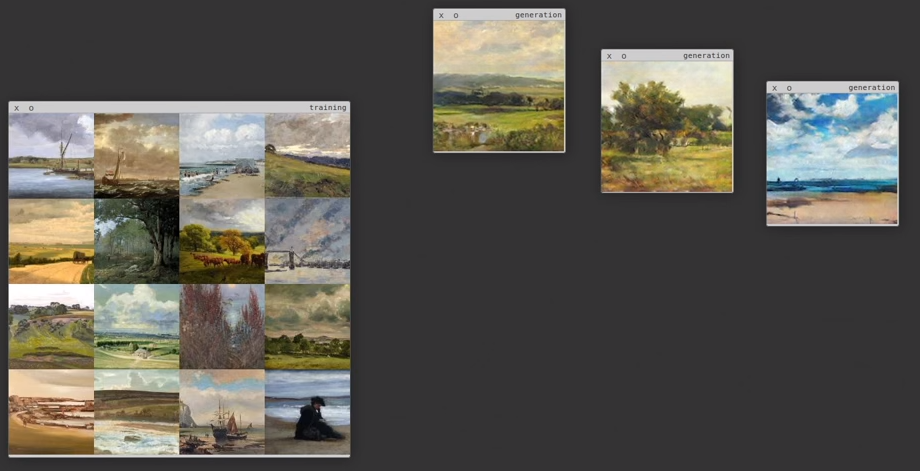

Facebook’s AI Research group was able to generate pictures based on features extracted from scanned Romanticism-era paintings

Of particular interest is the intersection of deep learning and Internet of Things (IoT). The huge amounts of machine data emanating from sensors are perfect places for deep learning to go to work and boost the accuracy of predictions, which is critical for everything from self-driving cars to functional robots. But better and faster image recognition is just the start. We’re on the cusp of seeing new services that are powered by deep learning algorithms trained on mounds of big data that could change the way we live.

We have yet to make full use of deep learning algorithms in the context of big data analytics, according to the authors of the aforementioned Journal of Big Data article, “Deep learning applications and challenges in big data analytics.” “The ability of deep learning to extract high-level, complex abstractions and data representations from large volumes of data, especially unsupervised data, makes it attractive as a valuable tool for big data analytics,” they write.

At GTC, the Facebook AI Research team showed how programs backed by deep learning could extract important features from a corpus of landscape paintings by Romanticism-era artists and create an original work that looks remarkably like a real painting. Google is building an email feature that will automatically reply to emails for you. And companies like Deep Genomics and Deep Instinct are using deep learning algorithms to accelerate the understanding of areas like genomics and cyber security, respectively.

“The beauty of deep learning is that it’s an approach that’s powerful enough to actually achieve super-human results without super humans to train them,” Huang said during his keynote. “It’s like Thor’s Hammer. It fell from the sky. It’s that big a deal.”

Related Items:

How NVIDIA Is Unlocking the Potential of GPU-Powered Deep Learning

Why Google Open Sourced Deep Learning Library TensorFlow

Inside Yahoo’s Super-Sized Deep Learning Cluster
