Kognitio Pivots SQL Data Warehouse Into Hadoop

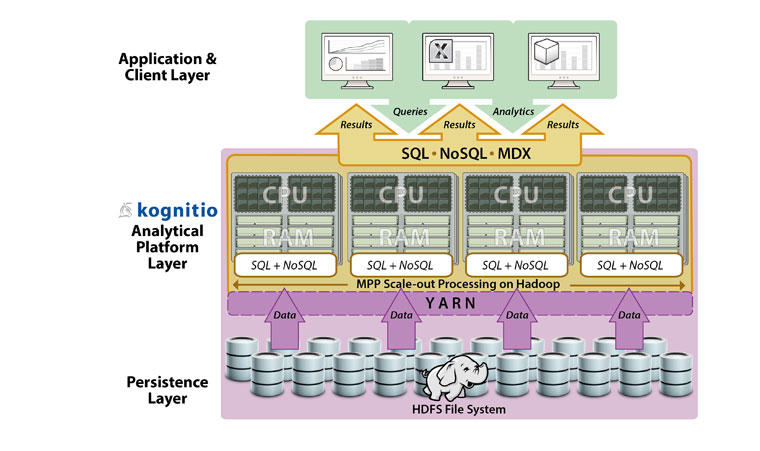

If you’re in the market for a SQL-on-Hadoop solution, you have many options to consider. And now that Kognitio has adapted its proven massively parallel processing (MPP) in-memory SQL database to run under YARN and MapReduce, you have one more.

The Chicago-based company announced that its Kognitio Analytics Platform was Hadoop-ready at the Strata + Hadoop World show in London earlier this year. During a conversation at the New York City version of the show last week, CTO Roger Gaskell told Datanami the new approach is having success.

By giving the Hadoop version of its SQL database away for free, Kognitio hopes to shake up the market for SQL-on-Hadoop solutions. The main target for the free software is organizations that have already adopted Hadoop but are having trouble scaling their systems to handle many concurrent SQL queries. Customers who have already adopted the Kognitio platform on traditional platforms or appliances will not see much benefit in migrating to Hadoop.

Kognitio got into the Hadoop game at the urging of one of its customers in the financial services industry. The company, which Kognitio identifies as one of the world’s largest credit card issuers (and one that never lets its name be used as a reference), was having trouble serving SQL queries from its Hadoop store to clients who would access the data using Tableau’s visualization tools.

The numbers involved in this particular account are pretty gaudy: About 100,000 corporate customers would have access to a 9PB Hadoop cluster storing 12 billion credit card transactions. At any given time, the system would be required to serve 300-400 concurrent clients. Considering that a single dashboard could generate up to 100 individual SQL queries, the system needed to be able to handle thousands of queries per second from hundreds of clients.
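To see how those figures add up to "thousands of queries per second," here is a rough back-of-the-envelope sketch. It assumes the worst case, in which every concurrent client refreshes a 100-query dashboard at the same moment, and it borrows the four-second dashboard render time that Kognitio's case study cites as a time budget. The function names are purely illustrative and are not part of any Kognitio tooling.

```python
# Rough load estimate using the figures quoted in the article.
# Assumption: every concurrent client refreshes a full dashboard at once
# (a worst case; real traffic would be spread out over time).

CONCURRENT_CLIENTS = (300, 400)      # low and high ends of the quoted range
MAX_QUERIES_PER_DASHBOARD = 100      # "up to 100 individual SQL queries"
RENDER_BUDGET_SECONDS = 4.0          # render-time figure from the case study


def peak_queries_in_flight(clients, queries_per_dashboard=MAX_QUERIES_PER_DASHBOARD):
    """Queries issued if every client refreshes one dashboard simultaneously."""
    return clients * queries_per_dashboard


def required_throughput(clients, render_budget_s=RENDER_BUDGET_SECONDS,
                        queries_per_dashboard=MAX_QUERIES_PER_DASHBOARD):
    """Queries per second needed to finish all dashboards within the budget."""
    return peak_queries_in_flight(clients, queries_per_dashboard) / render_budget_s


for clients in CONCURRENT_CLIENTS:
    print(clients, "clients:",
          peak_queries_in_flight(clients), "queries in flight,",
          required_throughput(clients), "queries/sec")
```

Under these assumptions, the burst works out to 30,000 to 40,000 queries, or 7,500 to 10,000 queries per second over a four-second window, which is in line with the "thousands of queries per second" description.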

After considering the options, the bank apparently asked Kognitio if it could provide a solution to this SQL query throughput problem. Kognitio took the challenge, and adapted its platform to run under YARN and to access the Hadoop Distributed File System (HDFS). Kognitio’s MPP database can install directly on Hadoop nodes, giving it the capability to bring HDFS-resident data quickly into its in-memory store.

The bank configured Kognitio to use 5TB of memory and 320 processing cores of its Hadoop cluster, according to Kognitio’s case study. The vendor says the system processes thousands of SQL queries per second, and maintains a dashboard render time of less than four seconds for 98% of users. Because Kognitio is a scale-out solution, the bank can add more CPU cores and RAM to increase performance.

“While there may be few companies in the world that have that kind of data on hand,” Gaskell stated in June, when the company launched its Hadoop solution, “the need for enterprise ready, simple solutions that can allow business users to rapidly interact with Hadoop-based data is increasingly universal.”

Most, if not all, SQL-on-Hadoop vendors claim performance superiority. Gaskell told Datanami that Kognitio plans to back up its big talk by running the new TPC-DS benchmark, which was unveiled last year.

Anybody can download and run the Kognitio for Hadoop solution. There are no restrictions on production uses of the software, which also supports NoSQL data types like JSON, and can run queries written in R and Python. However, customers who want technical support for the product will need to pay about $2,000 to $4,000 per node. By comparison, traditional Kognitio deployments run about $120,000 per TB.
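For a rough sense of scale, the two pricing models can be compared with a short calculation. This is only a sketch under stated assumptions: it reads the support range as $2,000 to $4,000 per node, the 20-node cluster is a hypothetical figure rather than one reported by Kognitio, and the 5 TB is borrowed from the bank's memory configuration even though the article does not say whether the per-TB price is measured against memory or stored data.

```python
# Illustrative comparison of the two pricing models mentioned in the article.
# Assumptions: the node count below is hypothetical, and applying the per-TB
# price to 5 TB of memory is a guess at how traditional pricing is measured.

SUPPORT_PER_NODE_USD = (2_000, 4_000)   # paid support for Kognitio on Hadoop
TRADITIONAL_PER_TB_USD = 120_000        # traditional Kognitio deployment


def hadoop_support_cost(nodes):
    """Return the (low, high) support cost in USD for a cluster of `nodes`."""
    low, high = SUPPORT_PER_NODE_USD
    return nodes * low, nodes * high


def traditional_cost(terabytes):
    """Return the cost in USD of a traditional deployment sized in TB."""
    return terabytes * TRADITIONAL_PER_TB_USD


HYPOTHETICAL_NODES = 20   # not from the article
MEMORY_TB = 5             # the bank's configured memory, per the case study

print("Hadoop support:", hadoop_support_cost(HYPOTHETICAL_NODES))
print("Traditional:", traditional_cost(MEMORY_TB))
```

With these placeholder inputs, support would cost $40,000 to $80,000 against $600,000 for a traditional deployment, but the gap depends entirely on the node count and on how the per-TB figure is applied.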

There’s one more benefit to running Kognitio. In the near future, the company will be giving away $5,000 to one lucky customer as part of its Kognitio-on-Hadoop competition. Organizations that download and install the software are asked to submit a short paper explaining how they used Kognitio on Hadoop. The entries will be judged by an independent industry analyst. The full details are available at the company’s website: www.kognitio.com.
