(Sergey Nivens/Shutterstock)

Yahoo, Cambridge Semantics, and Ravel Law are among the companies making news this week in the burgeoning field of graph and entity analytics.

Wouldn’t it be nice to automatically extract meaningful information from text, such as what it’s about and which major entities (people, places, things) it mentions, without explicitly training a program to do so? That’s essentially what Yahoo does with its Fast Entity Linker, an unsupervised entity recognition and linking system.

The big news out of Sunnyvale, California today is that Yahoo has decided to make Fast Entity Linker freely available as an open source product. Now anybody can use the software to automatically extract entities and perform basic link analysis on text.

One of the cool things about Fast Entity Linker is that it supports not just English, but also Spanish and Chinese. That makes it one of only three freely available entity recognition and linking systems that support multiple languages. The others, according to today’s Yahoo Research blog post, are DBpedia Spotlight and Babelfy.

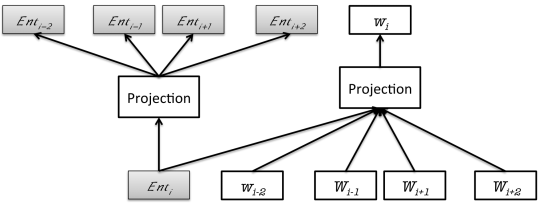

Yahoo’s Fast Entity Linker (FEL) core was trained with text from Wikipedia, and uses entity embeddings, click-log data, and efficient clustering methods to achieve high precision, according to Yahoo Research engineers. “The system achieves a low memory footprint and fast execution times by using compressed data structures and aggressive hashing functions,” the company says.

How Yahoo’s FEL trains word embeddings and entity embeddings simultaneously. (Image courtesy Yahoo Research)
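To make the idea of entity linking concrete, here is a minimal Python sketch of one common approach: combine a prior over candidate entities for a surface form with the similarity between the surrounding words and each candidate's embedding. This is not FEL's actual algorithm or API. The alias table, the priors, the three-dimensional vectors, and the `link` function are all invented for illustration.

```python
import math

# Hypothetical alias table: surface form -> {entity id: prior probability}.
# A real system might estimate such priors from Wikipedia anchor text or
# click logs; these numbers are invented.
ALIAS_PRIORS = {
    "jaguar": {"Jaguar_(animal)": 0.55, "Jaguar_Cars": 0.45},
}

# Toy 3-dimensional embeddings (invented values).
WORD_VECS = {
    "engine": (0.9, 0.1, 0.0),
    "drove": (0.8, 0.2, 0.1),
    "jungle": (0.0, 0.9, 0.2),
    "river": (0.1, 0.8, 0.3),
}
ENTITY_VECS = {
    "Jaguar_(animal)": (0.1, 0.9, 0.2),
    "Jaguar_Cars": (0.9, 0.1, 0.1),
}


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def link(mention, context_words):
    """Pick the candidate entity with the best prior-plus-context score."""
    candidates = ALIAS_PRIORS.get(mention.lower())
    if not candidates:
        return None  # unknown surface form: nothing to link to

    # Average the vectors of the context words we have embeddings for.
    vecs = [WORD_VECS[w] for w in context_words if w in WORD_VECS]
    context = tuple(sum(dim) / len(vecs) for dim in zip(*vecs)) if vecs else None

    def score(entity):
        s = math.log(candidates[entity])  # log prior for this surface form
        if context is not None:
            s += cosine(context, ENTITY_VECS[entity])  # context agreement
        return s

    return max(candidates, key=score)


print(link("Jaguar", ["drove", "engine"]))
print(link("Jaguar", ["jungle", "river"]))
```

With no usable context, the sketch falls back to the prior alone, which is why the quality of the alias statistics matters so much in systems of this kind.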

Named entity extraction and linking systems like FEL are critical elements of many text analytics projects. The approach is particularly useful for fine-tuning search engines, recommender systems, and question-answering systems, and for performing sentiment analysis.

You can download Yahoo’s contribution at github.com/yahoo/FEL.

A Triple Crown

We also have news out of Boston, Massachusetts, where Cambridge Semantics claims it has “shattered” the previous record for loading and querying humongous sets of linked data, or “triples,” each of which consists of three related pieces of data: a subject, a predicate, and an object.
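For readers new to the term, a triple can be pictured as a three-field record. The Python sketch below is only an illustration of the data shape. The `Triple` type, the `match` helper, and the sample facts are invented here and have nothing to do with how Anzo stores data.

```python
from typing import NamedTuple


class Triple(NamedTuple):
    subject: str
    predicate: str
    obj: str


# Invented sample facts, one triple per statement.
TRIPLES = [
    Triple("Anzo", "developedBy", "CambridgeSemantics"),
    Triple("CambridgeSemantics", "basedIn", "Boston"),
    Triple("FEL", "developedBy", "Yahoo"),
]


def match(triples, subject=None, predicate=None, obj=None):
    """Return triples matching the given fields; None acts as a wildcard."""
    return [
        t for t in triples
        if (subject is None or t.subject == subject)
        and (predicate is None or t.predicate == predicate)
        and (obj is None or t.obj == obj)
    ]


# Everything we know about who developed what.
for t in match(TRIPLES, predicate="developedBy"):
    print(t.subject, "was developed by", t.obj)
```

Because every fact has the same shape, new kinds of facts can be added without changing a schema, which is the flexibility graph proponents point to.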

The company says its Anzo Graph Query Engine, which it obtained with its 2016 acquisition of Barry Zane’s SPARQL City, completed a load and query of one trillion triples on the Google Cloud Platform in less than two hours. That is 100 times faster than the previous solution running the same Lehigh University Benchmark (LUBM) at the same data scale, the company claims.

To put that into perspective, one trillion triples is equivalent to six months of worldwide Google searches, 133 facts for each of the 7 billion people on earth, 100 million facts describing each of 10,000 clinical trial studies, or 156 facts about each device connected to the Internet, according to Cambridge Semantics.
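The comparisons attributed to Cambridge Semantics only work out at the one-trillion-triple scale of the benchmark, as a quick back-of-the-envelope check in Python shows. The device count and the average rate below are derived from the quoted figures, not numbers the company published. The rate treats the whole two-hour load-and-query run as a single interval.

```python
TRILLION = 10**12

# 133 facts for each of 7 billion people.
people_total = 133 * 7 * 10**9           # 931 billion, roughly one trillion

# 100 million facts for each of 10,000 clinical trial studies.
trials_total = 100 * 10**6 * 10_000      # exactly one trillion

# 156 facts per connected device implies this many devices.
implied_devices = TRILLION / 156         # about 6.4 billion

# One trillion triples handled in under two hours implies at least this
# average rate over the whole run (load and query combined).
min_triples_per_second = TRILLION / (2 * 60 * 60)

print(f"people:  {people_total:,}")
print(f"trials:  {trials_total:,}")
print(f"devices: {implied_devices:,.0f}")
print(f"rate:    {min_triples_per_second:,.0f} triples/sec")
```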

Zane, who is Cambridge Semantics vice president of engineering, says long load times have been a big hurdle for enabling semantic-based analytics. “With the LUBM results, it’s been validated that a loading and query process that once took over a month’s worth of business hours can now be completed in less than two hours,” he says in a press release.

Anzo Graph Query Engine is a clustered, in-memory graph analytics engine designed to enable users to write and run ad hoc and interactive queries using open semantic standards, like SPARQL, which is a query language for Resource Description Framework (RDF).
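To give a flavor of what a SPARQL query asks for, the sketch below evaluates a two-pattern query over a handful of triples in plain Python. It is a toy matcher written for this illustration, not Anzo's engine or a real SPARQL implementation. The data, the predicate names, and the `solve` function are assumptions. The SPARQL text in the comment shows roughly how the same question would be written in the query language.

```python
# Roughly equivalent SPARQL (the ":" prefix is assumed to be declared elsewhere):
#   SELECT ?product ?city WHERE {
#     ?product :developedBy ?org .
#     ?org     :basedIn     ?city .
#   }

# Toy data as (subject, predicate, object) tuples.
TRIPLES = [
    ("Anzo", "developedBy", "CambridgeSemantics"),
    ("CambridgeSemantics", "basedIn", "Boston"),
    ("FEL", "developedBy", "Yahoo"),
    ("Yahoo", "basedIn", "Sunnyvale"),
]

# Terms starting with "?" are variables; everything else must match exactly.
QUERY = [
    ("?product", "developedBy", "?org"),
    ("?org", "basedIn", "?city"),
]


def solve(patterns, triples, binding=None):
    """Yield every variable binding that satisfies all patterns."""
    binding = binding or {}
    if not patterns:
        yield dict(binding)
        return
    first, rest = patterns[0], patterns[1:]
    for triple in triples:
        new = dict(binding)
        for term, value in zip(first, triple):
            if term.startswith("?"):
                # Bind the variable, or reject if it is already bound elsewhere.
                if new.setdefault(term, value) != value:
                    break
            elif term != value:
                break
        else:
            # All three positions matched: continue with remaining patterns.
            yield from solve(rest, triples, new)


results = [(b["?product"], b["?city"]) for b in solve(QUERY, TRIPLES)]
print(results)
```

The shared variable `?org` is what joins the two patterns. A nested scan like this would never cope with a trillion triples, which is where clustered, in-memory engines come in.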

Zane, who also created the ParAccel column-oriented database that today is at the heart of Amazon’s Redshift offering, says the graph approach is needed because increasing data volumes will break traditional analytics built atop inflexible relational databases.

“This benchmark record set by our Anzo Graph Query Engine signals a paradigm shift where graph-based online analytical processing (GOLAP) will find a central place in everyday business by taking on data analytics challenges of all shapes and sizes, rapidly accelerating time-to-value in data discovery and analytics,” he says.

Graphing Legal

This week also saw the launch of new semantic technology from Ravel Law, a San Francisco-based startup that’s looking to bring advanced analytics to the legal domain.

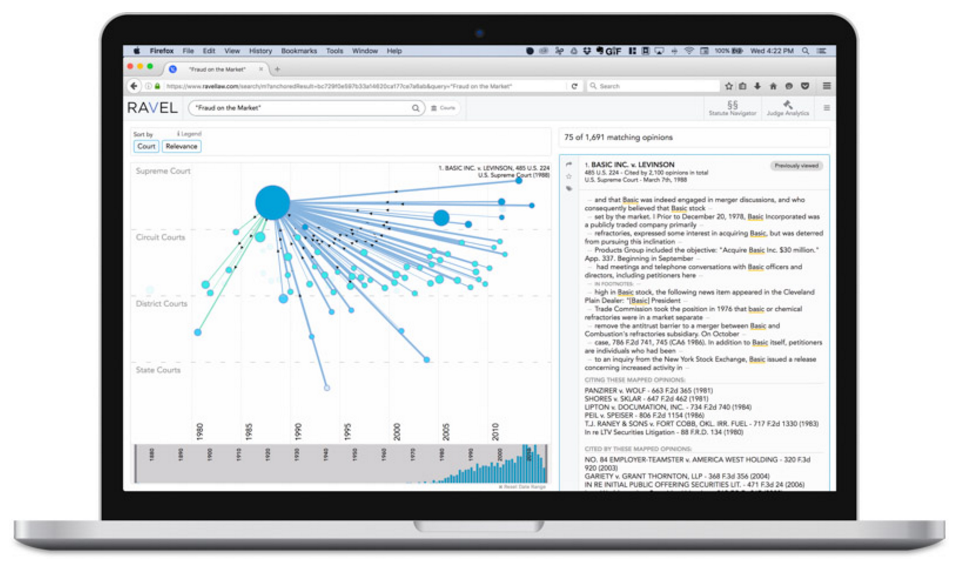

Ravel’s Court Analytics gives the user a graph-based interface to explore connections among judges, courts, and cases in the legal world.

Ravel’s new Court Analytics offering combines natural language processing, machine learning, and semantic technology to help lawyers get a leg up in court. By analyzing millions of court opinions and identifying patterns in language and case outcomes across 400 federal and state courts, the software enables lawyers to make better decisions when comparing forums, assessing possible outcomes, and writing briefs, the company says.

“Attorneys can inform their strategy with objective insights about the cases, judges, rules and language that make each jurisdiction unique,” Ravel CEO and co-founder Daniel Lewis says in a press release.

The product builds on the company’s exclusive access to one of the country’s largest libraries of case law: the one at Harvard University, which is second only to the Library of Congress. Harvard Law School has tapped Ravel in a partnership called the Caselaw Access Project to digitize 40 million pages of text across 43,000 volumes to make them freely accessible online. In exchange for doing the OCR grunt work, Ravel gets an eight-year license to commercially exploit redacted files.

The company, which was founded by Stanford Law graduates, looks to be doing exactly that. Court Analytics is its third product, behind Judge Analytics and Case Analytics. And it appears the company is aiming for more. “Judges are just one entity,” Lewis tells ABA Journal. “You can use analytics for individual firms, lawyers and companies. There’s fertile ground for new insights there.”

It’s unknown exactly what technologies Ravel uses. But according to a recent job posting for a lead data scientist, the company was looking for somebody familiar with named entity recognition systems, semantic relatedness, topic modeling, and automatic document summarization. Expertise in Spark, Weka, H2O, MLlib, Scala, Java, Python, and SQL was also listed.

