Scrutinizing the Inscrutability of Deep Learning

via Shutterstock

Most people can’t make heads or tails out of most algorithms. By “most people,” I’m referring to anyone who didn’t literally develop the algorithm in question or doesn’t manage the rules, data, and other artifacts that govern how its results drive automated decision processes.

An algorithm only starts to make sense if an expert can explain it in the proverbial “plain English.” Algorithms’ daunting size, complexity, and obscurity create a serious challenge: finding someone to speak authoritatively on how they might behave in particular circumstances. Often, there may be no one expert who has sufficient knowledge of the algorithm in question who can express in ordinary language how it might produce particular analyses, decisions, and actions.

In deep learning, machine learning, and other algorithmic disciplines, even the leading experts may lack the vocabulary for explaining to ordinary people why their handiwork generated a particular result. The mathematical, statistical, and other technical language they use is part of the problem, but it may not be the most serious issue.

Quite often, the interpretability conundrum is more deep-seated than that. For example, machine learning specialists do their best to explain in plain language how their creations emulate the neural processing that goes on in our brains. However, that’s not the same as explaining how any particular specimen of those artificial “brains” arrives at a particular assessment in a particular circumstance. And that neural-like processing, no matter how well described, can’t be equated with any particular rational thought process, because, like the synaptic firings in our own brains, it operates at a sub-rational level. If you were a lawyer, you would be at pains to explain to a jury the low-level decision processes executed by a specific machine learning algorithm.

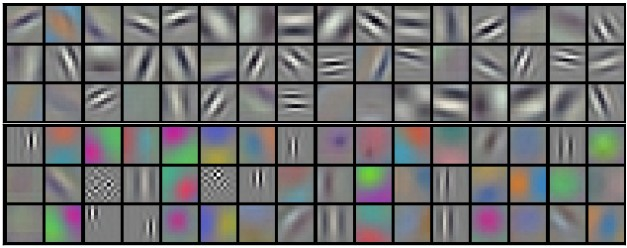

As we add more complexity to neural networks, the interpretability issues grow more acute. That’s when we’re in deep learning territory, which is the heart of most new, practical applications of this technology. To illustrate the magnitude of the interpretability challenge, try wrapping your head around the menagerie of algorithmic structures associated with this field, as discussed here. Even if you’re a deep learning specialist, you’d be at a loss to explain how each layer, neuron, connection, and hyperparameter constitutes some essential element of a rational thought process.

I doubt that it’s possible to break out the plain-English decision narrative associated with each element of a deep learning algorithm. That’s because the very feature that makes deep learning so powerful—multiple layers of abstraction that drive fine-grained predictions, classifications, and pattern manipulations—also distances an algorithm’s individual elements further and further from rational interpretability. Iterative training of a deep learning model is designed to boost its effectiveness in practical applications, but doesn’t imply that the model has any necessary validity (metaphysical, logical, or mathematical) beyond the fact of its brute utility.

In fact, the convoluted process of training deep learning networks renders any fine-grained interpretation of their underlying “cognitive processes” futile. To understand what I mean, check out this recent blog on “tips and tricks to make generative adversarial networks (GANs) work.” Try explaining an “ADAM Optimizer” to the judge when your GAN inadvertently crashes an autonomous vehicle into a crowd of innocent people. Not only does the training procedure discussed in that blog not even remotely resemble rational thought, it probably wouldn’t strike a neuroscientist as having any direct correspondence to the deep processing that goes on in organic brains.

As society comes to rely more fundamentally on algorithmic wizardry of this sophistication level, we’ll need new approaches for elucidating, explaining, and justifying these development approaches. Just as important, society will need a clear set of criteria for distinguishing which algorithmic processes can be rolled up into rational decision-path narratives.

That will not be easy. As Thomas Davenport states in his recent article, there are often trade-offs between the accuracy of deep learning models and their plain-language interpretability. “These new deep learning neural network models are successful in that they master the assigned task,” Davenport says, “but the way they do so is quite uninterpretable, bringing new meaning to the term ‘black box.”

“Try explaining an ‘ADAM Optimizer’ to the judge when your GAN inadvertently crashes an autonomous vehicle into a crowd of innocent people.”

The stakes of algorithmic interpretability could not be higher. Per Davenport’s discussion, society will increasingly pin life-and-death decisions—such as steering autonomous vehicles and diagnosing illnesses–on such algorithmic tools. Without plain-language transparency into how these algorithms make decisions, the cognitive revolution may stall out. Without visibility into which features contributed to real-world algorithmic decisions, trust in the technology will decline and backlash will ensue.

Considering how fundamental deep learning algorithms are to this revolution, I think Davenport is onto something in stating that “the best we can do is to employ models that are relatively interpretable.” Fortunately, many smart people are working on the intricacies of identifying what’s “relatively interpretable.” Davenport’s article points to a fascinating set of research papers in this regard.

Reading through those research studies, I’ve compiled a high-level list of tips for developers of deep learning, machine learning, and cognitive computing applications seeking to build interpretability into their output:

- Develop models in high-level probabilistic programming languages that ensure interpretability in the underlying logic behind algorithmic execution;

- Build parsimonious models that incorporate fewer, higher-dimension, more influential features;

- Rely on decision-tree induction models to call out predictive features and apply similarity analyses to ensembled decision trees;

- Apply comprehensive, consistent labeling in model feature sets;

- Use automated tools to rollup interpretable narratives associated with for model decision paths;

- Use interactive tools for assessing the sensitivity of predictions to various features, such as through hierarchical partitioning of the feature space

- Employ predictive models to assess the likelihood of various algorithmic outputs and the impacts that various features are likely to have in them;

- Use variational auto-encoders to infer interpretable models from higher-dimensional representations;

- Implement trusted time-stamped logging and archiving of narratives and the associated feature models, data, metadata and other artifacts that contributed to particular results; and

- Enable flexible access, query, visualization, and introspection of narratives associated with model inputs, features, and decision paths.

The data science community has a lot of work ahead of them to crystallize these and other approaches into consensus best practices. Many of the advances in this area will come from needing to responds to legal, regulatory, and practical mandates to mitigate the practical risks of deploying artificial intelligence into sensitive real-world scenarios.

Everybody agrees that the “black box” of algorithmic decision processes needs to be illuminated at all levels. But it’s not clear yet how practitioners will do that in a standard fashion that can be automated and made accessible to the mainstream analytics developers.

About the author: James Kobielus is a Data Science Evangelist for IBM. James spearheads IBM’s thought leadership activities in data science. He has spoken at such leading industry events as IBM Insight, Strata Hadoop World, and Hadoop Summit. He has published several business technology books and is a very popular provider of original commentary on blogs and many social media.

Related Items:

How Spark Illuminates Deep Learning

Why Deep Learning, and Why Now

IBM: We’re the Red Hat of Deep Learning