Why Cheap Learning Is In Your Future

(a-image/Shutterstock)

While deep learning racks up the likes among the big data crowd, a potentially bigger phenomenon is the emergence of extremely simple machine learning models that do not require sophisticated technical and mathematical skills, or what machine learning expert Ted Dunning calls “cheesy and cheap machine learning,” or simply “cheap learning.”

“Deep learning is all the rage, all the fashion, and of course everybody has to like it because of that, because we’re all so fashionable in the tech industry,” Dunning, who is MapR Technologies’ chief application architect, tells Datanami in a recent interview. “But what also is happening is cheap learning, which are very simple models to solve very simple problems, but which in aggregate give a very large value.”

In the new cheap learning age, developers will avail themselves of powerful, easy-to-use machine learning frameworks, and do so without having to understand all the complex mathematics driving the predictions, recommendations, and optimizations under the covers, Dunning says.

“They can use machine learning without even realizing it’s machine learning, and without having to become mathematical sophisticates,” says Dunning, who co-authored two machine learning books with Ellen Friedman: “Practical Machine Learning: Innovations in Recommendation” and “Practical Machine Learning: A New Look at Anomaly Detection.”

“If this observation is right, we still have to learn machine learning, but it doesn’t mean what most people think it means.”

Learning on the Cheap

Dunning uses his integrated development environment (IDE) as an example of cheap learning in action. As he codes, his IDE notices what libraries Dunning is using and what menu items he prefers, and reorders them on the screen in a way that makes them easier to use. “It learns from the code I’ve written,” Dunning says. “It’s analyzing and storing little nuggets about me, like ‘He never talks about these XML libraries, but he talks about Google’s all the time.’

MapR chief application architect Ted Dunning

“It is machine learning,” he continues. “It’s cheesy and cheap machine learning. It is cheap learning, not deep learning. But it’s incredibly valuable on a moment to moment basis, when you have 500 different examples of that, where the machine is scurrying around making life better for you.”
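The kind of behavior Dunning describes can be approximated with little more than counting. The sketch below is an illustration only, not how any particular IDE works: the class name `UsageRanker`, its methods, and the sample library names are all hypothetical. It keeps a tally of how often each item is used and lists the most-used items first.

```python
from collections import Counter


class UsageRanker:
    """Hypothetical helper: reorders a fixed list of items by observed usage."""

    def __init__(self, items):
        self.items = list(items)
        self.counts = Counter()

    def record(self, item):
        """Note one use of an item (for example, an import or a menu click)."""
        if item in self.items:
            self.counts[item] += 1

    def ranked(self):
        """Return items, most used first; ties keep their original order."""
        # sorted() is stable, so unused items stay in their initial order.
        return sorted(self.items, key=lambda item: -self.counts[item])


# Made-up usage history: the developer keeps reaching for two libraries.
ranker = UsageRanker(["xml.dom", "json", "guava", "logging"])
for used in ["guava", "json", "guava", "guava", "json"]:
    ranker.record(used)

print(ranker.ranked())  # ['guava', 'json', 'xml.dom', 'logging']
```

Nothing here needs training data prepared in advance or any mathematics beyond a tally, which is the point of the example: the list keeps adapting as new usage is recorded.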

Dunning expects cheap learning development techniques to become the predominant form of machine learning, as opposed to the bigger, heavier, and more complex forms of deep learning, which typically require huge data sets, massive clusters, and long training cycles. Cheap learning models, by contrast, are trained, released, and then go on to continue learning in an autonomous fashion.

Several factors are driving the rise of cheap learning, including the current state of big data tech, the trend toward greater abstraction levels, and the huge unmet demand for extracting real value out of data. “In the present, I think the data capitalization on the advantages that machine learning can give us, or give companies, is abysmal,” he says. “I think there’s a huge amount of latent value that companies are not taking advantage of.”

As technology improves and machine learning frameworks become easier to work with and languages like Python help to abstract away complexity, it will open up the world of machine learning to a new crowd of users who lack PhDs in mathematics, Dunning says.

Math Anxiety

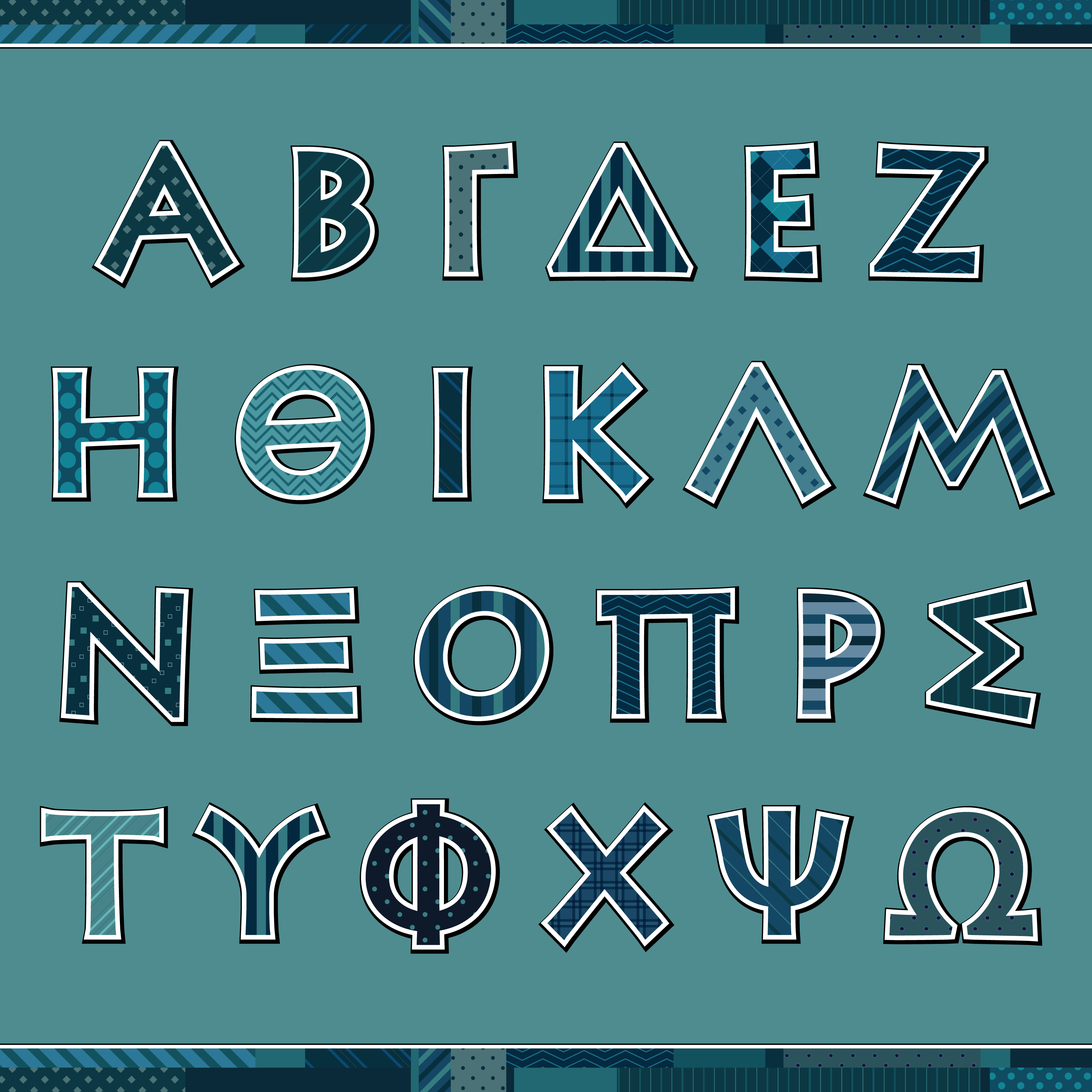

(drosostalitsa/Shutterstock)

Prospective big data application developers often “freeze up” when presented with ideas in a mathematical way. Dunning says his frequent co-author, Ellen Friedman, has documented this math fright during presentations by Dunning.

“She noticed that people would freeze up when I would start showing mathematical notation and matrixes on the screen,” he says. “I was explaining them in a very friendly way, but people see the Greek letter and they freak out.”

The solution was to eliminate the scary Greek letters and other overt signs of math, and use cartoons instead to represent basic ideas, like columns and rows.

“We made it not look very mathematical and people were very happy,” Dunning says. “Because the essence, the core problem there, was counting. It wasn’t PhD stuff. It was counting. And it turns out the really simple stuff pays off really big and can be deployed by people with massive amounts of math anxiety, and they can have high value out of that.”

That doesn’t mean deep learning goes away, says Dunning, who admits that deep learning is “important” and is “a big deal.” Dunning also isn’t saying that math is not important. “It’s tremendously useful and you’ll be much better at this stuff if you know the math,” he says.

While knowing the math is useful, it’s no longer necessary for getting results with machine learning, Dunning says. “I don’t think that most people should be running out and jumping into Andrew Ng’s machine learning course,” he says. “It talks a lot about the mathematical underpinnings. I think most people would be totally freaked out by that stuff and turned off, rather than turned on about it.”

Deep learning is “just one end of the spectrum,” Dunning says. Lower-end machine learning techniques will occupy the longer tail of opportunity, and will become more ubiquitous for developers with time.

“Everybody is going to wise up to doing [cheap learning], or at least everybody who’s new and doesn’t realize that it’s supposed to be hard, will be doing it,” he says. “It’ll become a very standard part of the toolkit.”

Higher Levels of Abstraction

In the new cheap learning paradigm, the combination of sophisticated frameworks like Theano and TensorFlow and powerful but simple languages like Python will help to create a new layer of abstraction that eliminates the need for big data application developers to understand the nitty-gritty details of high-level math and low-level execution models to get stuff done.

(Aleksandr N/Shutterstock)

Developers will be able to tell the computer what to do at a high level, Dunning says, and the computer will take care of the implementation details, whether it’s running on a 1,000-node cluster, a gaggle of GPUs, or just a laptop.

Dunning uses a car analogy to communicate his vision for how the cheap learning metaphor will evolve.

“I don’t understand cars anymore. I understand what combustion is and what gasoline is, but the actual details of how cars work escaped my grasp many years ago,” Dunning says. “But I have a mental model, when I want to get to work or talk to somebody about the car. We need to have that same sort of loss of detail, and a higher abstraction level, when talking about these parallel programs.”

Dunning sees machine learning frameworks evolving to become more powerful and separating us from the implementation details. “There will be some fiction, some mirage, that is understandable and is a useable metaphor for what actually does happen,” he says. “The literal truth of it will not be as important as the actionable truth of it.

“We live in a world of metaphors. We do not see quantum mechanics. We do not see spark plugs firing. We do not see all of the 36 computers in a modern car. We live with a metaphor that is operational, and the dashboard lights and things like that, give us a metaphorical indication of what’s going on inside the car, and machine learning models must do the same.”
