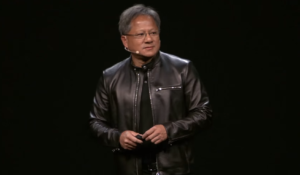

Nvidia’s Huang Sees AI ‘Cambrian Explosion’

Growing processing power, along with cloud access to the developer tools used to train machine-learning models, is making artificial intelligence ubiquitous across computing platforms and data frameworks, insists Nvidia CEO Jensen Huang.

One consequence of this AI revolution will be “a Cambrian explosion of autonomous machines,” ranging from billions of AI-powered Internet of Things devices to autonomous vehicles, Huang forecasts.

Along with a string of AI-related announcements coming out of the GPU powerhouse’s annual technology conference, Huang used a May 24 blog post to tout the rollout of Google’s (NASDAQ: GOOGL) latest iteration of its Tensor Processing Unit, the Cloud TPU, which accelerates workloads built with Google’s TensorFlow machine-learning framework.

The combination of Nvidia’s (NASDAQ: NVDA) new Volta GPU architecture and Google’s TPU illustrates how, in a variation on a familiar technology theme, “AI is eating software,” Huang asserted.

Contending that GPUs are defying the predicted end of Moore’s Law, Huang added: “AI developers are racing to build new frameworks to tackle some of the greatest challenges of our time. They want to run their AI software on everything from powerful cloud services to devices at the edge of the cloud.”

Along with the muscular Volta architecture, Nvidia earlier this month also unveiled a GPU-accelerated cloud platform geared toward deep learning. The AI development stack runs on the company’s distribution of Docker containers and is touted as “purpose built” for developing deep learning models on GPUs.

That dovetails with Google’s “AI-first” strategy, which includes the Cloud TPU initiative aimed at automating AI development. The new TPU is a four-processor board described as a machine-learning “accelerator” that can be accessed from the cloud and used to train machine-learning models.

Google said its Cloud TPU could be mixed and matched with the Volta GPU or with Skylake CPUs from Intel (NASDAQ: INTC).

Cloud TPUs were designed to be clustered in datacenters, with 64 of them linked together in “TPU pods” capable of 11.5 petaflops, according to Google CEO Sundar Pichai. The cloud-based tensor processors are aimed at compute-intensive training of machine-learning models as well as real-time tasks such as making inferences about images.

Along with TensorFlow, Huang said Nvidia’s Volta GPU would be optimized for a range of machine-learning frameworks, including Caffe2 and Microsoft Cognitive Toolkit.

Meanwhile, Nvidia is releasing as open source its own version of a “dedicated, inferencing TPU,” called the Deep Learning Accelerator, which it designed into its Xavier chip for AI-based autonomous vehicles.

In parallel with those efforts, Google has been using its TPUs for the inference stage of deep neural networks since 2015. The company said TPUs have helped improve the effectiveness of various AI workloads, including language translation and image recognition programs.

Processing power, cloud access and machine-learning training tools are combining to fuel Huang’s projected “Cambrian explosion” of AI technology. “Deep learning is a strategic imperative for every major tech company,” he observed. “It increasingly permeates every aspect of work from infrastructure, to tools, to how products are made.”