AI, You’ve Got Some Explaining To Do

(Besjunior/Shutterstock)

Artificial intelligence has the potential to dramatically re-arrange our relationship with technology, heralding a new era of human productivity, leisure, and wealth. But none of that good stuff is likely to happen unless AI practitioners can deliver on one simple request: Explain to us how the algorithms got their answers.

Businesses have never relied more heavily on machine learning algorithms to guide decision-making than they do right now. Buoyed by the rise of deep learning models that can act upon huge masses of data, the benefits of using machine learning algorithms to automate a host of decisions are simply too great to pass up. Indeed, some executives see it as a matter of business survival.

But the rush to capitalize on big data doesn’t come without risks, both to the machine learning practitioners and to the people who are being practiced upon. The risk posed to consumers by poorly implemented machine learning automation is fairly well-documented, and stories of algorithmic abuse are not hard to find.

And now, as a result of the European Union’s General Data Protection Regulation (GDPR), the risks are being pushed back to the companies practicing the machine learning arts, which can now be fined if they fail to adequately explain to a European citizen how a given machine learning model got its answer.

“In addition to all the rights around your personal data, the right to be removed and so forth, there’s a passage in GDPR talking about the right to an explanation,” says Jari Koister, FICO Vice President of Product and Technology. “The consumer actually has the right to ask, Why did you make that decision?”

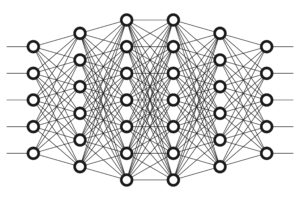

Getting a clear explanation of how a given model works is no simple task. In fact, with deep learning approaches, where hundreds of hidden layers populate a neural network, getting an answer can be downright difficult.

“How can we govern a technology its creators can’t fully explain?” is the question posed by Immuta in the first line of its new white paper, “Beyond Explainability: A Practical Guide to Managing Risk in Machine Learning Models.”

At first glance, the answer to that question doesn’t look good, the Immuta authors note. “Predictive accuracy and explainability are frequently subject to a trade-off,” they write. “Higher levels of accuracy may be achieved, but at the cost of decreased levels of explainability.”

But that trade-off simply won’t do, especially considering the $8 trillion that Bain & Company expects to be invested in automation over the coming years. While real challenges exist, there is no excuse for not tackling them as best we can.

People and Processes

Immuta prescribes a methodical approach to dealing with the problem of explainability in machine learning. The recommendations in its white paper are practical and represent common-sense steps that companies can follow to position people and processes to deliver explainability.

The Maryland company, which just received $20 million in venture funding to continue developing its big data security and privacy software, recommends a three-layered approach to tackling the explainability problem.

The first line of defense consists of the principal data scientists and data owners who develop and test the machine learning models. The second line is made up of data scientists and legal experts who validate the models and facilitate the legal review. The last line of defense is staffed by governance personnel, who conduct periodic audits at least every six months.

Once those pieces are in place, Immuta recommends turning to the data. Steps that should be performed include documenting the requirements for the model and assessing the quality of the data used by the model. The company recommends separating the model from the underlying data infrastructure for testing, and monitoring the underlying data to detect “data drift.” An alerting system should be built to detect unwanted changes in model behavior, including potential feedback loops.
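Immuta’s paper, as summarized here, doesn’t name a particular drift metric. As one illustration, the sketch below uses the Population Stability Index (PSI), a common way to compare a feature’s distribution at training time with what the model sees in production, and raises an alert past a threshold. The function names, the equal-width binning, and the 0.2 alert threshold (a widely used rule of thumb) are assumptions for illustration, not Immuta’s method.

```python
import math
from typing import Sequence


def population_stability_index(expected: Sequence[float],
                               actual: Sequence[float],
                               bins: int = 10) -> float:
    """PSI between a baseline sample and a current sample of one feature.

    Assumption: equal-width bins spanning the combined range of both samples.
    """
    lo = min(min(expected), min(actual))
    hi = max(max(expected), max(actual))
    width = (hi - lo) / bins or 1.0  # avoid zero width if all values are equal

    def proportions(values: Sequence[float]) -> list:
        counts = [0] * bins
        for v in values:
            idx = min(int((v - lo) / width), bins - 1)  # clamp the max value
            counts[idx] += 1
        # Small floor so empty bins don't cause log(0) or division by zero.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def check_drift(feature: str, baseline: Sequence[float],
                current: Sequence[float], threshold: float = 0.2) -> float:
    """Hypothetical alert hook: print a warning when PSI exceeds threshold."""
    psi = population_stability_index(baseline, current)
    if psi > threshold:
        print(f"ALERT: drift detected in {feature!r} (PSI={psi:.3f})")
    return psi
```

Comparing a sample such as `[i / 100 for i in range(100)]` against itself yields a PSI of 0.0, while comparing it against a sample concentrated in the upper half of the range triggers the alert. In production, the same check would run on a schedule for each monitored feature.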

Model Exercise

Taken together, these steps can put a company on the path to a better understanding of how its models work. However, Immuta stresses that this is an ongoing process and cautions against expecting a silver bullet that solves explainability once and for all.

“There is no point in time in the process of creating, testing, deploying, and auditing production ML where a model can be ‘certified’ as being free from risk,” the company says. “There are, however, a host of methods to thoroughly document and monitor ML throughout its lifecycle to keep risk manageable, and to enable organizations to respond to fluctuations in the factors that affect this risk.”

There are other approaches to tackling the explainability problem. FICO, for instance, has developed an approach to “exercise” the predictive models that it builds to show customers how different inputs directly lead to different outputs. This was the focus of its Explainable AI offering that the San Jose, California company launched in April.
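FICO’s actual tooling isn’t described in detail here, but the general idea of “exercising” a model can be sketched as a one-at-a-time sensitivity sweep: hold an applicant’s other inputs fixed, vary one, and record how the output moves. The scoring function and feature names below are hypothetical stand-ins, not a FICO model.

```python
from typing import Callable, Dict, Iterable, List, Tuple


def hypothetical_score(applicant: Dict[str, float]) -> float:
    # Hypothetical toy model for illustration only; not a real FICO score.
    return (600
            + 2.0 * applicant["years_accident_free"]
            - 40.0 * applicant["claims_last_3y"])


def exercise_model(model: Callable[[Dict[str, float]], float],
                   baseline: Dict[str, float],
                   feature: str,
                   values: Iterable[float]) -> List[Tuple[float, float]]:
    """Vary one input while holding the others fixed; record each output."""
    results = []
    for v in values:
        probe = dict(baseline)  # copy so the baseline is never mutated
        probe[feature] = v
        results.append((v, model(probe)))
    return results
```

For example, `exercise_model(hypothetical_score, {"years_accident_free": 2, "claims_last_3y": 1}, "claims_last_3y", [0, 1, 2])` returns `[(0, 604.0), (1, 564.0), (2, 524.0)]`, showing that each additional claim costs 40 points in this toy model.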

FICO’s Explainable AI offering is also the basis of the “Certificate of Explanations” that the company offers through its software. These certificates can take the form of user interface components that pop up on the screen, or of letters sent to consumers explaining how the computer made its decision.

“Of course you won’t see details, such as ‘Your score was 3.5 on this thing and 2.5 here,'” Koister says. “But you might abstract it and say ‘You need to be accident free for at least 12 more months and then I’ll give you another quote.'”
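Koister’s point is that consumers see abstracted reasons rather than raw feature scores. One common way to get there with a simple linear scorecard is to compute each feature’s contribution relative to a reference profile, rank the most negative contributions, and map them to plain-language messages. The weights, reference values, and messages below are hypothetical; FICO’s Certificate of Explanations may work quite differently.

```python
from typing import Dict, List

# Hypothetical scorecard weights, reference profile, and consumer messages.
WEIGHTS = {"years_accident_free": 2.0, "claims_last_3y": -40.0,
           "annual_mileage_k": -1.5}
REFERENCE = {"years_accident_free": 5, "claims_last_3y": 0,
             "annual_mileage_k": 10}
MESSAGES = {
    "years_accident_free": "Limited accident-free driving history",
    "claims_last_3y": "Number of recent claims",
    "annual_mileage_k": "High annual mileage",
}


def reason_codes(applicant: Dict[str, float], top_n: int = 2) -> List[str]:
    """Return messages for the features that pulled the score down most."""
    contributions = {f: WEIGHTS[f] * (applicant[f] - REFERENCE[f])
                     for f in WEIGHTS}
    # Keep only features that lowered the score; most negative first.
    negatives = sorted((c, f) for f, c in contributions.items() if c < 0)
    return [MESSAGES[f] for _, f in negatives[:top_n]]
```

For an applicant with 2 accident-free years, 1 recent claim, and 12,000 annual miles, `reason_codes` returns `["Number of recent claims", "Limited accident-free driving history"]`: the raw contributions stay internal, and only the abstracted reasons reach the consumer.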

Justifying AI

Another AI firm plying the explainability waters is Ayasdi. The Menlo Park, California company, which largely spearheaded the field of topological data analysis (TDA), says its unique approach to unsupervised machine learning can provide an advantage for companies looking to justify the predictive models they use to internal auditors and external regulators.

“A lot of this work is very logistical in nature,” says Gurjeet Singh, Ayasdi’s CEO and co-founder. “You have to have software that helps you maintain audit trails, you have to have software that makes sure that the data that’s aggregated, that you’re able to at least discern which output came from this data and why. Where we intersect on this quite heavily is the justifiability of the model you pick.”
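Singh describes audit trails that let you discern which output came from which data. A minimal sketch, assuming one record per prediction: store the model version, a SHA-256 hash of the canonicalized inputs, and the output, so a later reviewer can tie a decision back to exactly the data that produced it. The record layout and the `audit_record` name are hypothetical, not Ayasdi’s software.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Any, Dict


def audit_record(model_version: str, inputs: Dict[str, Any],
                 output: Any) -> Dict[str, Any]:
    """Build one audit-trail entry linking a model output to its inputs."""
    # sort_keys gives a canonical encoding, so key order doesn't change the hash.
    payload = json.dumps(inputs, sort_keys=True).encode("utf-8")
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "input_sha256": hashlib.sha256(payload).hexdigest(),
        "inputs": inputs,
        "output": output,
    }
```

Records like these would typically be appended to write-once storage; the hash lets an auditor confirm that the stored inputs weren’t altered after the decision was made.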

In effect, Ayasdi’s software acts as an independent arbiter of the effectiveness and fairness of a given machine learning model. Even if a model was developed outside of the Ayasdi software environment, customers can use the company’s unsupervised machine learning capabilities to double-check the work and determine whether it’s working as advertised.

“It’s important for this justification to be an independent process,” Singh says. “It helps customers understand what are the specific places where models fall down, and it also in some cases is able to recommend how to go about fixing the models.”

Most production machine learning models are complex beasts composed of numerous smaller models. Ayasdi can essentially peek under the big model’s covers to see whether all the smaller models are working well, says Ayasdi chief marketing officer Jonathan Symonds.

“[An Ayasdi customer] had a global model that was performing, I think they would say at a B+ level,” Symonds says. “But when you looked at it under a microscope, you found that it was comprised of a number of local models, and certain local models were performing at a D- level. We were able to tell them which one of those models are performing at a D- level and why.”
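Symonds’ example, a B+ global model hiding D- local models, comes down to breaking an aggregate metric out by segment. The sketch below does that for segments that are already labeled; Ayasdi reportedly discovers such groupings with topological data analysis, which this sketch does not attempt. The record format, the accuracy metric, and the 0.15 margin are assumptions for illustration.

```python
from collections import defaultdict
from typing import Dict, Hashable, Iterable, List, Tuple


def accuracy_by_segment(
        records: Iterable[Tuple[Hashable, int, int]]
) -> Tuple[float, Dict[Hashable, float]]:
    """records: (segment, predicted, actual). Returns overall and per-segment accuracy."""
    totals: Dict[Hashable, int] = defaultdict(int)
    correct: Dict[Hashable, int] = defaultdict(int)
    for segment, predicted, actual in records:
        totals[segment] += 1
        correct[segment] += int(predicted == actual)
    overall = sum(correct.values()) / sum(totals.values())
    per_segment = {s: correct[s] / totals[s] for s in totals}
    return overall, per_segment


def weak_segments(per_segment: Dict[Hashable, float], overall: float,
                  margin: float = 0.15) -> List[Hashable]:
    """Segments whose accuracy trails the global figure by more than margin."""
    return sorted(s for s, acc in per_segment.items() if acc < overall - margin)
```

With segments “A” and “B” at 90% accuracy and “C” at 50%, the overall figure is about 77%, respectable on its own, yet `weak_segments` flags `["C"]` as the local model dragging things down.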

In some cases, companies may be best served by taking the “student-teacher learning” approach to building production models, Singh says. Instead of putting a big, complex predictive model that’s tough to explain into production, companies should build the big model as a test case, and then implement a simpler version of the model in production. The simpler version is easier to explain but doesn’t sacrifice accuracy, he says.

“It’s a well-known academic area of research, and the entire game in student teacher learning is to create conditions where you can have small children models that are simple and explainable emulating the larger, more complicated models,” he says. “It’s a neat trick in machine learning.”
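Student-teacher learning (often called knowledge distillation) trains the simple model on the complex model’s outputs rather than on the original labels. A minimal sketch, assuming a one-feature regression problem: `teacher_model` is a hypothetical stand-in for a large model, the student is an ordinary least-squares line fit to the teacher’s predictions, and `fidelity_gap` measures how closely the student tracks the teacher. Practical setups usually use richer students, such as shallow trees or small networks.

```python
import math
from typing import Callable, List, Tuple


def teacher_model(x: float) -> float:
    # Hypothetical stand-in for a large, hard-to-explain model.
    return 3.0 * x + 0.5 * math.sin(5.0 * x)


def distill_linear(teacher: Callable[[float], float],
                   xs: List[float]) -> Tuple[float, float]:
    """Fit student y = a + b*x by least squares to the TEACHER's outputs."""
    ys = [teacher(x) for x in xs]  # the teacher supplies the training targets
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    b = sxy / sxx
    a = my - b * mx
    return a, b


def fidelity_gap(teacher: Callable[[float], float], a: float, b: float,
                 xs: List[float]) -> float:
    """Mean absolute disagreement between the student line and the teacher."""
    return sum(abs(teacher(x) - (a + b * x)) for x in xs) / len(xs)
```

On a grid such as `xs = [i / 100 for i in range(1001)]`, the student’s slope comes out close to 3, a coefficient anyone can read, while `fidelity_gap` quantifies the small wiggle the line cannot reproduce. That gap is what a team would weigh against the gain in explainability.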

Ayasdi has no plans to sell a GDPR version of its software. Instead, the capability to double-check the results of other models is simply a feature in its current suite.
