AWS Bolsters Machine Learning Services at re:Invent

Amazon Web Services today unveiled a slew of new machine learning services, including a version of SageMaker that uses reinforcement learning, a specially designed inference chip, and a marketplace of machine learning algorithms.

Andy Jassy, the CEO of AWS, took the stage for a marathon keynote address at the AWS re:Invent conference in Las Vegas, Nevada, this morning to show off the cloud giant’s progress and unveil a long list of new products and services.

A number of the announcements revolved around machine learning, which has grown into a real money-maker for the Seattle, Washington-based company. According to Jassy, tens of thousands of companies are running ML workloads on AWS, including more than 10,000 using SageMaker, the automated machine learning environment unveiled at last year’s re:Invent.

According to Jassy, AWS caters to three types of machine learning users: folks at the bottom of the stack who like to get their hands dirty with frameworks like TensorFlow and MXNet; those in the middle who want a guided approach to using machine learning (i.e., SageMaker); and those who just want to call a pre-built model via an API and get the results.

AWS had a little something for all three types of users in its announcements this week.

Low-Level ML Users

Data scientists who want to build and train their own machine learning models have a number of new capabilities to play around with. It starts with Sunday’s announcement that AWS is providing a new P3DN instance type on EC2.

The P3DN.24xlarge instances offer eight Nvidia Tesla V100 GPUs, 256 GB of GPU memory, and 100 Gbps of network bandwidth. They are “the most powerful instances for machine learning that you’ll find anywhere in the world,” Jassy says.

AWS customers who run TensorFlow average a GPU utilization rate of about 65% during training, which isn’t great. AWS responded by contributing code to the TensorFlow project that optimizes how weights are distributed in neural network models. Jassy says the improvements allow TensorFlow customers to utilize up to 90% of their GPUs.
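To put the 65% and 90% figures in perspective, here is a back-of-the-envelope sketch. It assumes, as a simplification, that a training job needs a fixed amount of useful GPU work and that the GPU-hours you pay for scale inversely with utilization; real jobs also depend on data loading, networking, and other overheads. The 1,000-GPU-hour job is a made-up example.

```python
def gpu_hours_billed(useful_gpu_hours: float, utilization: float) -> float:
    """GPU-hours paid for when only `utilization` of each hour does useful work.

    Simplifying assumption: the job's useful work is fixed, and billed hours
    scale inversely with utilization.
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return useful_gpu_hours / utilization


# Hypothetical job needing 1,000 GPU-hours of useful compute.
before = gpu_hours_billed(1000, 0.65)  # ~1538.5 GPU-hours at 65% utilization
after = gpu_hours_billed(1000, 0.90)   # ~1111.1 GPU-hours at 90% utilization
savings = 1 - after / before           # ~0.278, i.e. roughly 28% fewer GPU-hours
print(f"{before:.1f} -> {after:.1f} GPU-hours ({savings:.1%} fewer)")
```

Under these assumptions, the jump from 65% to 90% utilization means paying for roughly 28% fewer GPU-hours for the same amount of training.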

AWS also made two announcements around inference. While training machine learning models gets all the press, Jassy says inference actually consumes 90% of the resources needed to run machine learning programs in production. And like other compute workloads, inference workloads are subject to the same optimization dynamics.

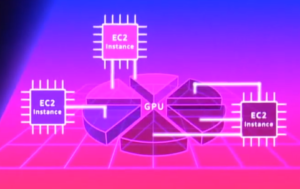

The company launched a new service called Elastic Inference that allows users to access from 1 to 32 teraflops of GPU capacity on demand. “You attach Elastic Inference to an instance much like you would attach an EBS [Elastic Block Store] volume,” Jassy says. “You only need to provision the amount of Elastic Inference that you want.” Jassy says it will cut inference costs by up to 75%.

The company also announced that it has developed its own inference chip, called Inferentia, and that it will make the chip available to customers via EC2.

“Inferentia will be very high throughput, low latency, sustained performance, very cost effective processor for inference,” Jassy says. “It will be available for you on all the EC2 instance types, as well as in SageMaker. And we’ll be able to use it with Elastic Inference.”

Inferentia customers will see a 10x reduction in inference costs, above and beyond the 75% savings from Elastic Inference, he says.
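Taken at face value, the two claims can be combined with a little arithmetic. The sketch below assumes the savings stack multiplicatively (first a 75% cut, then a further 10x reduction on what remains); Jassy’s “above and beyond” suggests stacking, but that interpretation is an assumption, and both figures are best-case “up to” claims. The $100 bill is hypothetical.

```python
def inference_cost(baseline: float,
                   elastic_inference_cut: float = 0.75,
                   inferentia_factor: float = 10.0) -> float:
    """Cost after both claimed savings, ASSUMING they compound multiplicatively."""
    after_elastic = baseline * (1 - elastic_inference_cut)  # "up to 75%" cheaper
    return after_elastic / inferentia_factor                  # a further "10x" cheaper


baseline = 100.0                  # hypothetical inference bill, in dollars
final = inference_cost(baseline)  # 100 -> 25 -> 2.5
print(f"${baseline:.2f} -> ${final:.2f} ({1 - final / baseline:.1%} lower)")
```

If the savings do compound this way, a $100 inference bill would fall to about $2.50, a 97.5% reduction.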

Midrange ML Users

The shortage of data scientists has been well documented, both in this publication and elsewhere. “More and more are being trained in universities, but there just aren’t that many, and they mostly hang out at the big tech companies,” Jassy says. (AWS has squirreled away its share of unicorns, too.)

SageMaker RL brings reinforcement learning algorithms to AWS’ automated machine learning environment.

Jassy says SageMaker can give regular developers and analysts data science superpowers. In the past year it has been adopted by many big organizations, including Cox Automotive, Formula 1 racing, Expedia, MLB, Shutterfly, GoDaddy, and FICO. “The reason that people are so excited about SageMaker is the speed it allows everyday developers to get started and be able to use machine learning,” he says.

The company launched three new offerings today to augment its automated machine learning environment: SageMaker Ground Truth, SageMaker Reinforcement Learning (RL), and SageMaker Marketplace for Machine Learning.

SageMaker Ground Truth is a data labeling service that helps users label the data used to train machine learning models. Customers can choose automated labeling, where a machine learning algorithm assists with the labeling, or they can tap into a pool of human labelers, such as Amazon’s Mechanical Turk crowdsourcing service.
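One common way to combine machine and human labeling is to let a model label the items it is confident about and send everything else to people. The sketch below illustrates that routing step in generic form. It is not Ground Truth’s API: `route_for_labeling`, the toy `toy_predict` model, and the 0.9 confidence threshold are all hypothetical.

```python
from typing import Callable, List, Tuple

Prediction = Tuple[str, float]  # (label, confidence in [0, 1])


def route_for_labeling(
    items: List[str],
    predict: Callable[[str], Prediction],
    threshold: float = 0.9,  # hypothetical cutoff for trusting the model
) -> Tuple[List[Tuple[str, str]], List[str]]:
    """Split items into machine-labeled ones and ones queued for human labelers."""
    auto_labeled: List[Tuple[str, str]] = []
    needs_human: List[str] = []
    for item in items:
        label, confidence = predict(item)
        if confidence >= threshold:
            auto_labeled.append((item, label))  # accept the model's label
        else:
            needs_human.append(item)            # send to a human workforce
    return auto_labeled, needs_human


# Toy stand-in for a trained model: confident only about two images.
def toy_predict(image_id: str) -> Prediction:
    known = {"img1": ("stop_sign", 0.97), "img2": ("pedestrian", 0.95)}
    return known.get(image_id, ("unknown", 0.40))


auto, human = route_for_labeling(["img1", "img2", "img3"], toy_predict)
print(auto)   # [('img1', 'stop_sign'), ('img2', 'pedestrian')]
print(human)  # ['img3']
```

In an active-learning setup, the labels that humans supply can be fed back to retrain the model, so the share of items it can label on its own tends to grow over time.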

The SageMaker Marketplace for Machine Learning, meanwhile, provides an online store where users can browse a catalog of more than 150 algorithms for use in SageMaker. “This is a huge game changer, not just for consumers of ML algorithms, but also for the sellers who want to make money from the things they’ve built,” Jassy says.

Reinforcement learning has emerged as a third option alongside supervised and unsupervised learning algorithms. With SageMaker RL, Amazon provides several RL algorithms, which Jassy says are ideal for situations where there is no one right answer and no cleanly labeled data that can model the right answer.

“If there’s something in the ability to model where there is a right answer (is there a stop sign, or is this a pedestrian?), you need to have labeled training data and supervised learning models,” he says. “But for problems where there is no right answer, or you don’t know the right answer, reinforcement learning is incredibly valuable.”
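The distinction Jassy draws can be made concrete with the simplest textbook reinforcement learning setting, the multi-armed bandit. The agent is never told the right answer. It tries actions, observes noisy rewards, and gradually favors whichever action pays best. The epsilon-greedy sketch below is a generic illustration of learning from reward instead of labels, not one of the algorithms SageMaker RL ships; the payout means, noise level, and parameters are made up.

```python
import random
from typing import List, Tuple


def epsilon_greedy_bandit(
    true_means: List[float],
    steps: int = 5000,
    epsilon: float = 0.1,
    seed: int = 0,
) -> Tuple[List[float], List[int]]:
    """Learn which action pays best purely from noisy rewards (no labeled data)."""
    rng = random.Random(seed)
    n_actions = len(true_means)
    estimates = [0.0] * n_actions  # running average reward per action
    counts = [0] * n_actions       # how often each action was tried
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(n_actions)  # explore: try a random action
        else:
            # exploit: pick the action with the best reward estimate so far
            action = max(range(n_actions), key=lambda a: estimates[a])
        # The environment returns only a reward; the agent never sees true_means.
        reward = rng.gauss(true_means[action], 0.5)
        counts[action] += 1
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates, counts


estimates, counts = epsilon_greedy_bandit([0.2, 0.5, 0.8])
best = max(range(len(estimates)), key=lambda a: estimates[a])
print(best, counts)
```

The small `epsilon` keeps the agent occasionally exploring, so an early unlucky reward cannot lock it onto a poor action forever; the rest of the time it exploits what it has learned.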

The company also unveiled DeepRacer, a 1/18-scale autonomous vehicle that uses RL to navigate a track. AWS will encourage developers to play around with RL by racing the vehicles in a DeepRacer league.

High-Level ML Users

Sometimes, you just want the right answer given to you without all the work that goes into getting it. In the world of machine learning, that translates into shrink-wrapped products and services that can be called by an API, which AWS is more than happy to provide.

AWS already offered a range of shrink-wrapped machine learning services, including a computer vision service called Rekognition, a natural language processing (NLP) service called Comprehend, a language translation service called (fittingly) Translate, and a speech-to-text service called (you guessed it) Transcribe.

Jassy unveiled several more offerings along those lines today, starting with an optical character recognition (OCR) service dubbed Textract. According to Jassy, the new service can intelligently identify and extract data residing in text fields on scanned forms. As healthcare, banking, or tax forms change over the years, Textract automatically adapts to identify where pertinent pieces of information, such as names, addresses, and Social Security numbers, reside.

“Textract doesn’t miss a beat,” Jassy says. “You don’t need to have any machine learning experience to use Textract.”

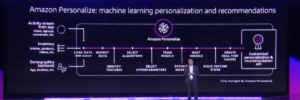

AWS has also built machine learning into its new personalization service, called Personalize. According to Jassy, Personalize is based on the same machine learning models that Amazon has refined over the years to optimize its retail business. The software builds a private model for each client based on historical data, such as past purchases of music or clothing.

“As you’re streaming that [historical] data in, we set up an EMR cluster and we inspect the data,” Jassy says. “We look for the interesting parts of the data that give us some kind of predictors. … Then we’ll select up to six algorithms that we have built ourselves over the years to do personalization in our retail business. And we’ll mix and match. We’ll set up hyperparameters to train … the models. Then we’ll host the model for you.”

The personalization process will look a lot like SageMaker, “except at this layer in the stack, we’re taking care of those for you,” Jassy says. “All you have to do is submit an input, and you get the outputs, which are recommendations.”
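The “mix and match” step Jassy describes resembles a standard automated model-selection loop: train each candidate algorithm under several hyperparameter settings, score each one on held-out data, and keep the winner. The sketch below shows that loop in generic form. It is not how Personalize is actually implemented; the algorithm names, hyperparameter grid, and `toy_score` function are hypothetical stand-ins for real training and evaluation.

```python
from itertools import product
from typing import Callable, Dict, List, Optional, Tuple

Params = Dict[str, float]


def select_model(
    candidates: Dict[str, Dict[str, List[float]]],
    evaluate: Callable[[str, Params], float],
) -> Tuple[str, Params, float]:
    """Grid-search each algorithm's hyperparameters; return the best-scoring combo."""
    best: Optional[Tuple[str, Params, float]] = None
    for algorithm, grid in candidates.items():
        names = sorted(grid)
        for values in product(*(grid[name] for name in names)):
            params = dict(zip(names, values))
            score = evaluate(algorithm, params)  # e.g. accuracy on held-out history
            if best is None or score > best[2]:
                best = (algorithm, params, score)
    if best is None:
        raise ValueError("no candidates to evaluate")
    return best


# Hypothetical candidate algorithms and hyperparameter grids.
candidates = {
    "popularity": {"top_k": [5, 10]},
    "item_similarity": {"neighbors": [10, 50], "decay": [0.5, 0.9]},
}


# Toy scorer standing in for "train, then measure recommendation quality".
def toy_score(algorithm: str, params: Params) -> float:
    base = 0.3 if algorithm == "item_similarity" else 0.2
    return base + 0.1 * params.get("decay", 0.0)


algorithm, params, score = select_model(candidates, toy_score)
print(algorithm, params, round(score, 2))  # item_similarity {'decay': 0.9, 'neighbors': 10} 0.39
```

A managed service would typically replace the exhaustive grid with a smarter search strategy, but the select-by-validation-score idea is the same.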

The same concept is at play in AWS’ new Forecast offering, which is also based on supply chain optimization algorithms that Amazon developed over the years to maximize sales of goods.

“Forecasting is actually pretty difficult,” Jassy says. “It’s not usually one or two data points that impact the forecast. It’s typically lots of these data points. … There are tens, sometimes hundreds of these variables that you need to analyze and it’s pretty complicated to do. Again, our customers say, you do this at scale, you’ve been doing it for a long time. You’ve built a lot of these models. Find a way to make the models available to us.”

Tomorrow’s keynote, which starts at 8:30 a.m. PT, will be delivered by Werner Vogels, the CTO of AWS.
