How Databricks Keeps Data Quality High with Delta

Data lakes have sprung up everywhere as organizations look for ways to store all their data. But the quality of data in those lakes has posed a major barrier to getting a return on data lake investments. Now Databricks is positioning its cloud-based Delta offering as a solution to that data quality problem.

The invention of the data lake remains a critical moment in big data’s history. Instead of processing raw data and then storing the highly structured results in a relational data warehouse that can be queried as needed, distributed systems like Apache Hadoop allowed organizations to store huge amounts of data and add structure (that is, add value) to the data at a later time.

This “schema on read” approach, versus the old “schema on write” technique used in traditional data warehousing, bought organizations additional time to figure out how to organize the petabytes of clickstream, Web logs, images, text files, JSON, video, and sundry other pieces of data exhaust that were suddenly being generated. With the explosion of data, there simply was not enough time to process the data into an optimized form before loading it into a data warehouse for analysis.

But for all the timesaving benefits that data lakes brought, they also introduced new challenges. One of the challenges is simply knowing what one has stored in a big data lake, what’s sometimes known as Hadoop’s “junk drawer” problem, which has spawned a run on data catalogs and data governance efforts.

A corollary of the governance challenge is the problem of poor data quality in data lakes. Ali Ghodsi, the CEO of Databricks, says the data quality issue remains one of the biggest challenges to modern data analytics, whether it’s conducted on premises or in the cloud.

“The issue is, they’re dumping all kinds of data into the data lakes in these IT departments, and they’re hoping that later on they can do machine learning on it,” he says. “But we realize that one of the reasons that many of the projects are failing is the data quality is low.”

Databricks has built a Spark-based cloud analytics service that has attracted more than 2,000 customers over the past few years, propelling the company to a valuation of $2.75 billion, which is almost equal to Cloudera’s market capitalization. But data quality problems can crop up any place the data lake approach is used, regardless of whether the lake’s underlying technology features Hadoop or Spark processing engines.

“It’s not a problem with Spark,” Ghodsi says. “It’s doing its job right. But rather, the stuff they’re adding, they’re not being careful about the data quality issues.”

Data Gremlins

Since the dawn of the computer age, data quality has bedeviled computer scientists and analysts alike. You put garbage into a computer program, you’re going to get garbage out. It’s a tired trope, GIGO is, but it also happens to be true.

One of the common places that data quality gremlins rear their little heads is when organizations amass large data sets over a period of years, Ghodsi says. “Let’s say there’s a table with several columns, and let’s say one of those columns stores the dates of the events that are happening that you’re adding to your data set in your data lake, and it slowly changes in a subtle way,” he says.

“Maybe you change the format of the date field,” he continues. “Let’s say the year used to be four digits, but somebody decided to truncate it to two digits. So 1999 becomes 99. No one notices it.”
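A drift like this can be caught by checking every incoming value against the expected format before it lands in the table. The Python sketch below assumes dates arrive as `YYYY-MM-DD` strings in a column called `event_date`; both the column name and the `find_malformed_dates` helper are hypothetical and are not part of Spark or Delta.

```python
import re
from datetime import datetime

# Assumed format for this sketch: four-digit year, two-digit month and day.
ISO_DATE = re.compile(r"\d{4}-\d{2}-\d{2}")

def is_valid_date(value):
    """True if value has the YYYY-MM-DD shape and names a real calendar date."""
    if not isinstance(value, str) or not ISO_DATE.fullmatch(value):
        return False
    try:
        datetime.strptime(value, "%Y-%m-%d")
    except ValueError:
        return False
    return True

def find_malformed_dates(rows, column="event_date"):
    """Hypothetical helper: return (row_index, value) pairs that fail the check."""
    return [(i, row.get(column)) for i, row in enumerate(rows)
            if not is_valid_date(row.get(column))]

rows = [
    {"event_date": "1999-03-14"},
    {"event_date": "99-03-14"},    # year silently truncated to two digits
    {"event_date": "2001-07-02"},
]
print(find_malformed_dates(rows))  # [(1, '99-03-14')]
```

Run on every batch, a check like this flags the first truncated value instead of letting it accumulate unnoticed for years.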

Nobody sees any problems, until they start doing machine learning on the data, and the machine, in typical GIGO manner, starts spitting out garbage and alerting the organization to anomalies that aren’t actually there.

A failure to properly load big data sets when performing ETL or ELT processes is a related problem. “If they’re adding a big data set, and that fails, and they’re not careful about it, they might end up with half of the data there and the other half not there,” Ghodsi says. “That’s the second thing.”

A third common source of data quality issues is a failure to correctly anticipate data format requirements, he says.

“When using Spark, you have to kind of in advance decide exactly how you want your data to be formatted,” Ghodsi says. “Do you want to store the year first or do you want to store the month first? That affects performance quite a bit. So if you format it in a particular way and then several years later you’re trying to build a project that requires you to use this other way … you might get huge performance issues.”

Delta to the Rescue

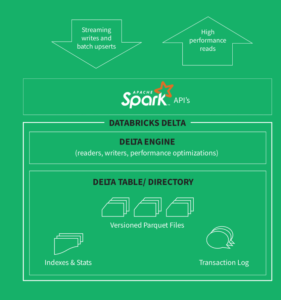

Databricks launched Delta in October 2017 as a hybrid offering that combines the benefits of data lakes, MPP-style data warehouses, and streaming analytics. Now the company is positioning it as a potential solution to the data quality issue.

“What Delta does is it looks at data coming in and it makes sure it has high quality,” Ghodsi tells Datanami in a recent interview. “So if it doesn’t have high quality, it will not let it into Delta. It will put it back into the data lake and quarantine it so you can go and look at it to see if you can fix it.”
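The gatekeeping Ghodsi describes amounts to routing each incoming record either into the curated table or into a quarantine area for later review. The Python sketch below illustrates only that general pattern; the rule names, record fields, and the `split_batch` helper are hypothetical, and the sketch does not reproduce how Delta actually implements its checks.

```python
def split_batch(records, rules):
    """Hypothetical helper: send records that pass every rule to 'accepted';
    send the rest to 'quarantined' with the names of the rules they failed."""
    accepted, quarantined = [], []
    for record in records:
        failed = [name for name, check in rules.items() if not check(record)]
        if failed:
            quarantined.append({"record": record, "failed_rules": failed})
        else:
            accepted.append(record)
    return accepted, quarantined

# Hypothetical quality rules for an events table.
rules = {
    "has_user_id": lambda r: bool(r.get("user_id")),
    "amount_non_negative": lambda r: (isinstance(r.get("amount"), (int, float))
                                      and r["amount"] >= 0),
}

batch = [
    {"user_id": "u1", "amount": 10.0},
    {"user_id": "", "amount": 5.0},
    {"user_id": "u3", "amount": -2},
]
good, held = split_batch(batch, rules)
print(len(good), len(held))  # 1 2
```

Keeping the failed rule names next to each quarantined record makes it easier to see why it was rejected and whether it can be fixed.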

Data lakes stored in Delta provide organizations certain guarantees about data quality, says Ghodsi, who is one of Datanami’s People to Watch for 2019. “Data lakes are awesome. You’re storing all your data with Spark in a data lake,” he says. “But then you can have these more gold quality data sets in what we call a Delta Lake, which is basically Delta looking at the data to make sure it’s high quality.”

In addition to rejecting bad data at the gate, Delta can help address the partial data problem that crops up when ETL and ELT jobs go awry.

“Delta augments Spark with transactions,” Ghodsi says. “So when [you run] your operations in Databricks Delta, you’re guaranteed that everything either completely succeeds or completely fails. You cannot end up with an in-between situation. So if you’re ingesting a petabyte of data and you run into failure, you’re not going to end up with half a petabyte here and then missing [the other half], because that can be very hard to detect years later.”
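One general way to get this all-or-nothing behavior is a commit log: data files can be written at any time, but readers only see files named in a log entry, and that entry is published in a single atomic step. The Python sketch below shows the idea on a local filesystem. The `_log` directory, the JSON entry layout, and the `commit` and `visible_files` helpers are assumptions made for illustration, not Delta's on-disk format, and the sketch ignores concurrent writers (`os.replace` would silently overwrite an existing entry).

```python
import json
import os
import tempfile

def commit(table_dir, version, added_files):
    """Hypothetical helper: publish a table version by atomically writing one
    log entry. Data files already in table_dir stay invisible until a committed
    entry names them, so a job that fails before this call exposes nothing."""
    log_dir = os.path.join(table_dir, "_log")
    os.makedirs(log_dir, exist_ok=True)
    entry_path = os.path.join(log_dir, f"{version:020d}.json")
    # Write the entry to a temporary file first, then rename it into place.
    # os.replace is atomic on POSIX when both paths are on the same filesystem.
    fd, tmp_path = tempfile.mkstemp(dir=log_dir, suffix=".tmp")
    with os.fdopen(fd, "w") as f:
        json.dump({"add": added_files}, f)
    os.replace(tmp_path, entry_path)

def visible_files(table_dir):
    """Hypothetical helper: data files named by committed entries, in version order."""
    log_dir = os.path.join(table_dir, "_log")
    if not os.path.isdir(log_dir):
        return []
    files = []
    for name in sorted(os.listdir(log_dir)):
        if name.endswith(".json"):  # skips in-flight .tmp files
            with open(os.path.join(log_dir, name)) as f:
                files.extend(json.load(f)["add"])
    return files

table = tempfile.mkdtemp()
# Simulate a failed job: a data file was written but never committed.
open(os.path.join(table, "part-0000.parquet"), "wb").close()
print(visible_files(table))  # []
commit(table, 0, ["part-0000.parquet"])
print(visible_files(table))  # ['part-0000.parquet']
```

The orphaned file from a failed run is simply never referenced, which is what keeps a half-finished load from looking like valid data.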

Organizations that fail to detect data loading issues often have to resort to conducting computational “archeology to find the needle in the haystack,” Ghodsi says.

All About That Metadata

Finally, Databricks Delta has one more trick up its sleeve to keep the data quality gremlins at bay: metadata analysis.

When data hasn’t been properly formatted to align with the type of analyses the organization is trying to do, it can cause serious performance issues, Ghodsi says. “It’s just because the way you store data in the data lake was not optimized for how you’re going to use it later,” he says.

Databricks Delta addresses that problem by maintaining a separate lineage of the data. It stores that metadata in a Spark SQL database and performs statistical analysis on it, which helps Databricks Delta users achieve maximum performance later on.
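The article does not say exactly which statistics Delta keeps. One common technique that fits the description is data skipping: record the minimum and maximum value of a column for each data file, then consult those statistics to avoid opening files that cannot contain matching rows. A minimal Python sketch, assuming a single date column stored as `YYYY-MM-DD` strings and a hypothetical `FileStats` record:

```python
from dataclasses import dataclass

@dataclass
class FileStats:
    """Hypothetical per-file statistics record (not a Delta API)."""
    path: str
    min_date: str  # YYYY-MM-DD strings sort in date order
    max_date: str

def files_to_scan(stats, start, end):
    """Keep only files whose [min_date, max_date] range overlaps [start, end]."""
    return [s.path for s in stats if s.max_date >= start and s.min_date <= end]

catalog = [
    FileStats("part-0.parquet", "2018-01-01", "2018-03-31"),
    FileStats("part-1.parquet", "2018-04-01", "2018-06-30"),
    FileStats("part-2.parquet", "2018-07-01", "2018-09-30"),
]
print(files_to_scan(catalog, "2018-05-01", "2018-05-31"))  # ['part-1.parquet']
```

Skipping only pays off when rows are clustered by the filtered column, which is why the data layout choices Ghodsi mentions earlier have such a large effect on performance.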

This metadata tracking functionality also allows Delta users to access their data lake as it stood at previous points in time, Ghodsi says. “It’s nice that the Delta Lake can actually reproduce any data set that you have from the past,” he says.
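Reproducing a past data set follows naturally from keeping a log of which files each change added or removed: replaying the log up to a given version yields the table as it stood then. A minimal Python sketch, assuming an in-memory list of log entries with hypothetical `add` and `remove` keys; it only works as long as the older data files are still present on storage.

```python
def snapshot(log, version):
    """Hypothetical helper: replay entries 0..version and return the live files.

    Each entry is a dict with optional 'add' and 'remove' lists of file paths."""
    if not 0 <= version < len(log):
        raise ValueError(f"version {version} not in log")
    live = set()
    for entry in log[: version + 1]:
        live.difference_update(entry.get("remove", []))
        live.update(entry.get("add", []))
    return live

log = [
    {"add": ["a.parquet", "b.parquet"]},                             # v0: initial load
    {"add": ["c.parquet"]},                                          # v1: append
    {"remove": ["a.parquet", "b.parquet"], "add": ["ab.parquet"]},   # v2: rewrite
]
print(sorted(snapshot(log, 1)))  # ['a.parquet', 'b.parquet', 'c.parquet']
print(sorted(snapshot(log, 2)))  # ['ab.parquet', 'c.parquet']
```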

Databricks Delta stores data in Parquet, which is a column-optimized data format that’s popular on Spark and Hadoop clusters. If the source data lake is also storing data in Parquet, Databricks customers can save a lot of time and hassle in loading that data into Delta, because all that has to be written is the metadata, Ghodsi says.

“You don’t have to write another petabyte data set,” he says. “You don’t need to have two copies of it. The operation is significantly faster because the only data you have to write is metadata, which is always much smaller than the original data lake.”
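Because this conversion writes only metadata, it can be sketched as listing the Parquet files already in a directory and recording them in a new log entry, without copying or rewriting any data. The Python sketch below is illustrative only: the `_log` directory, the entry layout, and `convert_in_place` are hypothetical, and it handles only files at the top level of the directory (no partition subdirectories). Delta Lake itself documents a `CONVERT TO DELTA` SQL command for this task.

```python
import json
import os
import tempfile

def convert_in_place(table_dir):
    """Hypothetical helper: register existing .parquet files as version 0 by
    writing one small metadata entry. Data files are neither copied nor rewritten."""
    data_files = sorted(n for n in os.listdir(table_dir) if n.endswith(".parquet"))
    log_dir = os.path.join(table_dir, "_log")
    os.makedirs(log_dir, exist_ok=False)  # refuse to convert the same table twice
    entry = {"add": [{"path": n, "size": os.path.getsize(os.path.join(table_dir, n))}
                     for n in data_files]}
    with open(os.path.join(log_dir, f"{0:020d}.json"), "w") as f:
        json.dump(entry, f)
    return entry

table = tempfile.mkdtemp()
for name in ("part-0.parquet", "part-1.parquet"):
    with open(os.path.join(table, name), "wb") as f:
        f.write(b"PAR1")  # placeholder bytes, not a real Parquet file
print(convert_in_place(table))
```

The only new bytes written are the small JSON entry, so the cost grows with the number of files rather than with the size of the data.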

Delta has caught on with Databricks’ cloud clients, including computer maker Apple, Ghodsi says. It’s currently only available in the Databricks cloud, but the company is talking with partners about letting it run on premises, he adds. Databricks plans to make Delta a central theme of its upcoming Spark + AI Summit 2019 conference, which is being held in two weeks.
