Data Privacy Is on the Defensive During the Coronavirus Panic

(Redkey USB/Shutterstock)

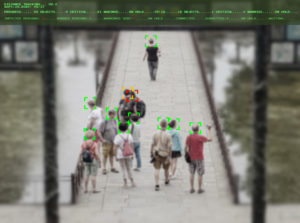

Privacy is on the run in the race to save the world from the ravages of coronavirus. COVID-19 has given surveillance advocates the upper hand in any discussion of AI for the public good. The ongoing pandemic has provided a ready-made justification for using AI-driven solutions for facial fever detection and other continuous, intimate monitoring of the general population.

Encroachments on privacy are deepening at a disturbing pace around the world, but public health concerns are not carte blanche for privacy encroachment.

Many nations, including one-party states such as China and multiparty democracies such as Israel and South Korea, have passed emergency laws under which they’ve implemented surveillance systems for tracking COVID-19. And it’s no surprise that the right-wing Trump administration is exploring how it might gain access to the cellphone location data of all Americans in order to track the spread of the disease.

Data privacy and other ethics concerns may sound namby-pamby in a national emergency, but they form a moral fiber that, once ripped, may never be put right again. If the public has a compelling need to know exactly who we’ve been around, what we’ve worn, and where we’ve been touching ourselves, is that the last word on the topic? Do public health and safety render privacy concerns null and void?

When the pandemic crisis ends, will privacy controls be locked back into place throughout society? Or will surveillance advocates be able to use the endurance of viruses in the population—even when infections and deaths have significantly abated—as a pretext for arguing that intrusive monitoring be made permanent?

Circumscribing the Limits of Privacy Compromise During Emergencies

Though lives are at stake, it’s not crazy to talk about the ethics of using personal data during a pandemic. In fact, it’s absolutely essential if we recognize that society also has a compelling interest in ensuring that our most cherished values remain intact both during the crisis and in its aftermath. Now, more than ever, organizations should incorporate the following safeguards into their AI DevOps pipeline to ensure that they respond effectively to the current COVID-19 crisis without running roughshod over privacy indefinitely:

- Cordoning of emergency scenarios: Data stewards should define clear, time-bounded emergency scenarios in which controls on access, use, and modeling of personally identifiable information in AI and other data-driven applications may be eased.

- Risk profiling of emergency-justified algorithms, models, and apps: Data analysts should make sure that developers consider the risks of relying on specific data algorithms or models—such as facial recognition—whose intended emergency use (such as detecting fever in a contagion-threatened population) could also be vulnerable to abuse in post-emergency scenarios (such as when it’s used to target specific demographics to their disadvantage).

- Accountability tracking of pipeline activities across the emergency/post-emergency boundary: Security analysts should ensure that AI and other data pipeline processes keep immutable audit logs that account for usage of data, models, and other artifacts in emergency scenarios vs. post-emergency periods. They should establish procedures for explaining, in plain language, every pipeline task, work product, and deliverable app in terms of its relevance to emergency vs. post-emergency requirements. And they should implement quality-control checkpoints in the AI pipeline process to verify that no emergency-period remnants, such as privacy-sensitive data, remain to pollute any post-emergency apps in which usage of these artifacts would be prohibited.
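As a rough illustration of the first and third safeguards, the sketch below pairs a time-bounded emergency scenario with an append-only audit log whose entries are chained by SHA-256 hashes. Every name here (`EmergencyScenario`, `AuditLog`, the field names) is hypothetical and chosen for the example. A hash chain only makes tampering detectable; true immutability would also need write-once storage or an external anchor, which this sketch does not attempt.

```python
import hashlib
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class EmergencyScenario:
    """Hypothetical, time-bounded emergency declared by a data steward."""
    name: str
    starts: datetime
    ends: datetime

    def is_active(self, now: datetime) -> bool:
        # Eased controls apply only inside the declared window.
        return self.starts <= now < self.ends


def _digest(body: dict) -> str:
    # Hash a canonical JSON form so the same body always yields the same digest.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


@dataclass
class AuditLog:
    """Append-only log; each entry embeds the hash of the previous entry."""
    entries: list = field(default_factory=list)

    def append(self, actor, artifact, action, scenario, now):
        body = {
            "time": now.isoformat(),
            "actor": actor,
            "artifact": artifact,
            "action": action,
            # Tag each use as emergency or post-emergency at write time.
            "phase": "emergency" if scenario.is_active(now) else "post-emergency",
            "prev": self.entries[-1]["hash"] if self.entries else "0" * 64,
        }
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        # Recompute every hash and check each link to the previous entry.
        prev_hash = "0" * 64
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True
```

A quality-control checkpoint could then filter the log for artifacts whose entries carry the `emergency` phase and confirm that none of them feed a post-emergency app.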

Even if it falls on deaf ears in this administration, it’s good to see that US legislators are thinking along these lines, even in the midst of a crisis that puts their lives in danger just as much as yours or mine. This past month, US Representatives Anna G. Eshoo and Suzan K. DelBene and Senator Ron Wyden sent a letter to the White House in which they urged the administration to prioritize privacy protection, even in the present COVID-19 emergency. They proposed the following controls on data management, use, and security:

- Controls on data management: They proposed the federal government only collect data necessary for responding to the current COVID-19 crisis, as determined by public health experts. This includes limiting collection to aggregated data and trends and anonymizing datasets as far as necessary without unduly compromising intended public health uses.

- Controls on data usage: They proposed that the federal government bar private companies that collect data specific to the COVID-19 crisis from using such data for any other purpose. This includes prohibitions on combining the data with other data or using it to train machine learning algorithms for unrelated uses such as behaviorally targeted advertising. They also called for prohibiting any government agency or employee from disclosing, transferring, or selling information to agencies, companies, other organizations, or individuals, such as those in law enforcement and immigration, who are not directly involved with the public health response to COVID-19.

- Controls on data security: They proposed that data be transferred and stored using the highest cybersecurity protocols. They called for prohibition of attempts to reidentify specific individuals from aggregate or anonymized datasets. And they asked that all government agencies, employees, and contractors delete identifiable data after the pandemic has ended.
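To make the idea of limiting collection to aggregated data more concrete, here is a minimal sketch that reduces individual records to per-region counts and suppresses any count below a threshold. The function names, the `region` and `person_id` fields, and the threshold of 10 are all assumptions made for the example; none of them comes from the legislators’ letter. Small-count suppression and dropping direct identifiers lower re-identification risk but do not amount to full anonymization.

```python
from collections import Counter


def aggregate_counts(records, key="region", min_cell_size=10):
    """Return per-group counts, omitting groups smaller than min_cell_size.

    Small groups are suppressed because a count of two or three people
    in one area can point back to specific individuals.
    """
    counts = Counter(record[key] for record in records)
    return {group: n for group, n in counts.items() if n >= min_cell_size}


def strip_identifiers(record, identifying_fields):
    """Return a copy of the record without the named identifying fields."""
    return {k: v for k, v in record.items() if k not in identifying_fields}
```

Releasing only the output of `aggregate_counts`, and deleting the underlying records once the emergency ends, would be one way to act on the management and security controls listed above.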

Takeaway

We should commend the US legislators’ recent proposal of limits on privacy encroachment even in the face of a global pandemic.

Considering the ad-hoc nature of the collective response, in both the public and private sectors, to the COVID-19 crisis, it’s clear that few enterprise IT and business professionals are prioritizing privacy during this crisis. Nevertheless, they should still be careful not to overreach in their own internal tracking of employees’ exposure, infection, and illnesses due to COVID-19. They should also make sure that they don’t go overboard in providing data on employee, contractor, and customer behaviors that may cross the line into privacy violations without a commensurate contribution to public health.

And they should be careful that useful apps built during this crisis, such as contact tracing, do not persist into the inevitable post-emergency period and pose a needless threat to privacy.

About the author: James Kobielus is an industry veteran who has written extensively about big data, AI, and enterprise software. James previously was associated with Futurum Research, SiliconANGLE Wikibon, IBM, and Forrester Research. Currently, he is an independent tech industry analyst based in Alexandria, Virginia.
