Solar Data Analytics Approaches Warp Speed

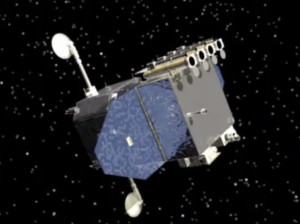

Source: NASA

Data scientists at NASA are employing GPU-powered workstations and local storage to greatly accelerate analysis of images captured by the Solar Dynamics Observatory.

Launched in 2010 to probe our yellow dwarf star and its magnetic field, the solar observatory carries three instruments: the Atmospheric Imaging Assembly, the Extreme Ultraviolet Variability Experiment and the Helioseismic and Magnetic Imager. As of the observatory’s 10th anniversary, NASA said, SDO had captured more than 350 million images of the sun.

Parked in an inclined geosynchronous orbit, SDO is part of NASA’s “Living With a Star” program designed to study the sun as a “magnetic variable star” and how it influences life on Earth. Solar flares can, for example, disrupt critical infrastructure like electrical grids and literally fry electronics.

The challenge for solar data scientists is the sheer volume of imagery—about 20 petabytes and counting. The observatory collects data by recording images of the sun every 1.3 seconds—about as “dynamic” as a space sensor gets. Processing those images requires algorithms to remove errors such as “bad pixels.” Cleaned-up images are then archived.
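The article does not describe the cleaning algorithms NASA uses. As a rough illustration of the idea only, the sketch below flags "bad pixels" with a generic technique: compare each pixel with the median of its 3x3 neighborhood and mark it when the difference exceeds a threshold. The function names, the threshold and the synthetic 6x6 frame are all assumptions made for this example.

```python
import numpy as np


def local_median_3x3(image):
    """Median of each pixel's 3x3 neighborhood (edges padded by reflection)."""
    padded = np.pad(image, 1, mode="reflect")
    rows, cols = image.shape
    # Stack the nine shifted views of the padded image, one per neighborhood slot.
    neighbors = np.stack([
        padded[dy:dy + rows, dx:dx + cols]
        for dy in range(3)
        for dx in range(3)
    ])
    return np.median(neighbors, axis=0)


def flag_bad_pixels(image, threshold):
    """Boolean mask of pixels that differ from their local median by more than threshold."""
    return np.abs(image - local_median_3x3(image)) > threshold


def repair(image, mask):
    """Return a copy of the image with flagged pixels replaced by the local median."""
    cleaned = image.copy()
    cleaned[mask] = local_median_3x3(image)[mask]
    return cleaned


# Synthetic frame (hypothetical values): uniform background with one hot pixel.
frame = np.full((6, 6), 100.0)
frame[2, 3] = 5000.0

mask = flag_bad_pixels(frame, threshold=500.0)
cleaned = repair(frame, mask)
```

A median is used rather than a mean because a single extreme value barely shifts it, so the hot pixel does not cause its neighbors to be flagged as well.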

NASA researchers combing through about 150 million “error” files needed a way to sort and label good and bad pixels. They discovered that even the best multi-threaded CPU algorithms couldn’t cut it. “Even with one year of computation, it would still take us up to 10 years to find concrete results,” said Raphael Attie, a solar astronomer at NASA’s Goddard Space Flight Center in Maryland.

Supercomputers were an obvious solution, but demand at NASA is high and resources are limited. Hence, the SDO analysts turned to GPU-based algorithms and data science workflows based on Python. They also used TensorFlow and other machine learning platforms to accelerate computations by as much as 150 times for tasks like detecting bad pixels in SDO images.

The NASA Goddard researchers’ workflow runs on a Z by HP workstation equipped with a pair of Nvidia Quadro RTX 8000 GPUs, a configuration the partners promote as supercomputing on a desktop. Along with a batch of 2D and 3D visualization tools, the researchers used the RAPIDS data science libraries, including the Python GPU data frame library cuDF, as well as pandas, another data science tool popular with Python developers.
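Because cuDF mirrors much of the pandas API, the same data frame code can often run on either a GPU or a CPU. The sketch below is a hypothetical example of labeling and counting bad pixels in that style, not the researchers' actual workflow; the column names, values and threshold are invented for illustration, and it falls back to pandas when cuDF is not installed.

```python
try:
    import cudf as xdf  # GPU data frames; needs an Nvidia GPU and a RAPIDS install
except ImportError:
    import pandas as xdf  # CPU fallback with the same calls used below

# Hypothetical per-pixel records: which frame a pixel came from and how far
# its value sits from the local background.
records = xdf.DataFrame({
    "frame_id": [0, 0, 0, 1, 1, 2],
    "residual": [12.0, 4900.0, 8.0, 15.0, 5200.0, 3.0],
})

THRESHOLD = 500.0  # assumed cutoff, chosen only for this example

# Label each pixel, then count the bad ones in every frame.
records["is_bad"] = records["residual"] > THRESHOLD
bad_per_frame = records.groupby("frame_id")["is_bad"].sum().sort_index()

print(bad_per_frame)
```

Keeping the import behind a single alias means the analysis code itself does not change when the workload moves between a laptop and a GPU workstation.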

A key requirement for the solar data scientists is the ability to iterate on calculations and get results quickly. Previously, Attie said, it would have taken a decade just to sift through a year’s worth of calculations.

The desktop supercomputer approach also accelerated the solar astronomers’ workflow by enabling local data storage. Cloud-based access to computing resources often resulted in slow response times or none at all.

“A necessary condition for a responsive workflow is to have the input data rapidly accessible by your GPU devices,” said Attie. “If it’s not possible to have the data locally in the same machine as the GPU device, the network needs to be very fast and resilient, as AI applications often need fast access to the data.”

The NASA researchers are expected to have plenty more solar data to crunch as they seek to unlock the mysteries of our solar system. While the solar observatory was designed to last five years, it is now expected to remain operational for perhaps another decade.
