Big Data Apps Wasting Billions in the Cloud

(world-of-vector/Shutterstock)

Many organizations have shifted to a cloud-first mentality for deploying their big data applications. But without expending effort to optimize or tune these cloud apps, customers will waste billions of dollars’ worth of computing resources, according to a new report.

Pepperdata today released a report detailing how efficiently its customers’ cloud clusters were running (or rather, how inefficiently they were running before Pepperdata applied its machine learning-based optimization software to them).

With more than 4.5 million customer apps running on 5,000 nodes storing 400PB of data in the cloud, the company has a unique view of the state of big data cloud performance, and the results are not pretty.

“Our report reveals that, within enterprise workloads that are not optimized by solutions that allow for observability and continuous tuning, there exists enormous waste–and enormous potential to optimize workloads and cut that waste,” the company says in the report, titled “Pepperdata 2020 Big Data Performance Report.”

Take Apache Spark applications, for example. Spark has largely usurped Hadoop as the big data platform of choice, whether on premises or in the cloud. However, while Spark is head and shoulders above Hadoop in multiple ways, it still suffers from performance bugaboos, principally because it is so notoriously difficult to tune.

Among its customers’ Spark applications, Pepperdata found that the median rate of maximum memory utilization was just 42%. In other words, the typical Spark application used less than half of the memory allocated to it, even at its peak, leaving roughly 58% of that allocation idle.
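To make the metric concrete, here is a minimal sketch of how a median peak-memory-utilization figure could be computed, assuming you already have each application's peak memory use and its memory allocation. The `apps` records and the `peak_utilization` helper are hypothetical, and the numbers are invented for illustration; this is not Pepperdata's methodology.

```python
from statistics import median

# Hypothetical per-application records: (peak memory used in GB, memory allocated in GB).
# These numbers are illustrative only, not Pepperdata data.
apps = [
    (3.0, 8.0),
    (10.0, 16.0),
    (2.0, 4.0),
    (6.0, 24.0),
    (14.0, 16.0),
]

def peak_utilization(peak_gb: float, allocated_gb: float) -> float:
    """Fraction of its allocated memory an app actually used at its peak."""
    if allocated_gb <= 0:
        raise ValueError("allocated memory must be positive")
    return peak_gb / allocated_gb

utilizations = [peak_utilization(peak, alloc) for peak, alloc in apps]
median_util = median(utilizations)

print(f"median peak memory utilization: {median_util:.0%}")
print(f"idle share of the allocation at the median: {1 - median_util:.0%}")
```

Using the median rather than the mean keeps a handful of extremely over- or under-provisioned apps from skewing the headline number.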

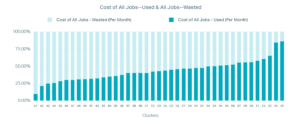

The average wastage of compute resources in large cloud clusters is 60%, according to Pepperdata’s 2020 Big Data Performance Report

The average wastage across 40 large clusters was 60%, the company found. The vast majority of the wastage occurs in just 5% to 10% of the jobs, Pepperdata concluded. That concentration is why application optimization is “inherently such a needle-in-a-haystack challenge,” the company commented.
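Finding those few offending jobs amounts to ranking jobs by waste and checking how much of the total the top slice accounts for. The sketch below is a minimal illustration under stated assumptions, not Pepperdata's method: it assumes hypothetical per-job records of reserved versus used task hours, and the `waste_concentration` function, job names, and numbers are all invented for this example.

```python
import math

# Hypothetical per-job records: (job_id, reserved_task_hours, used_task_hours).
# Illustrative numbers only; not drawn from any real cluster.
jobs = [("etl-nightly", 100.0, 20.0), ("ml-train", 90.0, 30.0)]
jobs += [(f"job-{i}", 10.0, 9.0) for i in range(18)]

def waste_concentration(jobs, top_fraction=0.10):
    """Rank jobs by wasted hours; return the top jobs' IDs and their share of all waste."""
    # Waste is reserved minus used, floored at zero in case usage exceeds the reservation.
    wastes = [(job_id, max(reserved - used, 0.0)) for job_id, reserved, used in jobs]
    wastes.sort(key=lambda item: item[1], reverse=True)
    total = sum(w for _, w in wastes)
    # Always examine at least one job, even for very small job lists.
    k = max(1, math.ceil(len(wastes) * top_fraction))
    top = wastes[:k]
    share = sum(w for _, w in top) / total if total > 0 else 0.0
    return [job_id for job_id, _ in top], share

top_ids, share = waste_concentration(jobs)
print(f"top {len(top_ids)} of {len(jobs)} jobs account for {share:.0%} of wasted hours: {top_ids}")
```

In this made-up data, two of 20 jobs hold most of the waste; in a real cluster the hard part is collecting accurate per-job usage, not the ranking itself.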

As applications spin up in the cloud, there is a lot of money on the line, and a lot of waste to prevent or recoup. According to a Gartner forecast from November 2019, the worldwide public cloud services market will grow 17% in 2020 to total $266.4 billion, up from $227.8 billion in 2019.

Software as a service (SaaS) will constitute the lion’s share of cloud revenue, with Gartner predicting $116 billion in spending for 2020. But infrastructure as a service (IaaS), where companies will run many bespoke Hadoop and Spark apps that previously ran on prem, is predicted to be the fastest growing cloud segment, accounting for $50 billion this year and $74 billion by 2022. (These are all pre-coronavirus figures; cloud spending has been accelerating under COVID-19.)

Cloud wastage in 2019 was estimated at $14 billion, and is expected to go up to $17.6 billion this year, according to Pepperdata’s report. Another analyst estimates that the cost of idle resources alone stands at “$8.8 billion every year,” the company states. Overprovisioning is another problem, with 40% of all cloud instances being oversized, Pepperdata says.

The good news is that all this wastage can be recovered, if you know what to do. While finding the offending apps can be difficult, there are some relatively easy things that companies can do. Google, for example, estimates that businesses can save 10% of their cloud spending within two weeks with just a few tweaks, Pepperdata says.

With a more aggressive approach, customers can optimize their spending even more. Pepperdata estimates that optimization of cloud systems can “win back” about 50% of task hours. “Smaller organizations can net $400,000 in annual savings, and larger organizations can save as much as $7.9 million a year,” the company says.

The explosion of cloud services provides unparalleled access to compute resources, which is a good thing. However, as companies move big data systems to the cloud, or build new applications there, they would do well to think about wastage and take the appropriate steps to ensure that their apps are running efficiently and that they’re not overpaying in the cloud.

“The failure to optimize means companies are leaving a tremendous amount of money on the table—funds that could be reinvested in the business or drop straight to the bottom line,” Joel Stewart, Pepperdata’s vice president of customer success, states in a press release. “Unfortunately, many companies just don’t have the visibility they need to recapture the waste and increase utilization.”
