Systemic Data Errors Still Plague Presidential Polling

(FP-Creative/Shutterstock)

Four years ago, national polling firms almost universally failed to detect the true level of support for Donald Trump in his quest for the White House. This year, the same underlying conditions that made Trump’s electoral college victory such a surprise are still in play, according to data experts. In fact, with the COVID-19 pandemic and racial unrest adding new uncertainty, polling may prove even less predictive than it was in 2016.

All opinion polls are subject to statistical error, or random error, and there’s not much that can be done about it. Increasing the sample size reduces statistical error, but it can never eliminate it completely. The best polling firms are aboveboard about the level of error in their polls, which can swing a result 3 to 6 percentage points either way, and they publish that sampling error alongside the results.
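To make the link between sample size and random error concrete, here is a minimal sketch using the textbook margin-of-error formula for a single proportion under simple random sampling at roughly 95% confidence. The function name and sample sizes are illustrative assumptions; real pollsters also adjust this figure for weighting and survey design.

```python
import math


def margin_of_error(n: int, p: float = 0.5, z: float = 1.96) -> float:
    """Approximate 95% margin of error, in percentage points, for one
    candidate's share p estimated from n respondents (simple random sampling)."""
    return 100 * z * math.sqrt(p * (1 - p) / n)


# Quadrupling the sample size only halves the random error.
for n in (400, 1000, 4000):
    print(f"n = {n:>4}: +/- {margin_of_error(n):.1f} points")
```

This prints roughly 4.9, 3.1, and 1.5 points. The error on the gap between two candidates is roughly twice the per-candidate figure, and none of these numbers captures the systemic errors discussed below.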

But in 2016, pollsters failed to account for sources of error beyond statistical error: so-called systemic errors in the data and in the statistical methods used to analyze it. Steve Bennett, the head of SAS’s global practice and a follower of political polls, says these systemic errors make the 2020 election hard to predict.

“The challenge with those systemic errors is that it’s usually impossible for you to fully estimate how big they are,” he says. “You can’t really know, and that creates challenges.”
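A short simulation shows why Bennett’s distinction matters. In this sketch, a hypothetical `bias` parameter shifts how often sampled respondents report backing a candidate, standing in for an unrepresentative sample or misreporting. Random error fades as the sample grows; the bias does not. The function, seed, and numbers are illustrative assumptions, not a model of any real poll.

```python
import random


def poll_error(n: int, true_share: float = 0.50, bias: float = 0.0, seed: int = 0) -> float:
    """Simulate one poll of n respondents and return (estimate - truth) in points.

    `bias` is a hypothetical systemic error: it shifts the chance that a sampled
    respondent reports supporting the candidate, no matter how large n is.
    """
    rng = random.Random(seed)
    p = true_share + bias
    hits = sum(rng.random() < p for _ in range(n))
    return 100 * (hits / n - true_share)


for n in (500, 5_000, 50_000):
    print(f"n = {n:>6}: unbiased {poll_error(n):+.1f}, biased {poll_error(n, bias=0.03):+.1f}")
```

With a 3-point bias, larger samples converge on the wrong answer rather than the right one, and nothing inside the poll itself reveals how big that bias is.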

One source of systemic error, both in current polling and in the polling done in 2016, is the inability to accurately determine who will actually vote: who will show up on Election Day and, because of COVID-19 (another complicating factor), who will mail in a ballot or drop it off at a polling station.

The statistical models pollsters use to predict turnout are largely based on demographic turnout in prior elections. In 2016, they over-predicted the number of black people who would vote, perhaps reflecting the surge in black turnout for Barack Obama, our first black president, in the previous two elections. They also underestimated turnout among white voters without a college degree, who turned out in lower numbers to back John McCain and Mitt Romney in 2008 and 2012 but in larger numbers to support Trump.
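The sensitivity of a likely-voter model to its turnout assumptions can be sketched with a weighted average. The three groups, their support levels, and both turnout scenarios below are hypothetical, chosen only to show how a turnout model built on past elections can misstate a candidate’s share even when every group’s preferences are measured perfectly.

```python
def projected_share(support, population_share, turnout):
    """Candidate's share of the vote, given each group's support, its share of
    the adult population, and the fraction of that group that turns out."""
    votes = {g: population_share[g] * turnout[g] for g in support}
    total_votes = sum(votes.values())
    return sum(votes[g] * support[g] for g in support) / total_votes


# Hypothetical electorate: per-group support for one candidate
support = {"group_a": 0.80, "group_b": 0.35, "group_c": 0.50}
population_share = {"group_a": 0.20, "group_b": 0.30, "group_c": 0.50}

# Turnout modeled on prior elections vs. a hypothetical actual turnout in which
# group_a turns out less, and group_b more, than the model expected
modeled_turnout = {"group_a": 0.65, "group_b": 0.50, "group_c": 0.60}
actual_turnout = {"group_a": 0.55, "group_b": 0.62, "group_c": 0.60}

print(f"modeled: {projected_share(support, population_share, modeled_turnout):.1%}")
print(f"actual:  {projected_share(support, population_share, actual_turnout):.1%}")
```

The modeled share comes out at 52.8% and the actual at 50.9%, a swing of about two points from turnout assumptions alone.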

Predicting which demographic groups will actually vote is one of the toughest aspects of the pollster’s job (vesperstock/Shutterstock)

The statistical models that pollsters used to determine likely voters did not pan out in 2016, and they will likely again fail to give an accurate prediction of who actually votes in 2020, says Bennett, who in 2017 wrote a story on LinkedIn detailing the shortcomings in 2016 polling, most of which he says still exist.

“It used to be, you could rely on the past three to four elections to be pretty good predictors in terms of turnout and voter models in your current election,” he says. “And that’s what broke in 2016, and I think it’s broke again in 2020.”

Another source of systemic error was the “Shy Trump Voter” effect: the tendency of some respondents to be dishonest with pollsters about their plans to vote for Trump. Bennett says his research indicates that the Shy Trump Voter effect accounted for a 2% to 6% swing in Trump’s favor.

Trump’s opponent, Joe Biden, currently leads by more than that amount in most polls, so the Shy Trump Voter effect may not ultimately matter when the electoral college convenes. “But it’s going to be closer than the polls say it’s going to be because that systemic error, with the Trump voters who are not comfortable telling polling firms, or even their neighbors that they are supporters of Trump,” Bennett says.
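The arithmetic behind a hidden-voter swing can be sketched as follows. This is a hypothetical model, not Bennett’s method: a fraction of one candidate’s supporters either tell pollsters they are undecided or name the opponent. All vote shares and fractions are illustrative.

```python
def measured_margin(true_biden: float, true_trump: float,
                    shy_fraction: float, shy_name_biden: bool = False) -> float:
    """Biden-minus-Trump margin (points) a poll would record if `shy_fraction`
    of Trump voters hide their choice. Hypothetical model for illustration."""
    hidden = true_trump * shy_fraction
    trump = true_trump - hidden
    # Shy voters either claim to be undecided or name the other candidate.
    biden = true_biden + (hidden if shy_name_biden else 0.0)
    return 100 * (biden - trump)


true_margin = measured_margin(0.48, 0.47, 0.0)                     # about +1.0
as_undecided = measured_margin(0.48, 0.47, 0.06)                   # about +3.8
as_biden = measured_margin(0.48, 0.47, 0.06, shy_name_biden=True)  # about +6.6
```

In this toy example, 6% of Trump voters hiding their preference inflates the measured margin by roughly 2.8 to 5.6 points, depending on whether they claim to be undecided or name the other candidate.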

Exit polls, in which people are asked how they voted as they leave polling stations, are usually trusted more than opinion surveys because they don’t have to account for the likelihood that people will vote. However, data shows people still did not admit to voting for Trump in exit polls in 2016.

The “Shy Trump Voter” effect in political polling accounted for a 2% to 6% swing in Trump’s favor, experts say (a katz/Shutterstock)

In the end, the polls are interesting to watch, but the only people for whom the polls really matter are the political campaigns that must make tactical decisions based on voter sentiment. And the campaigns themselves have their own closely guarded polls that are not shared with the public.

So what’s a poll-follower to do? Which polling firms are doing the best job of addressing the systemic flaws in 2020? Bennett says Nate Silver’s FiveThirtyEight is still the gold standard for getting as close to the truth about the presidential race as is reasonably possible.

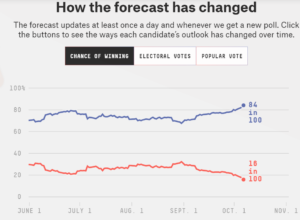

Silver uses a complex approach that aggregates the results of multiple public polls, giving more weight to the polls that have shown the best predictive power in the past. On top of this base, Silver applies his own statistical methodology to try to correct for some of the known sources of error. He also runs tens of thousands of Monte Carlo simulations to gauge possible electoral college outcomes.
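The two ideas in that paragraph, a weighted polling average and Monte Carlo simulation of the electoral college, can be sketched in a few lines. This is not FiveThirtyEight’s model: the poll weights, the four hypothetical states, and the error sizes are all assumptions. The shared national error term is one simple way to represent systemic error that moves every state at once.

```python
import random


def weighted_average(polls):
    """Average poll margins, giving more weight to historically accurate pollsters.
    Each poll is (margin_in_points, weight); the weights here are hypothetical."""
    total_weight = sum(w for _, w in polls)
    return sum(margin * w for margin, w in polls) / total_weight


def win_probability(states, n_sims=20_000, national_sd=3.0, state_sd=4.0, seed=1):
    """Fraction of simulated elections in which the candidate wins a majority
    of electoral votes. `states` maps name -> (electoral_votes, average_margin)."""
    rng = random.Random(seed)
    needed = sum(ev for ev, _ in states.values()) // 2 + 1
    wins = 0
    for _ in range(n_sims):
        shared = rng.gauss(0, national_sd)  # one error applied to every state
        electoral_votes = sum(
            ev for ev, margin in states.values()
            if margin + shared + rng.gauss(0, state_sd) > 0  # plus state-specific noise
        )
        if electoral_votes >= needed:
            wins += 1
    return wins / n_sims


polls = [(7.0, 1.0), (9.0, 0.5), (5.0, 1.5)]  # hypothetical national polls
print(f"weighted national margin: {weighted_average(polls):+.1f}")

# Hypothetical states: electoral votes and polling-average margin
states = {"State A": (29, 2.0), "State B": (20, 5.0), "State C": (16, -1.0), "State D": (10, 8.0)}
print(f"win probability: {win_probability(states):.0%}")
```

Raising `national_sd` makes the simulated states move together more, widening the spread of electoral outcomes; it is a crude stand-in for the correlated errors Bennett describes.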

“That hopefully gets you around some of that systemic bias,” Bennett says. “It didn’t work in 2016. He [Silver] said it was about 2.5 times or 3 times as likely that [Hillary] Clinton would win. But he was by far the closest. Everybody else had her winning by much bigger margin.”
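Statements like “2.5 times or 3 times as likely” are odds, and converting them back to probabilities makes the 2016 miss easier to interpret. A minimal sketch, assuming a two-outcome race (the function name is illustrative):

```python
def probability_from_ratio(ratio: float) -> float:
    """Win probability for a candidate who is `ratio` times as likely to win as
    the only opponent: p / (1 - p) = ratio, so p = ratio / (1 + ratio)."""
    return ratio / (1 + ratio)


for ratio in (2.5, 3.0, 5.25):
    print(f"{ratio} times as likely -> {probability_from_ratio(ratio):.0%}")
```

This prints 71%, 75%, and 84%. By this arithmetic, a forecast making Clinton 2.5 to 3 times as likely to win still gave Trump roughly a 25% to 29% chance, and the October 7, 2020 figure of 5.25 times corresponds to about an 84% chance for Biden.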

Another fan of Silver’s is Joe DosSantos, the chief data officer at Qlik. Everybody seems to want certainty from the data, but statistics deal in probabilities, and Silver has been a staunch advocate of being clear about what data can and can’t tell us.

“If you had only one book about analytics, pick his book, ‘The Signal and the Noise,’” DosSantos says. “It talks about how humans are really bad at understanding how predictions and modeling work, because they want certainty, they want to know yes or no. In fact, what you’re doing is you’re trying to put together an approach that approximates a likelihood, and people are terrible at that.”

As of October 7, 2020, Biden is 5.25 times more likely to win than Trump, according to FiveThirtyEight

Most major polls are still conducted by telephone, but that is becoming a less reliable way to reach a statistically representative sample of the population, DosSantos says. That’s because the people who answer telephone polls are, generally speaking, older than the population as a whole, and tend to lean to the left, he says.

“If you’re 25 years old, you’ve been preconditioned never to answer a phone that you don’t know who’s on the other end. You screen everything,” he says. “Whereas if you’re 70, you just pick up everything. So the very nature of how we execute those polls is not really effective.”
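A common way to compensate for a sample that skews older is post-stratification weighting: each respondent is weighted by how under- or over-represented their group is relative to the population. The age brackets, shares, and support levels below are hypothetical, and this sketch is not any specific firm’s procedure.

```python
# Hypothetical phone sample that over-represents older respondents
sample_share = {"18-34": 0.10, "35-64": 0.45, "65+": 0.45}
population_share = {"18-34": 0.30, "35-64": 0.50, "65+": 0.20}
support = {"18-34": 0.60, "35-64": 0.50, "65+": 0.42}  # share backing one candidate

# Weight = population share / sample share, so each group counts in proportion
# to its size in the population rather than in the sample.
weights = {g: population_share[g] / sample_share[g] for g in sample_share}

raw_estimate = sum(sample_share[g] * support[g] for g in support)
weighted_estimate = sum(sample_share[g] * weights[g] * support[g] for g in support)

print(f"raw: {raw_estimate:.1%}, weighted: {weighted_estimate:.1%}")
```

Here weighting moves the estimate from 47.4% to 51.4%. It only helps if the young people who do pick up resemble those who screen their calls, and the heavy weight on a small group (3x here) makes the estimate noisier.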

Most adults have cell phones at this point, but the challenge is that cell phone numbers, by law, are private and are not listed in phone books, which used to be useful tools for conducting opinion surveys. However, those laws obviously aren’t stopping telemarketers and fraudsters (and the occasional poor soul from “Microsoft Support”) from calling your cell phone day and night. Now some polling firms have begun to incorporate cell phone numbers into their polls to get a better sample, too.

In the future, there may be other techniques for predicting who will win elections, ones that don’t involve asking people (who are prone to lie) how they intend to vote. For example, polling firms could use social media sites to get a better sense of how people will vote.

“We will actually examine people’s behavior. We’ll look at their Facebook feeds. We’ll look at their posts. And based on activity that you do and the words that you speak, they’re generally speaking going to be better predictors of how you really feel,” DosSantos says. “To me this is the future of polling as opposed to trying to ask you a question, right here on the spot, who do you support? How do you feel?”

Betting markets can be another way to get around systemic data problems and millennials who don’t answer their phones. SAS’s Bennett tracks the odds in the presidential race at Betfair, a UK-based betting firm. The idea is that people who put money on the table are more likely to incorporate all relevant sources of knowledge into their decisions. However, even the betting sites were wrong about Trump’s victory in 2016.
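Betting prices can be read as probabilities. A minimal sketch, assuming prices are quoted as decimal odds (the total return per unit staked on a win): each price’s reciprocal is an implied probability, and because those reciprocals usually sum to slightly more than 1 (the overround, or bookmaker margin), they are normalized. The candidate labels and prices are hypothetical, not actual Betfair quotes.

```python
def implied_probabilities(decimal_odds: dict[str, float]) -> tuple[dict[str, float], float]:
    """Convert decimal odds to win probabilities that sum to 1.

    Returns the normalized probabilities and the overround (sum of raw 1/odds)."""
    raw = {name: 1 / odds for name, odds in decimal_odds.items()}
    overround = sum(raw.values())
    return {name: p / overround for name, p in raw.items()}, overround


probs, overround = implied_probabilities({"Candidate A": 1.5, "Candidate B": 2.9})
print(f"overround: {overround:.3f}")
for name, p in probs.items():
    print(f"{name}: {p:.0%}")
```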

We won’t know the extent of systemic errors in polling data until after the 2020 election (Maisei-Raman/Shutterstock)

At the end of the day, there are no foolproof ways around the systemic problems inherent in this election. If Trump were simply running for re-election without the COVID-19 pandemic, racial strife, and a large number of mail-in ballots, then historical models could have been useful for predicting voter turnout in 2020, Bennett says.

“It’s the old garbage in, garbage out,” Bennett says. “The quality and complexity of those analytics tools, even though they’re so much better every four years, if the quality of the data is poor or uncertain, you can only go so far with those analytic tools to try and get around that.”

There’s a lot that can be done. But if you don’t know where the errors in the data are, then you don’t know how to correct for them, Bennett says. “That’s why they go out and suit up and play the game.”
