Lowering Precision Does Not Mean Lower Accuracy


It is important to understand the difference between accuracy and precision in the context of neural networks. It’s a tricky concept because, at face value, the words are very similar. Most people would assume that precision and accuracy are at least correlated. But they are not.

Neural networks are useful only if they can provide correct answers in most “real-world” situations. However, it is difficult to predict what their results will be in these situations. So, to get some idea of the quality of a neural network, accuracy is used as a theoretical quality metric based on a known set of input data. Ideally, that data is very similar to what the network will be used for in the field.
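As a minimal sketch of what that metric means in practice, accuracy can be computed as the fraction of known inputs for which the network gives the expected answer. The function name and the tiny labelled data set below are hypothetical and only serve as an illustration.

```python
def accuracy(predictions, labels):
    """Fraction of predictions that match the known correct labels."""
    if not labels or len(predictions) != len(labels):
        raise ValueError("need two non-empty sequences of equal length")
    correct = sum(1 for p, y in zip(predictions, labels) if p == y)
    return correct / len(labels)


# Hypothetical labelled test set and network outputs, for illustration only.
labels = ["car", "truck", "car", "pedestrian"]
predictions = ["car", "car", "car", "pedestrian"]

print(f"accuracy = {accuracy(predictions, labels):.0%}")
```

Note that nothing in this measurement refers to how many bits the network used to compute its answers; it only compares results with expected results.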

It is important to remember that neural networks are never 100% accurate, even using “practice” input data, and will be even less so in the wild. For example, many know the story of the Tesla that drove straight into an overturned truck due to an inaccurate neural network that probably used high-precision computation. Neural network results can be affected by even the smallest input changes, changes so small that you normally wouldn’t bat an eye. Accuracy gives you some idea of the quality based on specific inputs, but small accuracy variations don’t always mean better or worse quality in the real world.

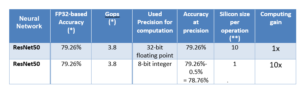

Computing a neural network basically means performing millions or billions of simple additions and multiplications. The precision of the network is the precision of those operations. Higher-precision networks compute with floating-point numbers, which approximate real numbers, while lower-precision networks compute using only integers. The chips used to compute the neural network can be much smaller when using less precise numbers, with each operation taking 10 to 30x less silicon, resulting in lower hardware costs. Neural networks that cost less to run are usually more easily deployed for real-world AI applications.

You’re probably asking yourself, “Isn’t it dangerous for my neural network to be more approximate and less precise?” That’s a great question that leads me to my next point.

Accuracy ≠ Precision

Although it seems to go against common sense, reducing precision will not reduce the accuracy of a neural network, and in some cases, it may actually improve the accuracy.

As I mentioned, floating-point numbers can be highly precise, even with fewer than 32 bits. When you use integers, there are no fractional parts; it’s all whole numbers, and as such, the precision of the computation is generally lower. For example, while 2.5×3.5 = 8.75, rounding down leads to 2×3 = 6 and rounding up leads to 3×4 = 12. These are large differences. And there’s a stigma around this. Many believe that because each result is less precise, the overall result after millions of computations would be much less precise and therefore less accurate. Common sense, right?

This is not the case. Quantization is the process of reducing the number of bits that represent a number in the context of neural network computation. The most advanced quantization methods can map a set of floating-point operations onto lower-precision integer operations while retaining comparable accuracy. An accuracy difference smaller than 1% is fairly common, and smaller than 0.1% is possible in many cases. In real-world applications, these differences are comparable to the slight noise in the inputs such neural networks receive.
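To make the contrast concrete, here is a minimal sketch comparing naive rounding with a simple form of quantization on a small multiply-accumulate. It assumes symmetric linear quantization with one scale factor per vector, which is only one of many possible schemes and not necessarily what any particular tool uses. The helper names and the sample values are hypothetical.

```python
import math


def quantize(values, num_bits=8):
    """Symmetric linear quantization: floats -> signed integers plus one scale."""
    qmax = 2 ** (num_bits - 1) - 1                      # 127 for 8 bits
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return q, scale


def int_dot(qa, scale_a, qb, scale_b):
    """Multiply-accumulate using integers only, then rescale once at the end."""
    acc = sum(x * y for x, y in zip(qa, qb))            # integer arithmetic
    return acc * scale_a * scale_b


# Hypothetical weights and inputs, chosen to include the 2.5 x 3.5 example.
weights = [2.5, 1.5, 0.5]
inputs = [3.5, 2.5, 1.5]

exact = sum(w * x for w, x in zip(weights, inputs))
naive = sum(math.floor(w) * math.floor(x) for w, x in zip(weights, inputs))

qw, sw = quantize(weights)
qx, sx = quantize(inputs)
approx = int_dot(qw, sw, qx, sx)

print(f"exact float result : {exact}")
print(f"naive rounding down: {naive}")
print(f"8-bit quantized    : {approx:.4f}")
```

The naive version throws away the fractional parts, while the quantized version first stretches the values over the full 8-bit integer range, so far less information is lost before the integer multiplications happen.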

So, what does this look like for a common neural network?

(*) Source: https://arxiv.org/pdf/1512.03385.pdf

(**) relative size, actual ratio varies with technology, 10x is assumed conservative

A potential computing gain of 10x in exchange for a small loss of accuracy is something to consider for many cases before rejecting integer computation.

Be More Accurate with Less Precision

Although a slight accuracy loss is acceptable in many applications, some require the highest accuracy possible. Vehicle accident avoidance and gun detection are good examples. However, they can be limited by the computation performance at high precision. Requiring high precision to retain the accuracy means reduced performance or increased price, leading to an inability to deploy such important systems.

So, is it possible to increase accuracy while using lower precision? Some networks increase their accuracy with the number of layers and operations. For example, ResNet is more accurate using 101 layers than 50. Using less precision for a deeper ResNet looks like:

The answer is yes! Accuracy is actually higher, and the computing gain is still high. Of course, this requires more computations, but since each one is lighter, the overall computation is smaller.
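The arithmetic behind that claim can be sketched as follows. The operation counts are the per-image multiply-add figures reported in the ResNet paper (arXiv:1512.03385), and the 10x factor is the conservative per-operation gain assumed in this article; the cost units are arbitrary, and real gains depend on the hardware.

```python
# Multiply-add counts per image as reported in the ResNet paper
# (arXiv:1512.03385, Table 1).
RESNET50_OPS = 3.8e9
RESNET101_OPS = 7.6e9

# Assumption from this article: one integer operation costs about 10x less
# than one floating-point operation. Units are arbitrary.
FLOAT_COST_PER_OP = 1.0
INT_COST_PER_OP = FLOAT_COST_PER_OP / 10.0

float_resnet50_cost = RESNET50_OPS * FLOAT_COST_PER_OP
int_resnet101_cost = RESNET101_OPS * INT_COST_PER_OP
gain = float_resnet50_cost / int_resnet101_cost

print(f"ResNet-50, floating point: {float_resnet50_cost:.2e} cost units")
print(f"ResNet-101, integer      : {int_resnet101_cost:.2e} cost units")
print(f"remaining gain           : {gain:.1f}x")
```

Under these assumptions, the deeper network needs twice as many operations, yet it still costs less overall than the shallower floating-point one.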

So, forget common sense: less precision can result in greater accuracy!

However, there is a limitation to this approach; reducing precision too far will not work. While it is common to see 8-bit integers, going any lower can cause a sharp and rapid loss in accuracy, with each bit removed from the computation having more impact than the previous one. The result is poor quality that quantization is unlikely to resolve. Precision-aware training (commonly known as quantization-aware training) can help, but it requires a significant amount of effort. It is all a matter of balance.
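A rough way to see why the lowest bit widths hurt so much is to measure the rounding error itself as bits are removed. The sketch below assumes the same simple symmetric linear quantization as a stand-in for real tools, and uses a synthetic signal in place of real weights. It measures raw numerical error only, not the accuracy of an actual network.

```python
import math


def quantize_dequantize(values, num_bits):
    """Round values to a signed num_bits integer grid and map them back to floats."""
    qmax = 2 ** (num_bits - 1) - 1
    scale = max(abs(v) for v in values) / qmax
    return [max(-qmax, min(qmax, round(v / scale))) * scale for v in values]


def mean_abs_error(values, num_bits):
    """Average distance between the original values and their quantized versions."""
    approx = quantize_dequantize(values, num_bits)
    return sum(abs(a - v) for a, v in zip(approx, values)) / len(values)


# Synthetic stand-in for network weights (hypothetical data).
values = [math.sin(0.1 * i) for i in range(1000)]

errors = {bits: mean_abs_error(values, bits) for bits in (8, 6, 4, 2)}
for bits, err in errors.items():
    print(f"{bits}-bit integers: mean absolute error = {err:.5f}")
```

Each bit removed roughly doubles the spacing between representable values, so the absolute error added by dropping a bit keeps growing as the bit width shrinks.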

What Does This Mean When Designing AI-based Solutions?

The potential benefit of using integers is obvious: integer computing is more compact, leading to less silicon and therefore lower costs. The accuracy of the neural network does not have to suffer due to the reduced precision. Using integers comes with other advantages as well, including lower power consumption and reduced cooling requirements. And as many know, while GPUs are good at floating point operations, using integers opens the door to more robust chips like FPGAs.

When designing a system, the balance between precision and accuracy must be reviewed carefully in light of the cost. It’s important to understand your goals when deploying neural network-based applications in order to ensure that you’re making the right decisions. The earlier you know how the neural network will be used in the real world, the more you can take into account the constraints and their impact on the cost, quality or performance when deploying it.

Remember that precision is not what matters; what matters is the overall quality and the tools around the accelerator to maintain the accuracy at the right cost.

About the author: Ludovic Larzul is the founder and CEO of Mipsology, a software company focused on using FPGAs for deep learning inference.
