Google’s New Switch Transformer Model Achieves 1.6 Trillion Parameters, Efficiency Gains

(achinthamb/Shutterstock)

Last year, OpenAI wowed the world with its eerily human language generator, GPT-3. The autoregressive model stood at a then-staggering 175 billion parameters, ten times more than any previous non-sparse language model. Now, Google is raising the bar with a 1.6-trillion-parameter model, nearly ten times the size of GPT-3, while also delivering major efficiency improvements over previous, hardware-intensive approaches.

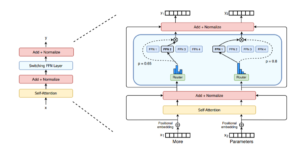

The model is built on a mixture-of-experts (MoE) approach, which uses a variety of specialized “expert” models as constituent parts of the overall architecture, allowing for what the paper's authors call “outrageous numbers of parameters.” “However,” they write, “despite several notable successes of MoE, widespread adoption has been hindered by complexity, communication costs and training instability.”
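To make the mixture-of-experts idea concrete, here is a minimal pure-Python sketch of a single MoE layer with top-k gating. It is an illustration under simplifying assumptions, not the paper's implementation. Each "expert" is a single random linear map standing in for a feed-forward block, and the names `MoELayer`, `softmax`, and `matvec` are hypothetical. A router scores every expert, only the `k` best-scoring experts run, and their outputs are weighted by the router's probabilities. With `k=1`, each input goes to exactly one expert, which is the routing simplification Switch Transformers adopt.

```python
import math
import random


def softmax(logits):
    # Subtract the max for numerical stability before exponentiating.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(W, x):
    # W is a list of rows (out_dim x in_dim); returns W @ x.
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


class MoELayer:
    """Toy mixture-of-experts layer with top-k gating (hypothetical sketch)."""

    def __init__(self, d_model, num_experts, k=1, seed=0):
        rng = random.Random(seed)

        def rand_matrix(rows, cols):
            return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]

        self.k = k
        # Router: produces one logit per expert from the input vector.
        self.router = rand_matrix(num_experts, d_model)
        # Each expert is a single linear map standing in for a feed-forward block.
        self.experts = [rand_matrix(d_model, d_model) for _ in range(num_experts)]

    def forward(self, x):
        probs = softmax(matvec(self.router, x))
        # Only the k highest-probability experts are evaluated for this input.
        top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[: self.k]
        out = [0.0] * len(x)
        for i in top:
            y = matvec(self.experts[i], x)
            # Scale each selected expert's output by its gate probability.
            out = [o + probs[i] * yi for o, yi in zip(out, y)]
        return out, top


layer = MoELayer(d_model=4, num_experts=8, k=1)
out, chosen = layer.forward([1.0, -0.5, 0.25, 2.0])
print("expert used:", chosen, "output:", out)
```

Adding experts grows the parameter count, yet each input still pays for only `k` expert evaluations plus the small router.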

Enter the Switch Transformers: “scalable and effective natural language learners” that let the researchers increase the parameter count while keeping the floating point operations (FLOPs) per example constant. They do this by sending each token to a single expert, so only a small slice of the network's weights is active for any given input. “Our hypothesis,” they say, “is that the parameter count, independent of total computation performed, is a separately important axis on which to scale.”
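Back-of-the-envelope arithmetic shows how parameters can grow while per-example FLOPs stay nearly flat. The sketch below counts weights and per-token FLOPs for one feed-forward layer whose single block is replaced by `num_experts` copies behind a top-1 router. It assumes two bias-free weight matrices per expert and 2 FLOPs per multiply-add, and it ignores attention, expert capacity limits, and communication costs. The function names and the layer sizes used in the loop are hypothetical and chosen only for illustration.

```python
def ffn_params(d_model, d_ff):
    # Two bias-free weight matrices: d_model x d_ff and d_ff x d_model.
    return 2 * d_model * d_ff


def ffn_flops_per_token(d_model, d_ff):
    # Each weight takes part in one multiply-add (2 FLOPs) per token.
    return 2 * ffn_params(d_model, d_ff)


def switch_layer_cost(d_model, d_ff, num_experts):
    """Return (parameters, FLOPs per token) for a top-1 routed expert layer."""
    router_params = d_model * num_experts
    params = num_experts * ffn_params(d_model, d_ff) + router_params
    # Each token visits exactly one expert; only the router cost grows with num_experts.
    flops = ffn_flops_per_token(d_model, d_ff) + 2 * router_params
    return params, flops


for n in (1, 8, 64, 128):
    params, flops = switch_layer_cost(d_model=768, d_ff=3072, num_experts=n)
    print(f"{n:>4} experts: {params:>12,} params, {flops:>10,} FLOPs/token")
```

Under these assumptions, going from 1 to 128 experts multiplies the layer's parameters by about 128, while per-token FLOPs rise by only about 2%, the cost of the router projection.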

The Googlers built the Switch Transformers on top of Google's own T5 models (introduced in 2019), powered them with 32 of Google's in-house Tensor Processing Units (TPUs), equipped them with up to 2,048 “experts,” and set them to work on the Colossal Clean Crawled Corpus. The corpus (“C4”) is a nearly terabyte-scale dataset of text crawled from the web, and it is widely used to pre-train natural language processing (NLP) models. The researchers masked 15% of the words in the C4 dataset and tasked the Switch Transformers with filling in the blanks. They also pre-trained a multilingual variant on text in 101 languages and asked the models to answer trivia questions.
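The fill-in-the-blank setup can be sketched in a few lines. This is a simplified, hypothetical version and not the researchers' pipeline. It drops 15% of token positions chosen uniformly at random and replaces each run of consecutive dropped tokens with a single sentinel, written in the style of T5's `<extra_id_N>` markers. T5's actual span-corruption objective samples contiguous spans with a target average length and operates on subword tokens rather than whitespace-split words. The function name `mask_tokens` is illustrative.

```python
import random


def mask_tokens(tokens, rate=0.15, seed=0):
    """Drop about `rate` of the tokens and return (inputs, targets).

    Simplified sketch: positions are sampled uniformly at random rather than
    as contiguous spans, and sentinel names mimic T5's <extra_id_N> style.
    """
    rng = random.Random(seed)
    n_mask = min(len(tokens), max(1, round(rate * len(tokens))))
    masked = set(rng.sample(range(len(tokens)), n_mask))

    inputs, targets = [], []
    sentinel_id = 0
    prev_masked = False
    for i, tok in enumerate(tokens):
        if i in masked:
            if not prev_masked:
                # A new run of dropped tokens gets one sentinel in both sequences.
                sentinel = f"<extra_id_{sentinel_id}>"
                sentinel_id += 1
                inputs.append(sentinel)
                targets.append(sentinel)
            # The model must predict this dropped token after its sentinel.
            targets.append(tok)
            prev_masked = True
        else:
            inputs.append(tok)
            prev_masked = False
    # A closing sentinel marks the end of the target sequence.
    targets.append(f"<extra_id_{sentinel_id}>")
    return inputs, targets


words = "the quick brown fox jumps over the lazy dog".split()
inputs, targets = mask_tokens(words)
print(" ".join(inputs))
print(" ".join(targets))
```

The model sees `inputs` and is trained to produce `targets`, so it learns to reconstruct only the missing pieces rather than the whole sentence.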

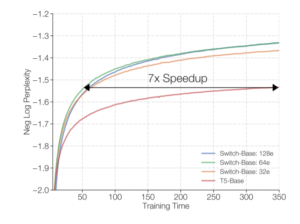

Speed gains delivered by the Switch Transformer models relative to the baseline T5 model. Image courtesy of the researchers.

The Switch Transformers performed well. The new models achieved up to a seven-fold pretraining speedup using the same computational resources and exhibited “no training instability.” The researchers note that while these experiments focused on “extremely large models,” models with as few as two experts also benefit from the new approach.

“We find that these models excel across a diverse set of natural language tasks and in different training regimes, including pre-training, fine-tuning and multi-task training,” the authors conclude. “These advances make it possible to train models with hundreds of billion to trillion parameters and which achieve substantial speedups[.] … We hope our work motivates sparse models as an effective architecture and that this encourages researchers and practitioners to consider these flexible models in natural language tasks, and beyond.”

So: why hasn’t it been done before? “The motivation to try sparse models has been stymied by the massive success of scaling dense models,” they explain, “the success of which is partially driven by co-adaptation with deep learning hardware[.]”

Now, the researchers are setting out to improve the model – specifically, through increased training stability for the largest models and better understanding of the scaling relationships at play.

To learn more, read the paper, which was written by William Fedus, Barret Zoph, and Noam Shazeer (all of Google Brain) and is available on arXiv.
