Google’s ‘MUM’ Search AI Aims to Move Beyond Simple Answers

Google Search’s answers may seem sophisticated compared with those of a few years ago, but to hear the search giant tell it, this is just the beginning, and there’s a long way to go. Now Google is introducing a new model, its Multitask Unified Model (MUM), to help answer more complex, nuanced questions without requiring multiple searches.

Pandu Nayak, Google’s vice president for Search, illustrated this “simple” versus “complex” divide with an example: imagine, he said, that you want to hike Mt. Fuji and have already hiked Mt. Adams. In its current state, Google Search could help you, but only if you asked it a series of separate questions covering temperatures, elevation, trail difficulty, gear and more. A human hiking expert familiar with the area, by contrast, could offer all of this information in response to a single question.

In true Google fashion, the company has even quantified the cost of this inefficiency: people researching complex questions issue an average of seven extra queries.

MUM is meant to close that gap. Like BERT, Google’s existing Transformer-based search model, MUM is built on the Transformer architecture, but Google says it is “1,000 times more powerful.” Unlike BERT, MUM can also generate language, and it is multimodal. For now, that just means it can process information in images as well as in text, but someday it could extend to video and audio as well.

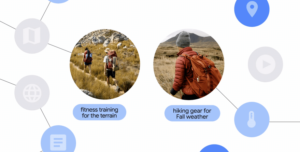

“Take the question about hiking Mt. Fuji: MUM could understand you’re comparing two mountains, so elevation and trail information may be relevant,” Nayak explained. “It could also understand that, in the context of hiking, to ‘prepare’ could include things like fitness training as well as finding the right gear. [It] could highlight that while both mountains are roughly the same elevation, fall is the rainy season on Mt. Fuji so you might need a waterproof jacket.” The list goes on.

MUM’s language capabilities also help it surface information that would otherwise be hard to reach. Because Mt. Fuji is in Japan, for instance, much of the most helpful information online may be written in Japanese, which would stymie many tourists. MUM, however, can cross language barriers, delivering relevant results from those sources in translated form.

Google is, of course, aware of the privacy and bias concerns that grow as AI models become more capable. (Shortly after its annual I/O conference, where MUM was announced, many users began seeing notifications across a variety of Google services reminding them of their control over their privacy settings.) To that end, Nayak says Google has a thorough process in place to ensure that MUM is ready for prime time.

“Every improvement to Google Search undergoes a rigorous evaluation process to ensure we’re providing more relevant, helpful results,” Nayak said. “Human raters, who follow our Search Quality Rater Guidelines, help us understand how well our results help people find information. Just as we’ve carefully tested the many applications of BERT launched since 2019, MUM will undergo the same process as we apply these models in Search. Specifically, we’ll look for patterns that may indicate bias in machine learning to avoid introducing bias into our systems.”