NASA Uses AI to Improve Its Solar Imaging

For the last 11 years, NASA’s Solar Dynamics Observatory (SDO) spacecraft has orbited Earth, using a suite of instruments to observe the Sun in exacting detail. This extended mission has taken a heavy toll on the spacecraft, and NASA is now using AI to correct its images for that damage.

The SDO has already more than doubled its originally planned five-year mission. Over time, the extraordinarily intense sunlight has degraded the lenses and sensors on the spacecraft’s imaging instruments. One of those instruments, the Atmospheric Imaging Assembly (AIA), captures ultraviolet images of the Sun every 12 seconds.

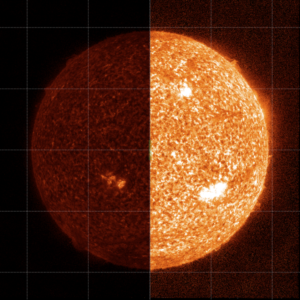

The gradual sun damage inflicted on the AIA necessitates regular calibration, which is done using sounding rockets that fly high into the atmosphere and measure the same UV wavelengths as the AIA. Those measurements, once sent back to researchers, can be compared to the AIA measurements and used to establish a correction factor for the AIA data.

AIA imaging of the Sun before (left) and after (right) correction from a sounding rocket calibration. Image courtesy of NASA.
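To make the arithmetic concrete, here is a minimal Python sketch of a correction factor under a deliberately simplified assumption: that degradation dims every pixel in a given UV channel by the same multiplicative amount. The function names and numbers are hypothetical and purely illustrative; they are not taken from NASA's calibration pipeline.

```python
def correction_factor(rocket_value: float, aia_value: float) -> float:
    # Hypothetical helper: both inputs are assumed to be the same quantity
    # (e.g. total flux in one UV channel) measured at the same time by the
    # sounding rocket (reference) and by the degraded AIA instrument.
    if aia_value <= 0:
        raise ValueError("AIA measurement must be positive")
    return rocket_value / aia_value


def apply_correction(pixels: list[float], factor: float) -> list[float]:
    # Simplified multiplicative model: scale every pixel in the channel
    # by the same factor to undo the dimming.
    return [p * factor for p in pixels]


# Illustrative numbers only, not real AIA or rocket data.
factor = correction_factor(rocket_value=100.0, aia_value=80.0)
corrected = apply_correction([8.0, 16.0, 40.0], factor)
print(factor)     # 1.25
print(corrected)  # [10.0, 20.0, 50.0]
```

Here the AIA reads 20% low relative to the rocket, so every pixel in that channel is scaled up by 1.25.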

Of course, NASA isn’t launching sounding rockets every day, so virtual calibration is needed between rocket flights. For that, NASA has turned to machine learning. The team trained a machine learning model on labeled data from rocket calibration flights and the AIA, allowing the algorithm to learn how much calibration a given reading requires. According to the researchers, the virtual calibration worked as intended and produced accurate corrections.
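The researchers' model is considerably more sophisticated than anything that fits here, but the core idea (learn a correction from labeled pairs of AIA readings and rocket reference values, then apply it when no rocket is available) can be sketched with one-parameter least squares. This stand-in technique, the function names, and the training numbers are all assumptions for illustration; none of them come from the paper.

```python
def fit_scale(aia_readings: list[float], rocket_readings: list[float]) -> float:
    # Least-squares estimate of k in the model rocket ~ k * aia (no intercept):
    # minimizing sum((r - k*a)^2) gives k = sum(a*r) / sum(a*a).
    if not aia_readings or len(aia_readings) != len(rocket_readings):
        raise ValueError("need equal-length, non-empty training data")
    num = sum(a * r for a, r in zip(aia_readings, rocket_readings))
    den = sum(a * a for a in aia_readings)
    if den == 0:
        raise ValueError("AIA readings must not all be zero")
    return num / den


def virtual_calibrate(aia_reading: float, k: float) -> float:
    # Apply the learned correction to a new reading taken between flights.
    return k * aia_reading


# Hypothetical labeled pairs, one per imagined rocket flight (not real data).
k = fit_scale([80.0, 40.0, 120.0], [100.0, 50.0, 150.0])
print(k)                           # 1.25
print(virtual_calibrate(60.0, k))  # 75.0
```

A single global factor ignores the fact that degradation differs between wavelength channels and worsens over time; the real system has to learn far richer relationships than this.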

The ability to infer these corrections is crucial for other applications as well. “It’s also important for deep space missions, which won’t have the option of sounding rocket calibration,” said Luiz Dos Santos, a solar physicist at NASA’s Goddard Space Flight Center and lead author on the paper, in an interview with NASA’s Susannah Darling. “We’re tackling two problems at once.”

The algorithm is also being used to strengthen the AIA’s observations more generally: the researchers used machine learning to teach the algorithm what solar flares look like across multiple wavelengths. “This was the big thing,” Dos Santos said. “Instead of just identifying it on the same wavelength, we’re identifying structures across the wavelengths.”

About the research

The research discussed in this article was published as “Multichannel autocalibration for the Atmospheric Imaging Assembly using machine learning” in the December 2020 issue of Astronomy & Astrophysics. It was written by Luiz F. G. Dos Santos, Souvik Bose, Valentina Salvatelli, Brad Neuberg, Mark C. M. Cheung, Miho Janvier, Meng Jin, Yarin Gal, Paul Boerner, and Atılım Güneş Baydin.