Barcelona Supercomputing Center Powers Encrypted Neural Networks with Intel Tech

Homomorphic encryption provides two extraordinary benefits. First, it has the potential to be secure against intrusion even by quantum computers. Second, it allows computation directly on encrypted data without decrypting it first, which makes it possible to offload sensitive data to commercial clouds and other external locations. However, as researchers from Intel and the Barcelona Supercomputing Center (BSC) explained, homomorphic encryption "is not exempt from drawbacks that render it currently impractical in many scenarios," including that "the size of the data increases fiercely when encrypted," which has limited its application to large neural networks.

Now, that might be changing: BSC and Intel have, for the first time, run large neural networks under homomorphic encryption.

“Homomorphic encryption … enables inference using encrypted data but it incurs 100x–10,000x memory and runtime overheads,” the authors wrote in their paper. “Secure deep neural network … inference using [homomorphic encryption] is currently limited by computing and memory resources, with frameworks requiring hundreds of gigabytes of DRAM to evaluate small models.”
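To make "inference using encrypted data" concrete, here is a minimal sketch using the Paillier cryptosystem, an additively homomorphic scheme chosen purely for illustration. It is not the scheme used in the BSC/Intel work; encrypted neural-network frameworks generally rely on lattice-based schemes such as CKKS. The primes are tiny and fixed, so the sketch offers no real security, and the inputs and weights are hypothetical. It computes one neuron's weighted sum over encrypted inputs with plaintext weights. Even in this toy, each ciphertext lives modulo n² and is about twice the bit length of a plaintext; the schemes used for encrypted inference expand data far more, which is where the memory overheads quoted above come from.

```python
import math
import secrets

# Toy Paillier cryptosystem (additively homomorphic). Illustration only:
# the primes used below are tiny and fixed, so this offers no real security.


def keygen(p: int, q: int):
    """Build a public key (n, g) and private key (lam, mu, n) from two primes."""
    n = p * q
    lam = math.lcm(p - 1, q - 1)
    g = n + 1  # common choice of generator; simplifies decryption
    n_sq = n * n
    # L(x) = (x - 1) // n; mu is the inverse of L(g^lam mod n^2) modulo n
    mu = pow((pow(g, lam, n_sq) - 1) // n, -1, n)
    return (n, g), (lam, mu, n)


def encrypt(pub, m: int) -> int:
    """Encrypt 0 <= m < n using fresh randomness r coprime to n."""
    n, g = pub
    n_sq = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1
        if math.gcd(r, n) == 1:
            break
    return (pow(g, m, n_sq) * pow(r, n, n_sq)) % n_sq


def decrypt(priv, c: int) -> int:
    """Recover m = L(c^lam mod n^2) * mu mod n."""
    lam, mu, n = priv
    n_sq = n * n
    return ((pow(c, lam, n_sq) - 1) // n * mu) % n


def add_encrypted(pub, c1: int, c2: int) -> int:
    """Multiplying two ciphertexts adds their plaintexts (mod n)."""
    n, _ = pub
    return (c1 * c2) % (n * n)


def mul_plain(pub, c: int, k: int) -> int:
    """Raising a ciphertext to a plaintext power k >= 0 multiplies its plaintext by k."""
    n, _ = pub
    return pow(c, k, n * n)


pub, priv = keygen(1009, 1013)  # hypothetical toy primes

# Hypothetical private inputs (encrypted by the data owner) and plaintext weights.
inputs = [3, 5, 7]
weights = [2, 4, 1]
encrypted_inputs = [encrypt(pub, x) for x in inputs]

# The server computes sum(w * x) without ever decrypting the inputs.
acc = encrypt(pub, 0)
for c, w in zip(encrypted_inputs, weights):
    acc = add_encrypted(pub, acc, mul_plain(pub, c, w))

result = decrypt(priv, acc)  # only the private-key holder can do this
print(result)  # 33
n = pub[0]
print(n.bit_length(), (n * n).bit_length())  # 20 40 (plaintext vs. ciphertext modulus bits)
```

Paillier can add ciphertexts and scale them by plaintext constants, but it cannot multiply two ciphertexts, so on its own it covers only linear operations like the one above.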

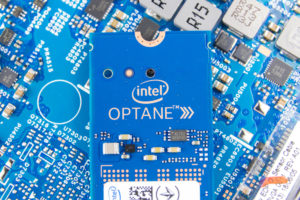

To run these models, the researchers used Intel hardware: specifically, Intel Optane persistent memory and Intel Xeon Scalable processors. They paired the Optane memory with DRAM to combine the higher capacity of persistent memory with the faster access speeds of DRAM. They tested the combination in a range of configurations on large neural networks, including ResNet-50 (now the largest neural network ever run using homomorphic encryption) and the largest variant of MobileNetV2. Following the experiments, they settled on a configuration that used just a third of the DRAM of a full-DRAM system, at the cost of only a 10 percent drop in performance relative to that system.

“This new technology will allow the general use of neural networks in cloud environments, including, for the first time, where indisputable confidentiality is required for the data or the neural network model,” said Antonio J. Peña, the BSC researcher who led the study and head of BSC’s Accelerators and Communications for High Performance Computing Team.

“The computation is both compute-intensive and memory-intensive,” added Fabian Boemer, a technical lead at Intel supporting this research. “To speed up the bottleneck of memory access, we are investigating different memory architectures that allow better near-memory computing. This work is an important first step to solving this often-overlooked challenge. Among other technologies, we are investigating the use [of] Intel Optane persistent memory to keep constantly accessed data close to the processor during the evaluation.”

To learn more about this research, read the full research paper.
