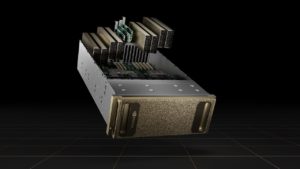

On Tuesday at its GTC developer conference, Nvidia launched the Nvidia OVX, a new computing system for industrial digital twins.

Nvidia OVX is designed to run digital twin simulations within the Omniverse, Nvidia’s industrial operations metaverse that is “a real-time physically accurate world simulation and 3D design collaboration platform.”

“Just as we have DGX for AI, we now have OVX for Omniverse,” said Nvidia CEO Jensen Huang in his GTC keynote.

In fast-paced industries constrained by time, physical space, and computing limitations, digital twin technology promises to transform operations through advanced design, modeling, and testing capabilities.

“Physically accurate digital twins are the future of how we design and build,” said Bob Pette, vice president of Professional Visualization at Nvidia. “Digital twins will change how every industry and company plans. The OVX portfolio of systems will be able to power true, real-time, always-synchronous, industrial-scale digital twins across industries.”

Specifications

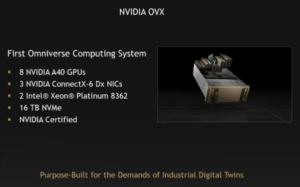

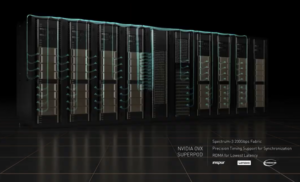

A first-generation Omniverse OVX computing system comprises eight Nvidia A40 GPUs, three Nvidia ConnectX-6 Dx 200-Gbps NICs, dual Intel Ice Lake 8362 CPUs, 1TB of system memory, and 16TB of NVMe storage. The OVX computing system can be scaled from a single pod of eight OVX servers up to a SuperPOD of 32 OVX servers when connected with Spectrum-3 switch fabric. Multiple SuperPODs can also be deployed for larger simulation needs.
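To put the scaling figures in perspective, the short sketch below multiplies the per-server specifications out to a pod and a SuperPOD. It is back-of-the-envelope arithmetic only: the class and function names are hypothetical, and the totals are naive sums that assume every server is identically configured. They are not Nvidia-published aggregate figures.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OvxServer:
    """Per-server figures quoted for a first-generation OVX system."""
    gpus: int = 8         # Nvidia A40 GPUs
    nics: int = 3         # ConnectX-6 Dx NICs
    nic_gbps: int = 200   # line rate per NIC
    memory_tb: int = 1    # system memory
    nvme_tb: int = 16     # NVMe storage


def aggregate(server: OvxServer, count: int) -> dict:
    """Naively sum resources across `count` identical servers (an assumption)."""
    return {
        "servers": count,
        "gpus": server.gpus * count,
        "nic_gbps": server.nics * server.nic_gbps * count,
        "memory_tb": server.memory_tb * count,
        "nvme_tb": server.nvme_tb * count,
    }


POD_SERVERS = 8        # a single pod, per the article
SUPERPOD_SERVERS = 32  # a SuperPOD, per the article

pod = aggregate(OvxServer(), POD_SERVERS)
superpod = aggregate(OvxServer(), SUPERPOD_SERVERS)

for name, totals in (("pod", pod), ("SuperPOD", superpod)):
    print(name, totals)
```

Under these assumptions a pod works out to 64 GPUs and a SuperPOD to 256 GPUs, with memory and storage scaling by the same factor of four.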

Capabilities

“OVX will enable designers, engineers, and planners to build physically accurate digital twins of buildings or create massive, true-to-reality simulated environments with precise time synchronization across physical and virtual worlds,” said Nvidia. As industrial systems grow more complex, testing and evaluating intricate systems and processes that often operate simultaneously and autonomously can be challenging or even impossible. Creating a digital twin of these systems, such as those in a factory or warehouse involving autonomous vehicles or robots, allows companies to optimize operations digitally for better efficiency or innovation before deploying modifications or developments in the real world.

In his keynote address, Huang noted that because of the complexity of industrial systems, “the Omniverse software and computer need to be scalable, low latency and support precise time.” Data centers process data in the lowest possible time, but not in precise time, so the company wanted to create a “synchronous data center” with OVX. “Most importantly, the network and computers are synchronized using precision timing protocol and RDMA minimizes packet transfer latency,” Huang said.
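The article does not describe how OVX implements its time synchronization. Assuming Huang’s “precision timing protocol” refers to the IEEE 1588 Precision Time Protocol (PTP), the core idea can be illustrated with the standard two-way timestamp exchange. The sketch below is a generic illustration, not Nvidia’s implementation: the function name and sample timestamps are hypothetical, and it assumes the network delay is the same in both directions.

```python
def ptp_offset_and_delay(t1: int, t2: int, t3: int, t4: int) -> tuple[float, float]:
    """Estimate clock offset and one-way delay from a two-way exchange.

    t1: time the reference clock sends a sync message (reference clock)
    t2: time the local clock receives it (local clock)
    t3: time the local clock sends a delay request (local clock)
    t4: time the reference clock receives it (reference clock)

    Assumes a symmetric path: the delay is equal in both directions.
    """
    forward = t2 - t1   # one-way delay + offset
    backward = t4 - t3  # one-way delay - offset
    offset = (forward - backward) / 2
    delay = (forward + backward) / 2
    return offset, delay


# Hypothetical timestamps in nanoseconds: the local clock runs 500 ns
# ahead of the reference and the one-way network delay is 2,000 ns.
offset_ns, delay_ns = ptp_offset_and_delay(
    t1=1_000_000, t2=1_002_500, t3=1_010_000, t4=1_011_500
)
print(f"offset = {offset_ns} ns, one-way delay = {delay_ns} ns")
```

Once the offset is known, the local clock can be corrected toward the reference. Repeating the exchange keeps many machines aligned to a shared timeline, which is the property a “synchronous data center” depends on.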

Origins of Digital Twins

Also in his keynote address, Huang gave the example of the famous Apollo 13 mission, in which astronauts’ lives hung in the balance 136,000 miles from home after an oxygen tank exploded due to faulty wiring. Back on Earth, NASA engineers used a physical replica of the mission’s Odyssey spacecraft to test procedures for power-cycling and oxygen preservation, which ultimately enabled NASA to return the crew safely. Huang noted that disaster was averted thanks to the replica and accompanying mathematical modeling, and the prototypical digital twin concept was born. NASA later coined the term digital twin, defining it as “a living virtual representation of something physical.”

A screenshot from Huang’s GTC keynote showing NASA engineers working with a replica of the Odyssey spacecraft. Source: Nvidia

While not all digital twin applications are as thrilling as saving the lives of astronauts, companies today are finding similar value in creating digital twins in the Omniverse. In Germany, DB Netze, an infrastructure arm of the national rail holding company, Deutsche Bahn, “is building in Omniverse a digital twin of Germany’s national railway network to train systems for automatic train operation and enable AI-enhanced predictive analysis for unforeseen situations in railway operations,” according to Nvidia. DB Netze is optimistic about the greater outcomes a digital twin can facilitate.

“Using a photorealistic digital twin to train and test AI-enabled trains will help us develop more precise perception systems to optimally detect and react to incidents,” said Annika Hundertmark, head of railway digitization at DB Netze. “In our current project, Nvidia OVX will provide the scale, performance, and compute capabilities that we need to generate data for intensive machine learning development and operate these highly complex simulations and scenarios.”

First-generation OVX systems have already been deployed at Nvidia and for some early customers. A second-generation system is in development that will benefit from Spectrum-4, a 51.2-terabits-per-second, 100-billion-transistor Ethernet switch meant to tune precision timing even further. The company says its Nvidia-Certified OVX system will be available through Inspur, Lenovo, and Supermicro later this year, along with enterprise-grade support and Omniverse software to be jointly provided by Nvidia and the OEM system builders. Customers can test the OVX system at one of Nvidia’s Launchpad locations worldwide.

To watch a replay of Tuesday’s GTC keynote, visit this link.
