Europe’s New AI Act Puts Ethics In the Spotlight

(kentoh/Shutterstock)

AI ethics issues such as bias, transparency, and explainability are gaining new importance as the European Union marches towards implementation of the AI Act, which would effectively regulate the use of AI and machine learning technologies across all industries. AI experts say this is a great time for AI users to familiarize themselves with ethical concepts.

The AI Act, which we wrote about last week, was introduced last year and is quickly going through the review process, with implementation possibly as soon as 2023. While the law is still being written, it looks as though the European Commission is ready to take big steps to regulate AI.

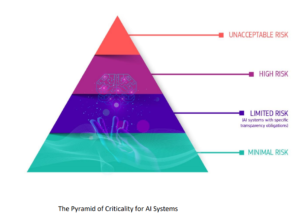

For example, the law would set new requirements for the use of AI systems, and ban some use cases altogether. So-called high-risk AI systems, such as those used in self-driving cars as well as decision-support systems in education, immigration, and employment, would require users to conduct impact assessments and audits on the AI applications. Certain AI use cases would be closely tracked in a database, and others would require an external auditor to sign off before they could be used.

There’s a lot of demand for interpretability and explainability as part of an MLOps engagement or a data science consulting engagement, says Nick Carrel, the director of data analytics consulting at EPAM Systems, a Newtown, Pennsylvania-based software engineering firm. The EU’s AI Act is also driving companies to seek insight and answers on ethical AI, he says.

“There’s a lot of demand right now for what’s called MLOps, which is the science of operationalizing the machine learning models. We very much see ethical AI as being one of the key foundations of this process,” Carrel says. “We also have a lot more demands that come from our clients ... because they read about the pending EU legislation, which is about to come into force at the end of the year around AI systems, and they want to be ready for that.”

Interpretability and explainability are separate but related concepts. The interpretability of models refers to the degree to which humans can comprehend and predict what decision a model will make, while explainability refers to the ability to describe with precision how the model actually works. You can have one without the other, says Andrey Derevyanka, the head of the data science and machine learning unit at EPAM Systems.

“Imagine that you are doing some experiments, maybe some chemical experiment mixing two liquids. This experiment is interpretable because, well, you see what you’re doing here. You take one thing, add another thing, we get the result,” Derevyanka tells Datanami. “But for this experiment to be explainable, you need to know the chemistry and you need to know how the reaction is made, is processed, and you need to know the inner details of this process.”

Deep learning models, in particular, can be interpretable but not explainable, Derevyanka says. “You’ve got a black box and it operates somehow, but you know you do not know what is inside,” he says. “But you can interpret: If you give this input, you get this output.”
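Derevyanka’s “give this input, get this output” point can be illustrated with a small probe. The following is a minimal sketch, not anything EPAM describes using: the scoring function, the feature names, and the step size are all hypothetical stand-ins. It treats the model as an opaque callable, nudges one input at a time, and records how the output moves.

```python
from typing import Callable, Dict


def black_box_model(features: Dict[str, float]) -> float:
    """Hypothetical opaque scorer. In practice this would be a trained
    model whose internals we cannot inspect; the formula is illustrative."""
    return 0.5 * features["income"] - 2.0 * features["debt"] + 0.0 * features["age"]


def probe_sensitivity(
    model: Callable[[Dict[str, float]], float],
    baseline: Dict[str, float],
    delta: float = 1.0,
) -> Dict[str, float]:
    """Nudge each input by `delta`, one at a time, and record the change
    in the model's output relative to the baseline prediction."""
    base_output = model(baseline)
    effects = {}
    for name in baseline:
        nudged = dict(baseline)  # copy so the baseline stays untouched
        nudged[name] += delta
        effects[name] = model(nudged) - base_output
    return effects


# Hypothetical baseline input for illustration only.
baseline = {"income": 40.0, "debt": 5.0, "age": 30.0}
effects = probe_sensitivity(black_box_model, baseline)
for name, change in effects.items():
    print(f"{name}: output changes by {change:+.2f}")
```

A probe like this shows what the model does around one input, which is interpretability in the sense used above. It says nothing about why the model behaves that way, which is the explainability gap Derevyanka describes.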

Chasing Down Bias

Bias is another important subject when it comes to ethical AI. It’s impossible to completely eradicate bias from the data, but it’s important that organizations put in the effort to remove bias from their AI models, says Umit Cakmak, the director of EPAM Systems’ data and AI practice.

“These things have to be analyzed over time,” Cakmak says. “This is a process, because the bias is already baked into historical data. There is no way of cleaning the bias from the data. So as a business, you have to set up some certain processes so that your decision gets better over time, which will improve your data quality over time, therefore you have less and less bias over time.”

Explainability is important for giving stakeholders–including internal or external auditors, as well as customers and executives who are putting their reputations on the line–the confidence that AI models are not making poor decisions based on biased data.

There are many examples in the literature of data bias leaking into automated decision-making systems, including racial bias showing up in models used to rate employee performance or to pick job candidates from resumes, Cakmak says. Being able to show how a model came to its conclusion is important to showing that steps were taken to remove data bias from the models.
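One simple way to check a screening model for the kind of bias described above is to compare selection rates across groups. The sketch below is an illustration under stated assumptions, not a method attributed to Cakmak or required by the AI Act: the records are made-up, the group labels are placeholders, and the 0.8 threshold is borrowed from the “four-fifths” rule of thumb used in U.S. employment guidance.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def selection_rates(records: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Return the fraction of candidates selected within each group."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, picked in records:
        totals[group] += 1
        selected[group] += int(picked)
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates: Dict[str, float]) -> float:
    """Ratio of the lowest group selection rate to the highest one."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so there is no disparity to measure
    return min(rates.values()) / highest


# Made-up model decisions: (group label, was the candidate shortlisted?)
records = (
    [("group_a", True)] * 4 + [("group_a", False)] * 4
    + [("group_b", True)] * 2 + [("group_b", False)] * 6
)

rates = selection_rates(records)
ratio = disparate_impact_ratio(rates)
THRESHOLD = 0.8  # assumed rule-of-thumb cutoff, not a legal standard here

print(rates)
print(f"ratio={ratio:.2f}, flag for review: {ratio < THRESHOLD}")
```

A low ratio does not prove that a model is unfair, and a high one does not prove that it is fair. Tracking such a number over time is one way to put Cakmak’s “this is a process” advice into practice.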

Cakmak recalls how a lack of explainability led a healthcare company to abandon an AI system developed for cancer diagnoses. “The AI worked up to some extent, but then the project got cancelled because they couldn’t develop their trust and confidence in the algorithm,” he says. “If you’re not able to explain why the result came in that way, then you’re not able to proceed with your treatment.”

EPAM Systems helps companies implement AI in a trusted manner. The company typically follows a specific set of guidelines that covers how the data is collected, how the machine learning models are prepared, and how the models are validated and explained. Making sure the AI team successfully meets and documents these checks or “quality gates” is an important element in ethical AI, Cakmak says.
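The idea of documented “quality gates” can be sketched as a simple checklist that blocks a release when a gate has failed or has no supporting evidence. This is a hypothetical illustration only: the gate names paraphrase the stages mentioned above, and the class, function, and evidence identifiers are invented, not EPAM’s actual tooling.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class QualityGate:
    name: str
    passed: bool
    evidence: str = ""  # note or document ID showing the check was done


def blocking_gates(gates: List[QualityGate]) -> List[str]:
    """Return the names of gates that failed or lack documentation."""
    return [g.name for g in gates if not g.passed or not g.evidence.strip()]


# Hypothetical gate results for one model release.
gates = [
    QualityGate("data collection reviewed", True, "hypothetical-doc-001"),
    QualityGate("model preparation reviewed", True, "hypothetical-doc-002"),
    QualityGate("model validated", True, ""),  # passed but undocumented
    QualityGate("model explained", False, "hypothetical-doc-004"),
]

blockers = blocking_gates(gates)
if blockers:
    print("Release blocked by:", ", ".join(blockers))
else:
    print("All quality gates met and documented.")
```

Treating a passed but undocumented gate as a blocker reflects the point that the checks must be both met and documented.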

Ethics and the AI Act

The biggest and most well-run companies have already gotten the message on the need for responsible AI, according to Steven Mills, the Global GAMMA Chief AI Ethics Officer at Boston Consulting Group.

However, as the AI Act gets closer to becoming law, we will see more companies around the world accelerating their responsible AI projects to ensure they’re not running afoul of the changing regulatory environment and new expectations.

“There’s a lot of companies that have started implementing AI and are realizing we don’t have our arms around, as much as we want to, all the potential unintended consequences, and we need to get that in order very quickly,” Mills said. “This is top of mind. It does not feel like people are just being haphazard in how they apply this.”

The pressure to implement AI in an ethical manner is coming from the top of organizations. And in some cases, it’s coming from outside investors, who don’t want the risk of their investments being tarnished by the perception of using AI in a bad way, Mills said.

“We are seeing a trend where investors, whether they’re public companies or venture funds, they want to make sure that the AI is being built responsibly,” he said. “It might not be overt. It might not be obvious to everyone. But in the background, that is happening where some of these VCs are being thoughtful about where they’re putting money in making sure those startups are doing things the right way.”

While the specifics of the AI Act are still vague at this time, the law has the potential to bring clarity to the use of AI, which will benefit both companies and consumers, said Carrel.

“My first reaction was that it’s going to be really restrictive,” said Carrel, who implemented machine learning models in the financial services industry prior to joining EPAM Systems. “I’ve gone through years of trying to push the boundaries of decisioning within financial services, and suddenly there’s a piece of legislation that comes in, it’s going to kind of sabotage the work that we’ve done.”

But the more he looks at the pending law, the more he likes what he sees.

“I think that this will also progressively increase the confidence of the public in terms of the use of AI within different industries,” Carrel said. “The legislation stipulates that you have to register high-risk AI systems with the EU, which means that you know there will be a very clear list compiled somewhere of every single AI high-risk system being used. And this gives good power to the auditors, which means that progressively the naughty boys and the bad players will be punished, and hopefully over time we get left with best practice on people who want to use AI and ML for the better cause and in a responsible way.”
