Amazon re:MARS Highlights Intelligence from Shops to Space

Amazon hosted its inaugural re:MARS event back in 2019, with “MARS” here standing for machine learning, automation, robotics, and space. For the past two years, the event was on hold due to the pandemic, but this past week re:MARS returned in full force, held in person in Las Vegas and highlighting applications ranging from Alexa and shopping to assisted coding and space-based data processing. Here are some of the topics that Amazon highlighted during re:MARS.

Physical retail shopping

Amazon has engaged in a growing number of physical retail experiments over the past decade or so, perhaps most famously through its Amazon Go stores, which utilize the company’s “Just Walk Out” technology to track what customers put in their baskets or carts and automatically charge them as they walk out of the store—without the need for a checkout line. At re:MARS, Amazon detailed the computer vision and machine learning developments that enable projects like Just Walk Out to advance.

Just Walk Out, Amazon says, has expanded to many Amazon stores, Whole Foods stores, and even third-party retailers, along with a new Amazon Style store for apparel. This expansion, the company said, was enabled by reducing the number of cameras that Just Walk Out needs and by making those edge devices powerful enough to run the necessary deep neural networks locally rather than transferring the data back and forth. Amazon also cited further advances in the computer vision and sensor fusion algorithms that detect items in motion.

Amazon also stressed the role of synthetic data in enhancing physical retail experiences. “When my team set out to reimagine the in-store shopping experience for customers, one challenge we faced was getting diverse training data for our AI models to ensure high accuracy,” explained Dilip Kumar, Amazon’s vice president for physical retail and technology. “To address this challenge, our research teams built millions of sets of synthetic data—machine-generated photorealistic data—to help build and perfect our algorithms and provide a seamless customer experience.” This got as granular as simulating individual shopping scenarios and the lighting conditions at different stores.
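To make the idea of simulated scenarios and lighting conditions more concrete, here is a minimal sketch of how randomized scene parameters might be sampled before rendering synthetic images. It is not Amazon's pipeline: the `SceneConfig` fields, the value ranges, and the `sample_scenes` helper are all hypothetical and chosen only for illustration.

```python
import random
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class SceneConfig:
    """One hypothetical synthetic shopping scene to hand to a renderer."""
    store_id: str
    light_intensity: float   # relative brightness, 1.0 = nominal (assumed scale)
    color_temp_kelvin: int   # warm to cool store lighting (assumed range)
    items_in_hand: int       # how many products the simulated shopper holds


def sample_scenes(store_ids: Sequence[str], n: int, seed: int = 0) -> List[SceneConfig]:
    """Draw n randomized scene configurations, reproducibly for a given seed."""
    rng = random.Random(seed)
    scenes = []
    for _ in range(n):
        scenes.append(
            SceneConfig(
                store_id=rng.choice(list(store_ids)),
                light_intensity=rng.uniform(0.4, 1.6),
                color_temp_kelvin=rng.randint(2700, 6500),
                items_in_hand=rng.randint(0, 3),
            )
        )
    return scenes


if __name__ == "__main__":
    for scene in sample_scenes(["store_a", "store_b"], n=3, seed=42):
        print(scene)
```

Varying parameters like these across many samples is one common way to expose a vision model to conditions that would be slow or costly to capture with real cameras.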

Conversational and “ambient” AI

“Ambient” intelligence is an increasingly popular buzzword among companies that provide smart home or automation technology. Amazon says it refers to the idea of AI that is “embedded everywhere in our environment,” which is both reactive (responding to requests) and proactive (anticipating needs) and which leverages a wide variety of sensors. In Amazon’s terms, of course, this is associated with a name: Alexa. Rohit Prasad, senior vice president and head scientist for Alexa AI at Amazon, made the case at re:MARS that ambient intelligence is the most practical pathway to generalizable intelligence.

“Generalizable intelligence doesn’t imply an all-knowing, all-capable, über AI that can accomplish any task in the world,” Prasad wrote in a subsequent blog post. “Our definition is more pragmatic, with three key attributes: a GI agent can (1) accomplish multiple tasks; (2) rapidly evolve to ever-changing environments; and (3) learn new concepts and actions with minimal external human input.”

Alexa, Prasad said, “already exhibits common sense in a number of areas,” such as detecting frequent customer interaction patterns and suggesting that the user make a routine out of them. “Moving forward, we are aspiring to take automated reasoning to a whole new level,” he continued. “Our first goal is the pervasive use of commonsense knowledge in conversational AI. As part of that effort, we have collected and publicly released the largest dataset for social common sense in an interactive setting.”

The item that Prasad said he was most excited about from his keynote was a feature called “conversational explorations” for Alexa. “We are enabling conversational explorations on ambient devices, so you don’t have to pull out your phone or go to your laptop to explore information on the web,” he explained. “Instead, Alexa guides you on your topic of interest, distilling a wide variety of information available on the web and shifting the heavy lifting of researching content from you to Alexa.”

Prasad said that this advancement has been made possible by dialogue flow prediction enabled by deep learning in Alexa Conversations and web-scale neural information retrieval. Deep learning again, of course, enters the picture when summarizing the retrieved information in snippets.

ML-assisted coding

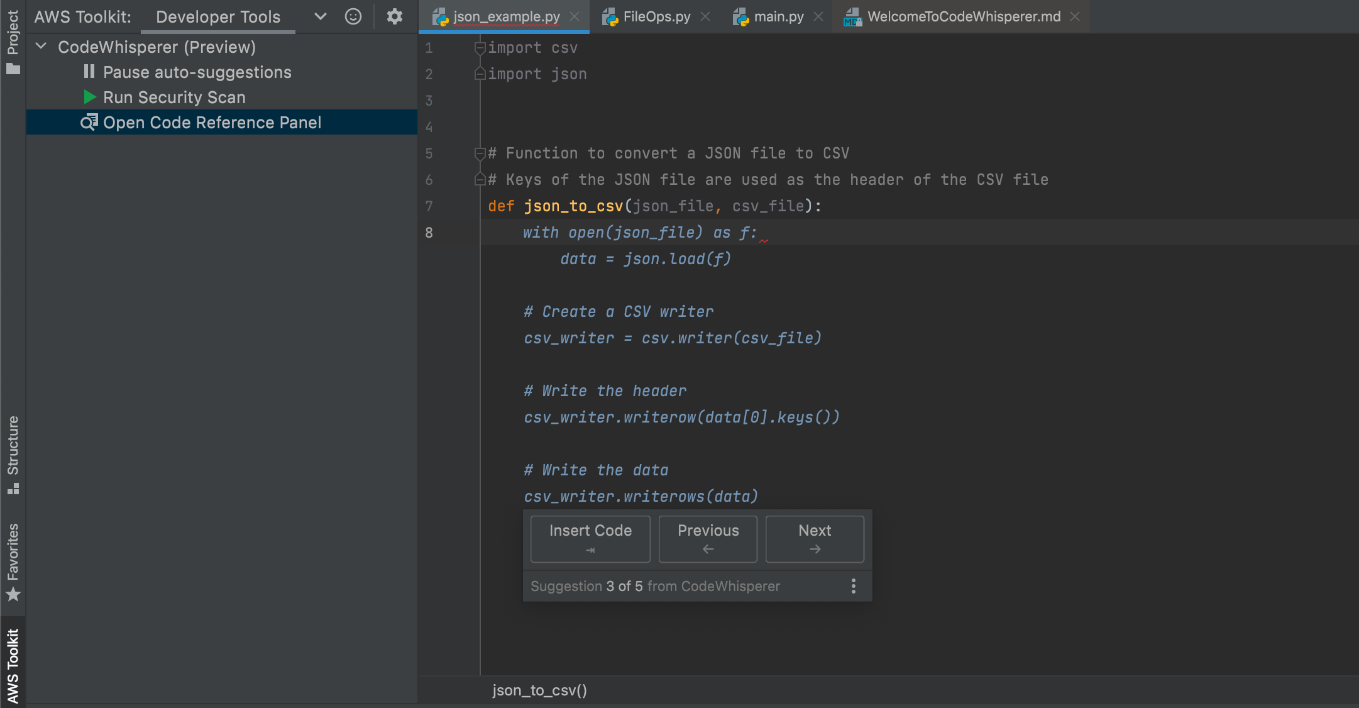

At re:MARS, Amazon announced Amazon CodeWhisperer, which they describe as an ML-powered service “that helps improve developer productivity by providing code recommendations based on developers’ natural comments and prior code.” CodeWhisperer, the company explained, can process a comment defining a specific task in plain English, with the tool identifying the ideal services to complete the task and writing the necessary code snippets.

CodeWhisperer, Amazon explained, goes beyond traditional autocomplete tools by generating entire functions and code blocks rather than individual words. To accomplish this, it was trained on “vast amounts of publicly available code.” CodeWhisperer is incorporated via the Amazon Web Services (AWS) Toolkit extension for IDEs. Once enabled, it automatically begins recommending code in response to written code and comments.
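As a rough illustration of the comment-driven workflow described above, the sketch below pairs a plain-English comment with the kind of complete function an ML coding assistant might propose. It is a hand-written example, not actual CodeWhisperer output; the comment wording and the `names_at_least` function are hypothetical.

```python
import csv
import io
from typing import List

# Hypothetical prompt comment a developer might type in the IDE:
# Parse CSV text with "name" and "age" columns and return the names
# of everyone at or above a minimum age, sorted alphabetically.


def names_at_least(csv_text: str, min_age: int) -> List[str]:
    """The sort of whole-function suggestion such a tool could offer."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return sorted(row["name"] for row in reader if int(row["age"]) >= min_age)


if __name__ == "__main__":
    sample = "name,age\nAda,36\nLinus,17\nGrace,45\n"
    print(names_at_least(sample, 18))
```

The point of the contrast with traditional autocomplete is scope: the suggestion covers the whole function body, which the developer can then accept, edit, or reject.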

Amazon — in space!

Perhaps most true to the re:MARS moniker, Amazon showcased how AWS teamed up with aerospace firm Axiom Space to remotely operate an AWS Snowcone SSD-based device on the International Space Station (ISS). Edge computing has become an increasingly high-priority item for space travel and experiments as data collection grows but bandwidth remains difficult to come by and space weather continues to put electronics through the wringer.

AWS and Axiom Space teamed up to analyze data from Axiom Mission 1 (Ax-1), the first all-private mission to the space station. On the Ax-1 mission, the private astronauts spent most of their time engaging with a couple dozen research and technology projects, including the use of AWS Snowcone. These experiments sometimes produced terabytes of data each day—a manageable sum on Earth, but much more onerous in space.

Snowcone, while designed for rugged environments, was not designed for space. AWS worked with Axiom and NASA for seven months to prepare the device for space travel. On the mission, the team back on Earth successfully communicated with the device and applied “a sophisticated [ML]-based object recognition model to analyze a photo and output a result in less than three seconds.” Amazon says that they were able to repeat this process indefinitely, showing promising results for future missions.

“AWS is committed to eliminating the traditional barriers encountered in a space environment, including latency and bandwidth limitations,” said Clint Crosier, director of Aerospace and Satellite at AWS. “Performing imagery analysis close to the source of the data, on orbit, is a tremendous advantage because it can improve response times and allow the crew to focus on other mission-critical tasks. This demonstration will help our teams assess how we can make edge processing a capability available to crews for future space missions.”

And more

Amazon re:MARS featured a number of other talks, keynotes, and reveals, including the general availability of AWS IoT ExpressLink and synthetic data generation via Amazon SageMaker.
