(Peshkova/Shutterstock)

We’ve reached the Skynet moment for AI, it would seem. GPT-4, cast in the role of Terminator, has not only achieved consciousness, but it’s coming for your job, too. Everything will be different now, and there’s not much we can do about it, so we might as well welcome our new AI overlords.

Or so seems to be the conclusion that many have drawn from the sudden emergence of large language models (LLMs) on the public scene. While the capabilities of LLMs have been impressing AI researchers for a while, everything changed on November 30, when OpenAI, playing the role of Cyberdyne Systems, released ChatGPT to the world.

The winter months provided a steady drumbeat of AI excitement, as people poked ChatGPT and prodded Sydney, the ChatGPT variant developed for Microsoft’s Bing search engine. There were some moments of amazement, as ChatGPT composed lyrics and wrote eloquently across a number of topics. There was also some profoundly weird stuff, such as when Sydney compared a journalist to Hitler and threatened to destroy his reputation.

Things have come to a head over the past two weeks, which have been especially hectic on the AI front. To recap:

March 15: OpenAI delivered GPT-4, ostensibly the biggest and most capable LLM ever devised by humans. While OpenAI isn’t saying, the speculation is that GPT-4 is composed of 100 trillion parameters, dwarfing the 175-billion parameter GPT-3.

March 21: Google–which kicked off the current AI fork with its Transformer paper in 2017–formally opened up its ChatGPT competitor, dubbed Bard, to the world.

March 22: A group called the Future of Life Institute urged a pause on LLM research, citing “profound risks to society and humanity.” That warning may have come too late, because on….

March 27: Microsoft researchers asserted in a paper that GPT-4 may have already achieved artificial general intelligence (AGI), which is basically the Holy Grail of AI.

Many are declaring that we’re at an inflection point with regard to AI. Nvidia CEO Jensen Huang last week declared that generative AI “is a new computing platform like PC, Internet, mobile, and cloud.” Nearly every major software vendor has made some sort of announcement about LLMs, most often declaring an integration point with them.

Some have gone beyond that and declared that we’ve reached a “tipping point” with AI. Millions of jobs will be automated away thanks to the capability of LLMs to absorb information and generate correct answers.

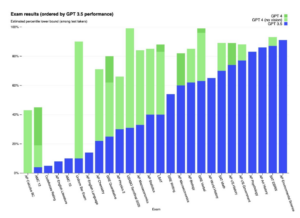

An unpublished research paper released earlier this week, dubbed “GPTs are GPTs: An Early Look at the Labor Market Impact Potential of Large Language Models,” found that GPT-4 can score as high as people on many types of tests. In fact, the AI on average scores higher in many cases.

“Our analysis indicates that the impacts of LLMs like GPT-4, are likely to be pervasive,” the researchers write. “While LLMs have consistently improved in capabilities over time, their growing economic effect is expected to persist and increase even if we halt the development of new capabilities today. We also find that the potential impact of LLMs expands significantly when we take into account the development of complementary technologies.”

One of the wildcards in all of this may be the tendency for LLMs to develop unexpected “emergent” capabilities. The track record shows that as the models get bigger, they get surprisingly better at a number of tasks, going against researchers’ expectations. That’s a cause for both delight and concern.

GPT-4 displays a propensity for testing well (Image courtesy “GPTs are GPTs: An Early Look at the Labor Market Impact Potential of Large Language Models” paper)

So, we should all be learning how to apply for government unemployment benefits, right? Not so fast, says Chirag Shah, a professor at the Information School at the University of Washington. According to Shah, we would do well to stop the obsession with doomsday predictions and just take a deep breath.

“Currently, people are divided into camps. Either they are hyped up so much that this is it. This is the big AI moment we’re looking for, and now AI is going to make everything better,” Shah tells Datanami. “And then people on the other camp which is, Oh no, this is the AI that we were afraid of, and now it’s going destroy the world.

“I think they both are wrong,” he continues. “In reality, it’s not going to just cure all our problems. It’s not that smart or even understanding as we may think. And I also don’t think it’s going just destroy. This is not the Terminator.”

To be sure, there is plenty of FOMO (fear of missing out) and FUD (fear, uncertainty, and doubt) going around. While the venture capital spigots are turned off at the moment, eagle-eyed entrepreneurs are sensing that the technology is mature enough to support new business models. Whether it’s accounting, journalism, education, or programming itself, AI would seem poised to take on much of the heavy lifting when it comes to cognitive tasks.

Shah sees LLMs having an impact, to be sure. But instead of replacing people whole hog, LLMs will be brought in as more of a co-pilot to assist people with their work. They will be collaborators, but not the drivers.

“There’s going to be this shuffle, which is similar to what we have seen many other times before, where new technology essentially disrupts the market and some people lose jobs and others basically have to learn new skills,” he says. “They’re still employed, but they need to quickly pick up new things.”

But Shah is skeptical that LLM technology, in its current form, is ready to have the sort of major impact that so many seem to think it will.

“I think in some cases we are definitely getting ahead of ourselves,” he says. “People have already started putting this in practice where it can replace many of the task, if not the entire jobs. But I’ve also seen people getting too hyped by what it can do, without enough understanding what its limitations are.”

In his view, the current race between Microsoft (and its partner OpenAI) and Google is reminiscent of the epic rivalry between Ford and Ferrari on the 1960s racing circuit. The two automakers were enmeshed in a battle to create a faster race car, and the world at large watched and cheered them on. But the rivalry didn’t result in any practical products for consumers.

“I think that’s where we are now,” Shah says. “These [LLMs] are race cars. They are building them to show that they can be better than the other guy. They are not necessarily for the common consumption.”

The industry may take these LLMs and build useful products at some point, he says. But even then, the LLM approach in many cases won’t necessarily be the right one. “This is like a bazooka,” he says of LLMs, “and sometimes you just need a shotgun or a knife.”

It’s hard to resist the lure of technological tipping points. LLMs are advancing at a blistering clip, and it’s easy to get caught up in the AI hullabaloo that “everything is different now.” Steve Jobs was an amazing technologist, and this may be another iPhone moment. But did anybody actually ask for this?

“Right now, we’re just too caught up into this moment to really see through it. And I don’t blame us, because look at the speed at which this is going. It’s just unprecedented,” he says. “But hopefully someday the dust will settle and we will be more critical of this [and ask ourselves] do we really need this for this particular application, for this particular kind of user experience? Then the answer to that is going be no, in many cases.”

Related Items:

ChatGPT Puts AI At Inflection Point, Nvidia CEO Huang Says

GPT-4 Has Arrived: Here’s What to Know
