AI Threat ‘Like Nuclear Weapons,’ Hinton Says

(Dn-Br/Shutterstock)

The rise of artificial intelligence poses an existential threat to humans and is on par with the use of nuclear weapons, according to more than a third of AI researchers polled in a recent Stanford study as well as Geoffrey Hinton, one of the “Godfathers of AI.”

Hinton, who shares a 2018 Turing Award with Yann LeCun and Yoshua Bengio for their work on the neural networks at the heart of today’s massive deep learning models, announced this week that he quit his research job at Google so he could talk more freely about the threats posed by AI.

“I’m just a scientist who suddenly realized that these things are getting smarter than us,” Hinton told CNN’s Jake Tapper in an interview that aired May 3. “I want to sort of blow the whistle and say we should worry seriously about how we stop these things getting control over us. And it’s going to be very hard and I don’t have the solutions.”

Since OpenAI released its ChatGPT AI model in late November 2022, the world has marveled at the rapid progress made in AI. The field of natural language processing (NLP) has grown by leaps and bounds, thanks in large part to the introduction of large language models (LLMs). ChatGPT isn’t the first LLM, and others like Google’s Switch Transformer have been impressing tech experts for years, but the pace has picked up sharply in the few months since ChatGPT’s introduction.

Many people have had great interactions with ChatGPT and its offshoots. Data and analytics firms have been furiously integrating the technology into existing products, and ChatGPT quickly soared to be the number one job skill. But there have been a few eyebrow-raising episodes.

For instance, Microsoft’s Bing Chat, which is based on ChatGPT, left several journalists flabbergasted following its launch in February when it compared one to Hitler, said it wanted to unleash a virus and hack banks and nuclear plants, and threatened to destroy a reporter’s reputation.

It would be a mistake to consider those threats purely theoretical, according to Hinton.

“If it gets to be much smarter than us, it will be very good at manipulating, because it will have learned that from us,” he told CNN. “And there are very few examples of a more-intelligent thing being controlled by a less-intelligent thing. But it knows how to program, so it will figure out ways of getting around restrictions we put on it. It will figure out ways of manipulating people to do what it wants.”

Restrictions are needed to prevent the worst outcomes with AI, Hinton said. While he isn’t sure what the regulation would look like, or even whether it’s possible to regulate AI in the first place, the world community needs to at least be having the conversation, he said.

Hinton pointed out that he didn’t sign the open letter calling for a six-month pause of AI research, which his former colleague Bengio signed, along with more than 1,000 other AI researchers (although not LeCun). He didn’t sign it because even if U.S. researchers committed to a pause, there would be no guarantee that Chinese researchers would pause too.

“I don’t think we can stop the progress,” Hinton said in the CNN interview. “I didn’t sign the petition saying we should stop working on AI because if people in America stopped, people in China wouldn’t. It’s very hard to verify whether people are doing it.”

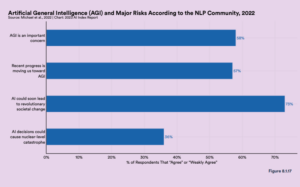

More than one-third of AI researchers believe AGI could lead to a nuclear-level catastrophe (Source: Stanford’s Artificial Intelligence Index Report 2023)

While the research won’t stop, the existential danger posed by AI is of such a clear and present nature that it should convince leaders of the U.S. and China to work together to restrict its use in some manner, he said.

“It’s like nuclear weapons,” Hinton continued. “If there’s a nuclear war, we all lose. And it’s the same with these things taking over. So since we’re all in the same boat, we should be able to get agreement between China and the U.S. on things like that.”

Interestingly, the nuclear weapons comparison was also brought up in Stanford’s Artificial Intelligence Index Report 2023, which was released last month. Among the encyclopedic 386 pages of charts and graphs was one about the risks posed by artificial general intelligence (AGI). Apparently, 36% of NLP researchers polled by Stanford think that AGI “could cause nuclear-level catastrophe.”

Hinton made similar comments in an interview with the New York Times published May 1. In the past, when asked why he chose to work on something that could be used to hurt people, Hinton would paraphrase physicist Robert Oppenheimer, who led the American effort to build the world’s first nuclear bombs during World War II.

“When you see something that is technically sweet, you go ahead and do it,” Hinton would say, paraphrasing Oppenheimer, according to the Times. And just as Oppenheimer later regretted his work on the Manhattan Project and spent the rest of his career trying to stop the spread of nuclear weapons, Hinton now regrets his work in developing modern AI and is actively working to reverse the progress he and others so famously made.
