Altman’s Suggestion for AI Licenses Draws Mixed Response

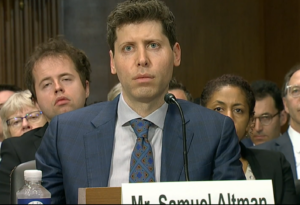

OpenAI CEO Sam Altman testifies in Congress on May 16, 2023

The US should consider creating a new regulatory body to oversee the licensing and use of AI “above a certain threshold,” OpenAI CEO Sam Altman said in Congressional testimony yesterday. His recommendation was met with disdain from some, while other AI experts applauded his idea.

“OpenAI was founded on the belief that artificial intelligence has the potential to improve nearly every aspect of our lives but also that it creates serious risks we have to work together to manage,” Altman told a US Senate subcommittee Tuesday.

“We think that regulatory intervention by governments will be critical to mitigate the risks of increasingly powerful models,” he continued. “For example, the US government might consider a combination of licensing and testing requirements for development and release of AI models above a threshold of capabilities.”

Altman suggested the International Atomic Energy Agency (IAEA), which inspects nuclear weapons development programs, as a model for how a future AI regulatory body could function. Geoffrey Hinton, one of the main developers of the neural networks at the heart of today’s powerful AI models, recently compared AI to nuclear weapons.

Altman didn’t specify exactly where that licensing threshold would lie. He said possible triggers for government intervention would include the release of a bioweapon created by AI, or an AI model that can “persuade, manipulate, [or] influence a person’s behavior or a person’s beliefs.”

Florian Douetteau, the CEO and co-founder of machine learning and analytics software developer Dataiku, applauded Altman’s approach.

“Unprecedented technology requires unprecedented government involvement to protect the common good,” Douetteau told Datanami. “We’re on the right track with a licensing approach, but of course the hard part is ensuring the licensing process keeps pace with innovation.”

The notion that government should be in the business of doling out licenses for the right to run software code was panned by others, including University of Washington Professor and Snorkel AI CEO Alex Ratner.

“Sam Altman is right: AI risks should be taken seriously, especially short-term risks like job displacement and the spread of misinformation,” Ratner said. “But in the end, the proposed argument for regulation is self-serving, designed to reserve AI for a chosen few like OpenAI.”

Because of the cost to train large AI models, they have primarily been the domain of tech giants like OpenAI, Google, Facebook, and Microsoft. Introducing regulation at this early stage in the development of the technology would solidify any leads those tech giants currently have and slow AI progress in promising areas of research, such as drug discovery and fraud detection, Ratner said.

“Handing a few large companies state-regulated monopolies on AI models would kill open-market capitalism and all the academic and open-source progress that actually made all of this technology possible in the first place,” he said.

Epic Games CEO Tim Sweeney objected to the idea on constitutional grounds. “The idea that the government should decide who should receive a license to write code is as abhorrent as the government deciding who is allowed to write words or speak,” Sweeney wrote on Twitter.

The prospect of jobs being created and destroyed by AI also came up at the hearing. Altman indicated he was bullish on the potential of AI to create better jobs. “GPT-4 will, I think, entirely automate away some jobs, and it will create new ones that we believe will be much better,” he said.

Gary Marcus, a professor emeritus at New York University who was asked to testify along with Altman and IBM’s chief privacy and trust officer, Christina Montgomery, expressed skepticism about Altman’s testimony, noting that OpenAI hasn’t been transparent about the data it uses to train its models.

“We have unprecedented opportunities here,” Marcus said, “but we are also facing a perfect storm of corporate irresponsibility, widespread deployment, lack of adequate regulation and inherent unreliability.”

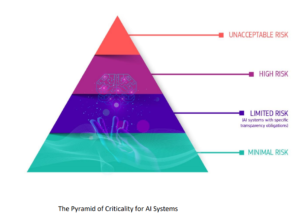

The three witnesses were united in one respect: the need for an AI law. Marcus and Montgomery joined Altman in calling for some form of regulation. Montgomery called for a new law similar to the European Union’s proposed AI Act.

The AI Act would create a regulatory and legal framework for uses of AI that affect EU residents, covering how AI is developed, what companies can use it for, and the legal consequences of failing to adhere to the requirements (fines of up to 6% of a company’s annual revenue have been suggested). Companies would be required to receive approval before rolling out AI in some high-risk cases, such as employment or education, and other uses would be outlawed outright, such as real-time biometric identification in public spaces or social credit systems like the one employed by China’s government.

If the European Parliament approves the AI Act during a vote planned for next month, the law could go into effect later this year or in 2024, although a grace period of two years is expected.
