Why Samsara Picked Ray to Train AI Dashcams

When the engineers at Samsara began building their first smart dashcam several years ago, they found themselves using a series of different frameworks to collect data from the IoT devices, train the machine learning models, and perform other tasks. Then they discovered Ray could dramatically simplify the workflow, and the rest is history.

Well, there’s actually quite a bit more that goes into Samsara’s use of Ray, the distributed computing framework developed at UC Berkeley’s RISELab. And like some of the best tech stories, it starts with a cheesy beginning.

A boutique cheese company called Cowgirl Creamery needed a way to monitor temperatures in its delivery trucks, so Samsara CEO Sanjit Biswas–an MIT grad who sold his first startup, Meraki, to Cisco for $1.2 billion–obliged with a network of mobile sensors.

Fast forward a few years, and Samsara’s ambitions–as well as its capabilities–have entered “big cheese” territory. Driven by the fact that 40% of the country’s economic output, currently about $8 trillion, is closely tied to physical operations, such as trucking, Biswas realized there was a huge potential to leverage emerging IoT and machine learning technology in the physical world, and so he set out to build a system to do that.

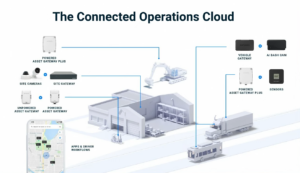

Connected Operations Cloud

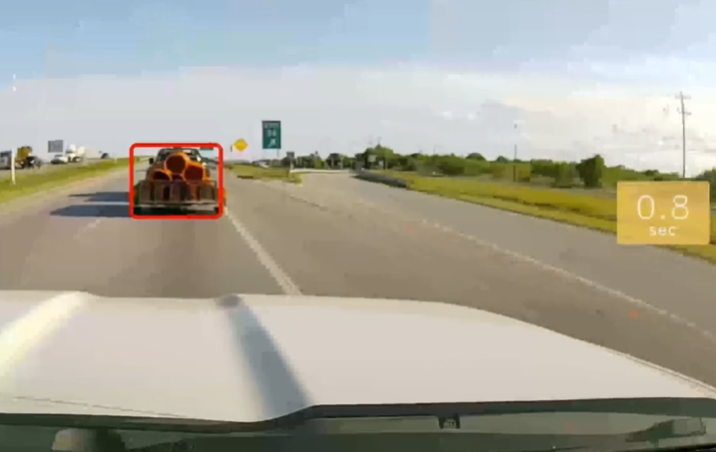

The smart dashcams are the pointy end of the spear for Samsara’s Connected Operations Cloud. The company’s AI dashcams detect, in real time, not only hazards on the road but also hazards behind the wheel, says Evan Welbourne, the company’s head of data and AI.

“The main thing really is real-time event detection in the field on an AI dashcam that can alert a driver if they’re driving too closely or if there’s a risk of a forward collision,” Welbourne tells Datanami, “or if they’re doing something unsafe, like looking at their phone while they’re driving.”

In addition to the real-time component, Samsara dashcams also collect data for later analysis, which helps customers coach their drivers on how to improve safety over time. Samsara also develops vehicle gateways that sit in the glove compartment of the truck and collect other types of data, including vehicle location and speed, as well as operations- and maintenance-related items, like fuel consumption and tire pressure.

Beyond dashcams, Samsara also develops cameras and other sensors that can be deployed in remote sites, like mining camps, factories, or warehouses, all in support of the company’s goal to bring real-time alerting and AI to the physical world.

Distributed IoT

Samsara faced several tech challenges in developing its Connected Operations Cloud and IoT devices that deploy to the field.

For starters, the company needs to be able to fuse the various data types and run ML inference on top of them in real time. It also needs to collect data samples to upload to the cloud for later analysis. From a hardware standpoint, all of this software has to run on small devices that live on the edge with limited processing capabilities and restrictive thermal properties.

The amount of data Samsara collects and processes on behalf of customers poses a major challenge. With millions of devices deployed across more than 17,000 Samsara customers, the scale of the data involved keeps engineers on their toes, Welbourne says.

“Video of course is a big component of it, but there’s also text data,” he says. “There’s all kinds of sensor data and diagnostics. We have this ever-expanding number of types of devices and types of diagnostics that we’re accommodating and serving back to our customers.”

There’s no shortage of open source machine learning frameworks. Samsara initially used two popular ones, TensorFlow and PyTorch, to build its computer vision models, which detect cars that are travelling too close or a truck driver who’s distracted. It has also started using generative AI capabilities and foundation models for things like multi-model training and labeling data, Welbourne says.

A Unified Stack

But there’s a lot more that goes into deploying a workable AI product in the field than just picking the right model. According to Welbourne, the company’s biggest challenge is the end-to-end implementation of the entire solution. That’s where Ray has paid real dividends, Welbourne says.

“The AI development cycle consists of things like data collection, training, retraining, evaluation, and a bunch of deployment and maintenance,” Welbourne says. “That entire process has really changed and accelerated in this new world, and what we find is that Ray has been a really good framework to kind of string it all together.”

Instead of having separate teams for data science and data engineering and other disciplines, Samsara seeks to empower all of its scientists and developers to take a full stack approach. Instead of developing a model and handing it over to an operations team to implement it, the scientists are also responsible for deployment. Ray has been instrumental in enabling this approach.

“We can offer a unified programming and AI development process using Ray to thread it all together,” Welbourne says. “So they can write Python code and a little bit of orchestration, and then a single scientist can develop everything from concept all the way through training and launch, and then maintaining the model and operating the model that they built.”

In addition to open source Ray, the company is using the RayDP library developed by Intel to run Spark on Ray. It has also adopted Dagster to provide data orchestration capabilities, according to the company’s Ray Summit 2023 presentation. The company developed a Python wrapper for Dagster, dubbed Owlster, to enable scientists to define their data pipelines using YAML.

Ray In Action

Ray’s big selling point is that it dramatically simplifies distributed processing. Developers can take a Python application they wrote on their laptop and scale it up to run at any scale. (Anyscale, of course, is the name of the company formed by Ray creator Robert Nishihara and his advisor, Ion Stoica, to commercialize Ray.)
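To make the laptop-to-cluster idea concrete, here is a minimal sketch of how an ordinary Python function can be handed to Ray as a remote task. The function `brake_events`, its threshold, and the sample trips are hypothetical illustrations, not Samsara’s code; only `ray.init`, `ray.remote`, and `ray.get` are real Ray calls. Ray is imported lazily so the plain function still runs where Ray is not installed.

```python
def brake_events(speeds_mph, threshold_mph=8.0):
    """Count harsh-braking events in one trip (hypothetical example logic).

    An event is a drop of more than `threshold_mph` between two
    consecutive speed samples.
    """
    return sum(
        1
        for prev, curr in zip(speeds_mph, speeds_mph[1:])
        if prev - curr > threshold_mph
    )


def run_locally(trips):
    """Plain single-process version, as one might write it on a laptop."""
    return [brake_events(trip) for trip in trips]


def run_on_ray(trips):
    """Same computation, with each trip scheduled as a Ray task."""
    import ray  # lazy import: requires `pip install ray`

    ray.init(ignore_reinit_error=True)        # starts or connects to a Ray runtime
    remote_brake_events = ray.remote(brake_events)
    futures = [remote_brake_events.remote(trip) for trip in trips]
    return ray.get(futures)                   # blocks until every task finishes


if __name__ == "__main__":
    sample_trips = [[60, 50, 49], [30, 30]]   # made-up speed samples
    print(run_locally(sample_trips))
```

The point of the sketch is that the function body does not change between the two versions; only the way it is invoked does. Whether the tasks land on one machine or many depends on the Ray runtime the script connects to.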

Samsara leverages Ray’s powerful abstraction to enable it to build powerful AI systems that run in the cloud, and then shrink the models down to run efficiently on small devices, like its AI dashcam. Welbourne appreciates how Ray brings it all together for Samsara.

“We’ve got the hardware, but we’ve also got the backend system where we’re building and training the models, but also post-processing the data and then eventually exposing it to a customer–that’s a pretty full-stack system,” he says. “The hardest part is it’s got to work well on device. The model that we build has to be optimized to run efficiently within the bounds of memory. There’s thermal constraints. We can’t overheat the dashcams or other device, and that adds more constraints to the models we build. So there’s a lot to manage.”

According to Welbourne, Samsara uses Ray in combination with the AI frameworks to develop and train AI models that deploy to the dashcams and other devices. Over the past year, the company has cut its model serving costs in the cloud by more than 50%, which the company attributes directly to Ray.

Ray itself doesn’t run on the dashcams. Instead, the company uses quantization and other techniques to shrink the models it develops with Ray to run efficiently on the company’s firmware running on the dashcams.
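The article does not say which quantization scheme Samsara uses, so the following is only a generic illustration of the idea: symmetric 8-bit quantization of a list of weights in plain Python. The function names and the sample weights are assumptions made for this sketch; production toolchains typically quantize per layer or per channel and use calibration data.

```python
def quantize_int8(weights):
    """Map float weights to integers in [-127, 127] with a single scale.

    Returns the quantized values and the scale needed to recover
    approximate floats (symmetric, per-tensor scheme).
    """
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from their int8 form."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    weights = [0.5, -1.0, 0.25, 0.0]          # made-up example weights
    q, scale = quantize_int8(weights)
    restored = dequantize(q, scale)
    worst = max(abs(w - r) for w, r in zip(weights, restored))
    print(q, scale, worst)
```

Each weight then takes one byte instead of four (for 32-bit floats), at the cost of a rounding error of at most half the scale per weight. That trade is what makes a model trained in the cloud fit within a small device’s memory and thermal budget.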

“We have a device farm in a laboratory where we have at least 10 devices hooked up all the time,” Welbourne says. “We actually did the work to connect Ray to that device farm, so using the same kind of scripting that they’ve been using to build the model, they can train it and tune it to the device.”

Without Ray, Samsara would be looking at a lot more overhead in its AI development process, according to Welbourne. That might have been an acceptable tradeoff for a company to benefit from the power of AI in the past, but Samsara is seeking out a new way forward.

“It’s been kind of a revelation that we can empower an individual scientist in such an end-to-end fashion,” he says. “It’s just something we’ve never been able to do before. And we’re finding we’re in a particularly good position because we don’t have that heavy big legacy machine learning system that a lot of bigger companies have built. We’re in a position to start fresh and we found that using Ray we can build a lot leaner and still get the end-to-end support that larger companies have.”

Related Items:

AnyScale Bolsters Ray, the Super-Scalable Framework Used to Train ChatGPT

Anyscale Branches Beyond ML Training with Ray 2.0 and AI Runtime

Why Every Python Developer Will Love Ray