AI Regs a Moving Target in the US, But Keep an Eye on Europe

(Roman Samborskyi/Shutterstock)

If you’re trying to pin down the rules and regulations for artificial intelligence in the United States, good luck. They’re evolving quickly at the moment, which makes them a moving target for American businesses that want to stay on the straight and narrow. However, the general thrust of the regulations, as well as work done by the Europeans, gives us an indication of where they will likely end up in the US.

Most eyes are on the European Union’s AI Act when it comes to setting a global standard for AI regulation. The law, which was first proposed in April 2021, was initially approved by the European Parliament in June 2023, followed by the approval of certain rules last month. The act still has one more vote to clear this year, after which there would be a 12-24 month waiting period before the law began to be enforced.

The EU AI Act would create a common regulatory and legal framework for the use of AI in Europe. It would control both how AI is developed and what companies can use it for, and would set consequences for failure to adhere to requirements.

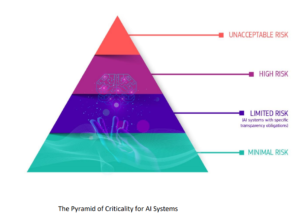

There are three broad categories of AI usage that the AI Act is seeking to regulate, according to Traci Gusher, Americas Data and Analytics Leader for EY. At the top of the list are AI uses that would be banned outright, such as remote biometric identification through facial recognition.

Traci Gusher, Americas Data and Analytics Leader for EY, speaking at Tabor Communications’ HPC + AI on Wall Street, September 2023

“So the idea that you could be remote anywhere in the world looking at a face [of] somebody in Europe and identify them for [insert here] reasons, was part of what was suggested as being inappropriate use of artificial intelligence,” Gusher said during the HPC + AI on Wall Street conference in New York City last fall.

The second category is high-risk AI usage, or AI uses that can be appropriate but also must be more closely governed, and may require approval and tracking by regulators, Gusher said.

“These include where you’re [using] AI on things like monitoring infrastructure, in usage in educational and schools and law enforcement and a number of other areas,” she said. “And what this really says is that, if you’re using AI for one of these types of purposes, it needs to be governed more closely, and in fact, it has to be registered with the EU.”

The third area of the EU AI Act relates to generative AI (GenAI) and covers transparency, which is not exactly a GenAI strong suit. According to Gusher, the EU AI Act will put certain limits on GenAI usage.

“Really the crux of what the regulation dictates for generative AI is that it needs to be transparent,” she said. “There’s a lot of different pieces of the regulations that are listed here as well as some of the frameworks and guidelines to talk about transparency, but it got really specific in saying that [if] generative AI is being used in the production of text, of voice, of video, of anything, it has to clearly state that it is using it.”

Europe is leading the way in AI regulation around the globe with the AI Act, just as it did with the General Data Protection Regulation (GDPR) several years ago. But the EU AI Act is not the only game in town when it comes to AI regulation, Gusher said during her presentation.

China is also moving forward with its own AI regulation. Dubbed the Internet Information Service Algorithmic Recommendation Management Provisions, the regulation is really aimed at ensuring that AI and machine learning uses are implemented in an ethical manner, that they uphold Chinese morality, are accountable and transparent, and “actively spread positive energy.”

The Chinese IISARMP (for lack of a better name) went into effect in March 2022, according to an August 2023 Latham & Watkins brief. The People’s Republic of China added another law in January 2023 to regulate “deep synthesis” and accounted for generative AI with another new law in July 2023.

AI is also coming under regulation in the Great White North. The Canadian legislature is still considering Bill C-27, a far-reaching addition to the law that would implement certain data privacy requirements as well as regulate AI. It’s unclear whether the law will go into effect before the Canadian election in 2025.

Regulation is not as far along in the US. The National AI Initiative Act of 2020, which was signed into law by President Trump, was aimed primarily at increasing AI investment, opening up federal computing resources for AI, setting AI technical standards, building an AI workforce, and engaging with allies on AI. According to Gusher, it has not resulted in AI regulation.

President Biden went a bit further with his October 2023 executive order, which requires developers of foundation models to notify the government when training those models and to share the results of their safety tests. It also requires the Department of Commerce to develop standards for detecting AI-generated content, such as through watermarks. However, the executive order lacks the force of law.

There have been some attempts to pass a national law. Senators Richard Blumenthal and Josh Hawley introduced a bipartisan AI framework last August, but it hasn’t moved forward yet. In lieu of new laws passed by Congress, various states have picked up their AI regulation pens. According to Gusher, no fewer than 25 states, plus the District of Columbia, have introduced AI bills, and 14 of them have adopted resolutions or enacted legislation.

Gusher points out that not all AI rules may come from the national or state legislatures. For example, one year ago, the federal government’s National Institute of Standards and Technology (NIST) published an AI risk management framework that aims to help organizations adopt AI in a controlled and managed manner.

The NIST AI Risk Management Framework (RMF), which is available for download from NIST, encourages adopters to get a handle on AI by adopting its recommendations in four areas: Map, Measure, Manage, and Govern. After mapping out their AI tech and usage, organizations devise ways to measure risks both qualitatively and quantitatively. Those measurements help them manage the risks on an ongoing basis. Together, these capabilities give organizations the means to govern their AI and its risks.

While the NIST RMF is voluntary and not backed by any government force, it’s nevertheless gaining quite a bit of momentum in terms of adoption across industry, Gusher said.

“The NIST framework is really the one that everyone is rooting in, in terms of starting to think about what kinds of standards and frameworks should be applied to the artificial intelligence that they are building within their organizations,” she said. “This is a pretty robust and comprehensive framework that gives us a good roadmap to the types of things that organizations need to think about, as well as the things that they’re going to have to monitor and govern. And it’s really the framework that is being recognized as the basis for what will help organizations prepare for impending regulation.”

Eventually, AI regulation will come to the US, Gusher said. When it does, it will most likely follow the pattern set by GDPR, which has been replicated by numerous states in the US, including California with its CCPA (and subsequent CPRA). Whatever AI regulation eventually emerges in the US, Gusher said, it will likely resemble the EU AI Act.

“Europe was really the first group of countries to align around a standard for data privacy and customer protection for data privacy globally. The result of which is that not only is it the standard in Europe, but it has been used as a basis and the replication for legislation around the world,” she said.

“The same types of thing we expect to happen with artificial intelligence regulation, where once the EU lands on and deploys this– the finalization of this legislation is scheduled to be completed by the end of this year [2023] and enacted next year [2024],” she continued. “Once we have a clear grasp on exactly what is going to be deployed in Europe, we will expect that we will start to see other countries follow suit outside of Europe.”
