Databricks Sees Compound Systems as Cure to AI Ailments

(Daniel Chetroni/Shutterstock)

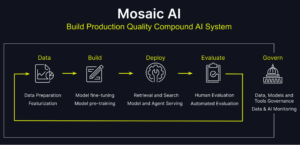

Databricks today unveiled a series of enhancements to its Mosaic AI stack aimed at addressing some of the challenges that customers face building GenAI systems, including accuracy, toxicity, latency, and cost. At the core of Databricks’ approach is a belief that building AI systems by stringing together multiple smaller AI models will deliver applications that outperform those built atop a single monolithic large language model (LLM).

Just as monolithic mainframe applications are being broken up and replaced with a collection of more nimble REST microservices, the days of monolithic GenAI apps built atop a single LLM would appear to be numbered. That’s according to Databricks, which introduced its new compound systems approach with Mosaic AI during the second day of its Data + AI Summit.

The problem stems from different LLMs having different capabilities when it comes to metrics like quality, privacy, latency, and cost. For instance, OpenAI’s GPT-4 may provide the highest accuracy and lowest hallucination rate, but it may not fit the bill when it comes to cost and latency. Similarly, Llama-3 may check the boxes for quality and tunability, but leave something to be desired when it comes to toxicity and privacy.

The solution, according to Databricks, is to build compound GenAI applications that utilize the best of each LLM. With today’s updates to its Mosaic AI platform, Databricks says customers can string together compound AI systems that connect LLMs to customers’ data using vector databases, vector search, and retrieval augmented generation (RAG) capabilities.
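One simple way to picture “the best of each LLM” is to score candidate models on the metrics listed above and route each sub-task to whichever model best matches that sub-task’s priorities. The sketch below is illustrative only: the model names and scores are made-up placeholders, not measurements of GPT-4, Llama-3, or any other model, and it is not how Mosaic AI selects models.

```python
from __future__ import annotations

from dataclasses import dataclass

METRICS = ("quality", "privacy", "speed", "affordability")


@dataclass(frozen=True)
class ModelProfile:
    """Hypothetical per-model scores, each normalized to 0..1 (higher is better)."""
    name: str
    quality: float
    privacy: float
    speed: float          # higher means lower latency
    affordability: float  # higher means lower cost


def pick_model(profiles: list[ModelProfile], weights: dict[str, float]) -> ModelProfile:
    """Return the profile with the highest weighted score for one sub-task."""
    def score(p: ModelProfile) -> float:
        return sum(weights.get(m, 0.0) * getattr(p, m) for m in METRICS)
    return max(profiles, key=score)


# Placeholder numbers for illustration only; not benchmarks of any real model.
PROFILES = [
    ModelProfile("large_hosted_model", quality=0.9, privacy=0.5, speed=0.3, affordability=0.2),
    ModelProfile("small_open_model", quality=0.6, privacy=0.9, speed=0.8, affordability=0.9),
]

# Each sub-task of a compound system weights the metrics differently.
TASK_WEIGHTS = {
    "hard_reasoning": {"quality": 1.0},
    "bulk_extraction": {"speed": 0.5, "affordability": 0.5},
}

routing = {task: pick_model(PROFILES, w).name for task, w in TASK_WEIGHTS.items()}
print(routing)
# {'hard_reasoning': 'large_hosted_model', 'bulk_extraction': 'small_open_model'}
```

A weighted sum is only one possible selection rule; a real system would base such choices on its own evaluations rather than fixed scores.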

One Databricks customer that has adopted the compound AI approach is FactSet, according to Joel Minnick, Databricks vice president of marketing. FactSet developed a GenAI system for a pharmaceutical client, but wasn’t happy with the initial performance.

“They had an LLM that was building formulas for them,” Minnick tells Datanami. “Just using GPT-4, they had 55% accuracy and 10 seconds of latency.”

After working with Databricks, FactSet decided to take a different approach. Instead of relying on GPT-4 for everything, they brought in Google’s Gemini to generate the formula, used Meta’s Llama-3 to generate the arguments, and used OpenAI’s GPT-3.5 to bring it all together, Minnick says, with a generous helping of vector and RAG capabilities in Mosaic AI.

When it was all said and done, the new system was able to achieve 87% accuracy with three seconds of end-to-end latency, Minnick says.

“When customers start building their end-to-end application this way, they can get the accuracy way up and latency way down, but it’s also much easier to iterate on them too, because I have to just solve individual pieces of the problem, rather than try to have to pull the overall system apart,” he says.
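The division of labor Minnick describes can be pictured as a pipeline of narrow stages whose partial outputs are merged at the end. The sketch below uses hypothetical stub functions in place of the three models (the real model calls, prompts, and FactSet’s formula syntax are not described in the article), so it shows only the structure: each stage can be tested, swapped, or tuned on its own.

```python
from __future__ import annotations


def pick_function(request: str) -> str:
    """Stage 1 (stub standing in for one model): choose the formula function."""
    return "AVG" if "average" in request.lower() else "SUM"


def extract_arguments(request: str) -> list[str]:
    """Stage 2 (stub standing in for a second model): pull out the arguments.

    Naive placeholder rule: all-caps words of 2-5 characters are treated as tickers.
    """
    return [w for w in request.split() if w.isupper() and 2 <= len(w) <= 5]


def assemble(function: str, arguments: list[str]) -> str:
    """Stage 3 (stub standing in for a third model): merge the partial outputs."""
    return f"{function}({', '.join(arguments)})"


def run_pipeline(request: str) -> str:
    # The stages are independent functions, so any one of them can be replaced,
    # re-prompted, or evaluated without pulling the rest of the system apart.
    function = pick_function(request)
    arguments = extract_arguments(request)
    return assemble(function, arguments)


print(run_pipeline("Compute the average price of ABC and XYZ"))  # AVG(ABC, XYZ)
```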

Databricks believes that this compound approach will work for a variety of use cases, according to Matei Zaharia, co-founder and CTO of Databricks.

“We believe that compound AI systems will be the best way to maximize the quality, reliability, and measurement of AI applications going forward, and may be one of the most important trends in AI in 2024,” Zaharia says in a press release.

The trick will be how customers string all of this together. Databricks hopes to simplify that with new Mosaic AI capabilities for chaining models, using LangChain or other techniques, and for connecting the models to customers’ data using RAG and other LLM prompting techniques.

To that end, Databricks today unveiled several new pieces of Mosaic AI, the GenAI software stack that it obtained with its acquisition of MosaicML last year for $1.3 billion. The new additions include Agent Framework, Agent Evaluation, Tools Catalog, Model Training, and Gateway. All of these new offerings are now in public preview except Tools Catalog, which is in private preview.

Mosaic AI Agent Framework is designed to utilize RAG techniques that connect foundation models to customers’ proprietary data, which remains in Unity Catalog where it’s secured and governed.

Agent Evaluation, meanwhile, is designed to help customers monitor their GenAI applications for quality, consistency, and performance. It’s really aimed at doing three things, Minnick says. First, it will enable teams to collaboratively label responses from models to get to “ground truth.” Second, it will foster the creation of LLM judges that pass judgment on the output of production LLMs. Lastly, it will support tracing in GenAI apps.

“Think of tracing like being able to debug an LLM, being able to step back through every step in the chain that the model took to deliver that answer,” Minnick says. “So taking a black box that a lot of LLMs are today and opening that box up and saying exactly why did it make the decisions that it made.”

AI models are similar to kids: “I don’t know why you just did the thing you did that was really stupid,” Minnick says. “I see you did it, and now we can have a conversation about why that was the wrong thing to do.”
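Tracing, as Minnick describes it, amounts to recording each step of a chain so it can be stepped back through afterwards. A minimal sketch, assuming a chain built from plain Python functions and a hypothetical in-memory `Trace` recorder (this is not the Agent Evaluation API), wraps each step so that its inputs, output, and duration are logged in the order the steps finish.

```python
import time
from dataclasses import dataclass, field
from functools import wraps


@dataclass
class Span:
    """One recorded step: its name, positional inputs, output, and duration."""
    name: str
    inputs: tuple
    output: object
    seconds: float


@dataclass
class Trace:
    """Hypothetical in-memory recorder; a span is appended when its step finishes."""
    spans: list = field(default_factory=list)

    def step(self, name):
        """Decorator that records each call to the wrapped function (positional args only)."""
        def decorate(fn):
            @wraps(fn)
            def wrapper(*args):
                start = time.perf_counter()
                result = fn(*args)
                self.spans.append(Span(name, args, result, time.perf_counter() - start))
                return result
            return wrapper
        return decorate


trace = Trace()


@trace.step("retrieve")
def retrieve(question):
    # Stub: a real step might query a vector index.
    return ["doc-1"]


@trace.step("generate")
def generate(question, context):
    # Stub: a real step would call an LLM with the retrieved context.
    return f"answer citing {', '.join(context)}"


question = "How did revenue change?"
answer = generate(question, retrieve(question))

# Step back through the chain: what did each stage receive and produce?
for span in trace.spans:
    print(f"{span.name}: in={span.inputs!r} out={span.output!r} ({span.seconds:.6f}s)")
```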

Mosaic AI Tools Catalog, meanwhile, lets organizations govern, share, and register tools using Unity Catalog, Databricks’ metadata catalog that sits between compute engines and data (see today’s other news about the open sourcing of Unity Catalog).

If customers want to fine-tune their foundation models on their own data to gain better accuracy and decrease cost, they can choose Mosaic AI Model Training. Mosaic AI Gateway functions as an abstraction layer that sits between GenAI applications and LLMs and allows users to switch out LLMs without changing application code. It will also provide governance.

“It’s to move the ball forward in being able to go and pursue compound systems,” Minnick says. “We have a strong belief this is the future of what generative applications are going to look like. And so giving customers the toolsets and the capability to build and deploy these compound systems as they begin to move away from just monolithic models.”
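The Gateway’s role as an abstraction layer between applications and LLMs can be sketched in a few lines. Everything below is hypothetical (the `Gateway` class, the `"chat"` route name, the stub providers, and a blocked-terms check standing in for governance); it is not the Mosaic AI Gateway API, only an illustration of why swapping a model need not touch application code.

```python
from __future__ import annotations

from typing import Protocol


class ChatModel(Protocol):
    """Anything with a complete(prompt) -> str method can sit behind the gateway."""

    def complete(self, prompt: str) -> str: ...


class StubModelA:
    """Hypothetical provider stub standing in for one hosted LLM."""

    def complete(self, prompt: str) -> str:
        return f"[A] {prompt}"


class StubModelB:
    """Hypothetical provider stub standing in for a different LLM."""

    def complete(self, prompt: str) -> str:
        return f"[B] {prompt}"


class Gateway:
    """Hypothetical gateway: apps call a route name, never a provider directly."""

    def __init__(self, routes: dict[str, ChatModel], blocked_terms: set[str] | None = None):
        self._routes = dict(routes)
        self._blocked = {t.lower() for t in (blocked_terms or set())}
        self.audit_log: list[tuple[str, str]] = []

    def set_route(self, route: str, model: ChatModel) -> None:
        # Swapping the model behind a route is a configuration change;
        # application code that calls the route is untouched.
        self._routes[route] = model

    def complete(self, route: str, prompt: str) -> str:
        # Toy governance: reject prompts containing blocked terms, log the rest.
        if any(term in prompt.lower() for term in self._blocked):
            raise ValueError("prompt rejected by gateway policy")
        self.audit_log.append((route, prompt))
        return self._routes[route].complete(prompt)


def summarize_app(gateway: Gateway) -> str:
    # The application only knows the route name "chat".
    return gateway.complete("chat", "Summarize Q2 results")


gw = Gateway({"chat": StubModelA()}, blocked_terms={"password"})
print(summarize_app(gw))  # [A] Summarize Q2 results
gw.set_route("chat", StubModelB())
print(summarize_app(gw))  # [B] Summarize Q2 results
```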

Another important component of compound applications is Vector Search, which Databricks made generally available last month. Vector Search functions as a vector database that can store and serve vector embeddings to LLMs. It also provides vector capabilities for search engine use cases, and it supports keyword search as well.
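To make the vector-versus-keyword distinction concrete: vector search ranks stored items by embedding similarity, while keyword search matches exact terms, such as error codes, that embeddings can blur. The sketch below is a hypothetical in-memory `HybridIndex` with hand-written three-dimensional vectors standing in for real embeddings, plus a simple weighted blend (`alpha`) as one common way to combine the two signals; it does not reflect how Databricks Vector Search scores results.

```python
from __future__ import annotations

import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class HybridIndex:
    """Hypothetical in-memory index of (text, embedding) pairs."""

    def __init__(self) -> None:
        self._docs: list[tuple[str, list[float]]] = []

    def add(self, text: str, embedding: list[float]) -> None:
        self._docs.append((text, embedding))

    def vector_search(self, query_vec: list[float], k: int = 2) -> list[str]:
        # Rank purely by embedding similarity.
        ranked = sorted(self._docs, key=lambda d: cosine(query_vec, d[1]), reverse=True)
        return [text for text, _ in ranked[:k]]

    def keyword_search(self, keyword: str) -> list[str]:
        # Exact whole-word match, case-insensitive.
        kw = keyword.lower()
        return [text for text, _ in self._docs if kw in text.lower().split()]

    def hybrid_search(self, query_vec: list[float], keyword: str,
                      k: int = 2, alpha: float = 0.5) -> list[str]:
        # Score = alpha * cosine similarity + (1 - alpha) * keyword hit (1.0 or 0.0).
        kw = keyword.lower()

        def score(doc: tuple[str, list[float]]) -> float:
            text, vec = doc
            hit = 1.0 if kw in text.lower().split() else 0.0
            return alpha * cosine(query_vec, vec) + (1 - alpha) * hit

        ranked = sorted(self._docs, key=score, reverse=True)
        return [text for text, _ in ranked[:k]]


index = HybridIndex()
# Made-up 3-d vectors standing in for real embeddings.
index.add("refund policy for damaged goods", [0.9, 0.1, 0.0])
index.add("how to return a broken item", [0.8, 0.2, 0.1])
index.add("checkout fails with error E42", [0.1, 0.1, 0.9])

query_vec = [1.0, 0.0, 0.0]  # pretend embedding of a refund-related question
print(index.vector_search(query_vec))
# ['refund policy for damaged goods', 'how to return a broken item']
print(index.keyword_search("E42"))
# ['checkout fails with error E42']
print(index.hybrid_search(query_vec, "E42"))
# ['checkout fails with error E42', 'refund policy for damaged goods']
```

The exact-match term pulls in a document that pure similarity ranked last, which is the usual motivation for offering keyword search alongside vectors.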

For more details on this set of announcements, read this blog post by Naveen Rao and Patrick Wendell.

Related Items:

Databricks to Open Source Unity Catalog

All Eyes on Databricks as Data + AI Summit Kicks Off

What Is MosaicML, and Why Is Databricks Buying It For $1.3B?