LOTUS Promises Fast Semantic Processing on LLMs

Researchers at Stanford University and UC Berkeley recently announced the version 1.0 release of LOTUS, an open source query engine designed to make LLM-powered data processing fast, easy, and declarative. The project’s backers say developing AI applications with LOTUS is as easy as writing Pandas, while providing performance and speed boosts compared to existing approaches.

There’s no denying the great potential to use large language models (LLMs) to build AI applications that can analyze and reason across large amounts of source data. In some cases, these LLM-powered AI apps can meet, or even exceed, human capabilities in advanced fields, like medicine and law.

Despite the massive upside of AI, developers have struggled to build end-to-end systems that can take full advantage of the core technological breakthroughs in AI. One of the big drawbacks is the lack of the right abstraction layer. While SQL is algebraically complete for structured data residing in tables, we lack unified commands for processing unstructured data residing in documents.

That’s where LOTUS–which stands for LLMs Over Tables of Unstructured and Structured data–comes in. In a new paper, titled “Semantic Operators: A Declarative Model for Rich, AI-based Analytics Over Text Data,” the computer science researchers–including Liana Patel, Sid Jha, Parth Asawa, Melissa Pan, Harshit Gupta, and Stanley Chan–discuss their approach to solving this big AI challenge.

The LOTUS researchers, who are advised by legendary computer scientists Matei Zaharia, a Berkeley CS professor and creator of Apache Spark, and Carlos Guestrin, a Stanford professor and creator of many open source projects, say in the paper that AI development currently lacks “high-level abstractions to perform bulk semantic queries across large corpora.” With LOTUS, they are seeking to fill that void, starting with a bushel of semantic operators.

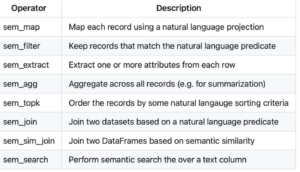

“We introduce semantic operators, a declarative programming interface that extends the relational model with composable AI-based operations for bulk semantic queries (e.g., filtering, sorting, joining or aggregating records using natural language criteria),” the researchers write. “Each operator can be implemented and optimized in multiple ways, opening a rich space for execution plans similar to relational operators.”

These semantic operators are packaged into LOTUS, the open source query engine, which is callable through a DataFrame API. The researchers found several ways to optimize the operators speed up processing of common operations, such as semantic filtering, clustering and joins, by up to 400x over other methods. LOTUS queries match or exceed competing approaches to building AI pipelines, while maintaining or improving on the accuracy, they say.

“Akin to relational operators, semantic operators are powerful, expressive, and can be implemented by a variety of AI-based algorithms, opening a rich space for execution plans and optimizations under the hood,” one of the researchers, Liana Patel, who is a Stanford PhD student, says in a post on X.

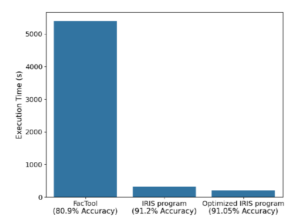

Comparison of state-of-the-art fact-checking tools (FacTool) vs a short LOTUS program (middle) and the same LOTUS program implemented with declarative optimizations and accuracy guarantees (right). (Source: “Semantic Operators: A Declarative Model for Rich, AI-based Analytics Over Text Data”)

The semantic operators for LOTUS, which is available for download here, implement a range of functions on both structured tables and unstructured text fields. Each of the operators, including mapping, filtering, extraction, aggregation, group-bys, ranking, joins, and searches, are based on algorithms selected by the LOTUS team to implement the particular function.

The optimization developed by the researchers are just the start for the project, as the researchers envision a wide variety being added over time. The project also supports the creation of semantic indices built atop the natural language text columns to speed query processing.

LOTUS can be used to develop a variety of different AI applications, including fact-checking, multi-label medical classification, search and ranking, and text summarization, among others. To prove its capability and performance, the researchers tested LOTUS-based applications against several well-known datasets, such as the FEVER data set (fact checking), the Biodex Dataset (for multi-label medical classification), the BEIR SciFact (for search and ranking), and the ArXiv archive (for text summarization).

The results demonstrate “the generality and effectiveness” of the LOTUS model, the researchers write. LOTUS matched or exceeded the accuracy of state-of-the-art AI pipelines for each task while running up to 28× faster, they add.

“For each task, we find that LOTUS programs capture high quality and state-of-the-art query pipelines with low development overhead, and that they can be automatically optimized with accuracy guarantees to achieve higher performance than existing implementations,” the researchers wrote in the paper.

You can read more about LOTUS at lotus-data.github.io

Related Items:

Is the Universal Semantic Layer the Next Big Data Battleground?

AtScale Claims Text-to-SQL Breakthrough with Semantic Layer

A Dozen Questions for Databricks CTO Matei Zaharia