Live from GTC Asia: Accelerating Big Science

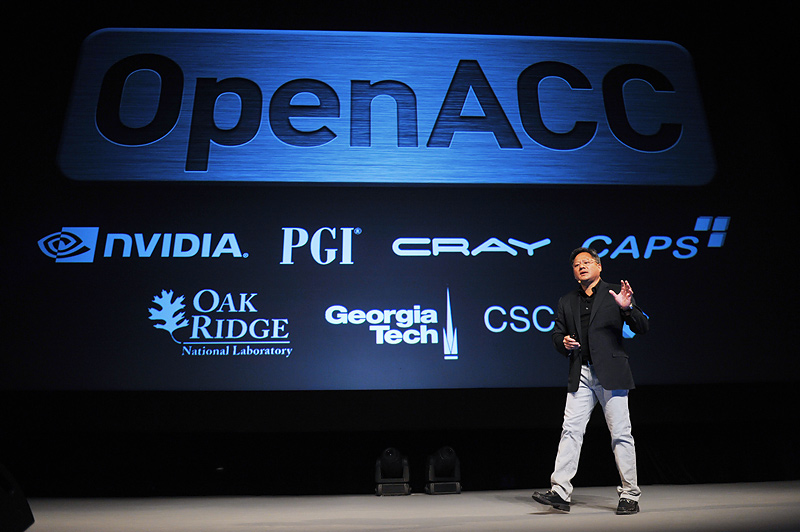

GPU computing is becoming a predominant force in supercomputing and is finding its way into a number of big data disciplines in both science and industry. As NVIDIA CEO Jen-Hsun Huang said during his opening keynote at this week's GTC Asia event, the GPU is the engine of twenty-first century computing.

During his kickoff speech, Huang traced the company's roots in gaming up to the present, noting NVIDIA's investment in the future of entertainment. But the real star of the show this week was science, along with the potential for accelerating fields that are dealing with unprecedented amounts of data and that have a constant need for efficient, high-performance compute power.


Huang's message has already resonated in academia, where many new Top500-caliber systems, including the upcoming Titan supercomputer, carry large numbers of GPUs. But as the conference topics unfolded, it became clear that many enterprise ears are tuning in as well, especially in data-heavy industries such as life sciences and oil and gas.

We were on-site this week at GTC in Beijing to learn firsthand about the many case studies and real-world applications in which GPUs are powering work on massive datasets. It was hard not to walk away from the event feeling that academic use of the technology is the first stepping stone toward much broader use of accelerators for big data applications. Further, innovations in programming (including opening up CUDA and making it more broadly accessible and simpler to implement via directives) could bring GPUs into more enterprise IT shops, marking a new era for what the datacenter of the future could look like.

Many of the applications that can take advantage of GPUs need not only rapid computation but also the ability to crunch datasets of unprecedented size. Below we highlight some of the key areas and topical tracks that demonstrated how data-intensive science and the industries that rely on its innovations are finding new capabilities through GPU acceleration.

GPUs in Life Sciences and Genomics

Covering studies of gene expression as well as metagenomic and next-generation sequencing approaches, this track offered detailed views of how applications in these areas, and in related fields such as quantum chemistry and molecular dynamics, are aided by GPU computing.

One of the more compelling topics was the GPU-accelerated life sciences research under way at the Beijing Genomics Institute (BGI). Bing Qiang Wang, head of HPC at BGI, discussed how the massive volumes of data being generated are making large-scale processing and analysis a significant challenge. He described how BGI has adopted existing open source, GPU-accelerated bioinformatics tools to run its analysis pipelines at lower cost and with higher throughput. He also described how new GPU-powered tools are producing speedups of 10-50x compared to traditional genomics systems, making the big data problems his institute is addressing less of a drain on time, cost and power.

Another session that piqued the interest of attendees with data-heavy computational concerns addressed the acceleration of MrBayes, a software package for phylogenetic inference that proposes a "tree of life" for a collection of species whose DNA sequences are known. The software performs Bayesian inference and model choice across a wide range of phylogenetic and evolutionary models, using Markov chain Monte Carlo methods to estimate the models' parameters. Dr. Xiaoguang Lue from Nankai University discussed how the GPU version of this data-intensive software achieved a speedup of more than 20x over serial MrBayes on a large dataset using a single GPU, and nearly linear speedups on a GPU cluster.

Oil and Gas: Big Data, Big Acceleration

When it comes to massive datasets, few areas rack up the petabytes faster than the oil and gas industry. As NVIDIA stated of this track, "the cost of finding and extracting energy resources continues to escalate as easy hydrocarbons have been discovered. It takes increasingly more data and compute resources to make the best choices." Accordingly, these sessions featured a number of oil and gas industry leaders who discussed how their data-heavy field is benefiting from the computational boost provided by GPU computing.

This week Anthony Lichnewsky, HPC Engineer at Schlumberger, discussed how the oil and gas industry is embracing GPUs to tackle new and complex geological settings around the world. He presented an overview of the industry's business and geopolitical drivers and explained how GPUs are meeting the big data and computational challenges of seismic modeling, imaging and inversion.

Adding to Lichnewsky's assessment, Richard Bland, HP's Global Oil and Gas HPC Segment Manager, weighed in on how HP is putting NVIDIA's GPUs to work in its own machines across the oil and gas workflow. He claims that with GPUs, the oil and gas industry is seeing higher throughput, sharper subsurface images, reduced cycle times and improved TCO. To highlight these points, Bland presented an HP/NVIDIA design centered on oil and gas applications that the companies say can transform the industry. He demonstrated reference architectures benchmarked in large-scale, GPU-based production environments, showing one way the industry can contend with growing datasets and the need to extract greater clarity and insight from them.

Data-Heavy CFD, CAE Applications and GPUs

This track featured progress in GPU computing for CAE and CFD applications, as well as a few asides on the use of GPUs in computational structural mechanics. Sessions covered algorithm and application research from academic and government research institutes, as well as industry-scale applications, including work done by ISVs such as ANSYS and SIMULIA.

Peter Lu, a research fellow at Harvard, presented on the topic of "Enabling Interactive Physics Experiments with CUDA." He noted that many GPGPU applications marshal hundreds of GPUs in large computer clusters, enabling massive simulations in which the ratio of calculation to actual data is large. However, as he described at GTC this week, "In the laboratory, many experimental applications have large quantities of data, so large, in fact that moving the data to a remote cluster may take longer than analyzing [it] once it arrives." Lu pointed out that the "ability to bring supercomputing to the data in the form of NVIDIA GPUs allows high-throughput analysis where results can be obtained fast enough to guide subsequent experiments." Lu believes this creates a science-boosting "feedback loop" that can be applied to analyzing images and data in the lab, including swimming bacteria, diffusing colloids and optical tomography, as well as phase-separating liquid-gas colloidal mixtures aboard the space station.

Climate and Weather Applications

A large number of sessions were devoted to the range of data-intensive applications that fall under climate and weather modeling. These sessions focused on how to port these complex applications to CUDA and optimize them. Several speakers from research and operational institutes presented their requirements for porting existing Fortran algorithms to CUDA using commercial compilers from PGI and CAPS. Speakers also described their methodologies for developing new data-intensive weather and climate models directly in CUDA.

On CUDA, China and the Data Deluge…

NVIDIA says China was an obvious choice to host GTC, both because two of the world's top supercomputers are located here (Tianhe-1A and Nebulae) and because the country is finding innovative ways to put GPUs to work across many disciplines, including oil and gas, molecular modeling, fusion research and genetic engineering.

Several of the Chinese attendees I spoke with, who had come to learn more about GPU-enabled science, said the main draw for them was the deep-dive sessions on getting started with CUDA. During the show, NVIDIA offered a number of introductory CUDA sessions, including one that took attendees from a C or C++ starting point all the way to writing their first "Hello, World" CUDA C program. These sessions appeared to generate a great deal of excitement, and several of the Chinese researchers, many of them graduate students, said they were hoping to find ways to bring GPUs into their areas of study.


Later this week we will feature a story from the conference here in Beijing that highlights the use of GPUs to accelerate big data business intelligence and enterprise analytics, reinforcing the point that GPU computing could indeed become a central component as the big data era unfolds.
