Tag: supercomputer

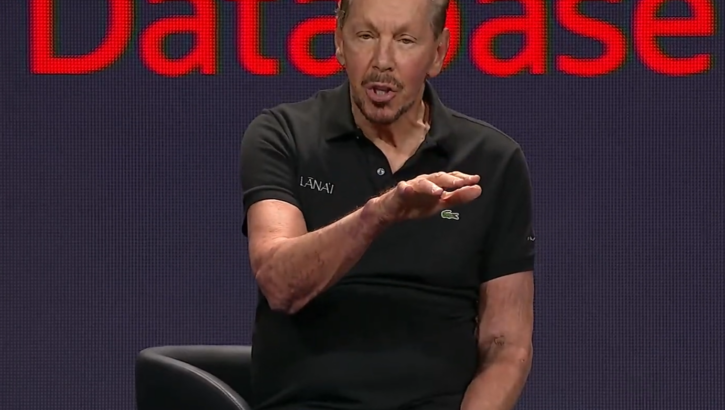

Oracle Bolsters Big Data, Analytics, and AI at CloudWorld

Oracle unleashed a torrent of new analytic and AI capabilities this week at its Oracle CloudWorld conference in Las Vegas. The fun kicked off yesterday with an expansion of capabilities in AWS and Google Cloud, updates t Read more…

Microsoft Seeks $10B Investment in OpenAI: Report

Microsoft, which is already invested in OpenAI to the tune of $1 billion and enjoys an exclusive partnership with it to train its AI models, reportedly is looking to invest $10 billion in the company following the breako Read more…

HPCwire Readers’ and Editors’ Choice Awards Turns 15

A hallmark of sustainability is this: If you are not serving a need effectively and efficiently you do not last. The HPCwire Readers’ and Editors’ Choice awards program has stood the test of time. Each year, our re Read more…

Pushing the Scale of Deep Learning at ISC

Deep learning is the latest and most compelling technology strategy to take aim at the decades-old “drowning in data/starving for insight” problem. But contrary to the commonly held notion, deep learning is more than Read more…

Spelunking Shops and Supercomputers

While this might come as a surprise to those outside the bubble, the majority of data collected within an enterprise setting is machine-generated. That includes everything from operational data (messaging, web services, networking and other system data) to customer-facing systems and beyond. This week we talk with Splunk about its role everywhere from IT shops to supercomputers and... Read more…

World’s Top Data-Intensive Systems Unveiled

This year at the International Supercomputing Conference in Germany, the list of the top data-intensive systems was revealed with heavy tie-ins to placements on the Top500 list of the world's fastest systems, entries from most of the national labs, and plenty of BlueGene action. The list of the top "big data" systems, called the Graph500, measures the performance of.... Read more…

Understanding Data Intensive Analysis on Large-Scale HPC Compute Systems

Data-intensive computing is an important and growing sector of scientific and commercial computing, and it places unique demands on computer architectures. While these demands continue to grow, most present systems, and even planned future systems, might not meet them very effectively. The majority of the world’s most powerful supercomputers are designed for running numerically intensive applications that can make efficient use of distributed memory. A number of factors limit the utility of these systems for knowledge discovery and data mining. Read more…

SSDs and the New Scientific Revolution

SSDs are anything but a flash in the pan for big science. With news of the world’s most powerful data-intensive systems leveraging the storage technology, including the new Gordon supercomputer, and other new “big data” research centers tapping into the wares... Read more…

Rutgers to Become Big Data Powerhouse

Today Rutgers University released official word about a new high performance computing center that is dedicated to tackling big data problems in research and science as well as supporting the complex needs of business users in the... Read more…

Cray Opens Doors on Big Data Developments

This week we talked with Cray CEO Peter Ungaro and the new lead behind the company's just-announced big data division, Arvind Parthasarathi. The latter joined the supercomputing giant recently from Informatica and brings a fresh, software-focused presence to a company that is now an official... Read more…

Bar Set for Data-Intensive Supercomputing

This week the San Diego Supercomputer Center introduced the flash-based, shared memory Gordon supercomputer. The system was built by Appro and delivers performance in the 36 million IOPS range, and the center's director left no doubt that a new era of data-intensive science has begun. Read more…